TL;DR:

- Enterprise AI at scale demands a shift from isolated experiments to a unified, governed framework that integrates automation, compliance, and scalability across AWS, Azure, and GCP.

- Infrastructure-as-Code (IaC) forms the foundation for consistent, auditable, and repeatable AI operations, preventing drift, reducing costs, and ensuring security.

- Firefly simplifies multi-cloud complexity by discovering unmanaged resources, auto-generating IaC, enforcing guardrails, and maintaining centralized visibility and policy control.

- Automation and governance become continuous processes, embedding review, compliance, and cost management into everyday AI workflows, enabling teams to scale efficiently and securely across the enterprise.

AI today is no longer a side project or an isolated lab experiment; it has become part of the production fabric of the enterprise. On AWS, Azure, and GCP, companies now operate inference services that process thousands of requests per second, manage regulated data pipelines that cross business units, and retrain models as part of routine development cycles. These workloads sit alongside ERP, CRM, and payment platforms, not as experiments but as mission-critical systems.

At this scale, the challenge is no longer hardware. Every major cloud offers an abundance of CPUs, GPUs, storage, and networking. The real test lies in managing these AI environments reliably. Idle compute clusters quietly drain budgets. IAM roles and network policies drift as teams make urgent manual changes, undermining compliance. Costs spiral as workloads multiply across multiple providers without unified guardrails. A recent TechRadar survey found that ninety-four percent of IT leaders already struggle with cloud costs, and AI’s resource intensity magnifies that pressure even further.

Enterprises that succeed do not treat AI as a loose collection of models and pipelines. They approach it as a lifecycle. It begins with strategy, where objectives are defined and aligned with business priorities, and continues with careful planning of use cases, data flows, and infrastructure. Readiness then becomes a matter of building the skills, platforms, and operational baselines that make scale possible. From there, governance, security, and day-to-day management become ongoing disciplines, not afterthoughts. Throughout this lifecycle, responsible AI principles act as the foundation, with fairness, transparency, and accountability woven into every step.

This is the difference between scattered projects and a true enterprise AI strategy. It is not just about deploying models, but about creating a structured way of working that can scale safely, cost-effectively, and in line with regulatory and business expectations.

From Vision to Execution: Defining an AI Strategy

The journey to successfully adopting AI in an enterprise doesn’t begin with choosing the right cloud service or setting up powerful GPUs. It starts with clearly defining your goals. An AI strategy is about understanding what your business needs to achieve with AI. For example, a bank may want to utilize AI to expedite fraud detection without violating any regulatory rules. A retailer might use AI to provide more personalized recommendations to customers while making sure they protect customer data.

The key challenge here is turning these broad goals into specific, actionable steps. If this isn’t done carefully, organizations often rush into small, isolated AI projects, and each team works independently with their own set of cloud accounts, security settings, and data pipelines. These projects can quickly get out of control, leading to skyrocketing costs, compliance risks, and inconsistent results.

A successful AI strategy bridges this gap by creating a centralized plan for all AI efforts in the organization. It ensures that teams follow standardized processes for building, deploying, and managing AI models across different cloud platforms (like AWS, Azure, and GCP). This strategy should clearly define:

- How models are created and trained

- How data flows through the system

- How compliance and security will be handled

The strategy doesn’t just live in a presentation slide. It needs to be built into the organization’s daily operations through automated workflows and Infrastructure as Code (IaC). This ensures that AI efforts are cost-effective, secure, and scalable.

Scaling AI from Pilot Projects to Enterprise-Level Operations

Many organizations start their AI journey with small, isolated projects. These might include a chatbot built by the innovation lab, a fraud detection model in the finance department, or a recommendation engine used by a single business unit. These pilot projects are valuable experiments, but they often operate in silos, with limited infrastructure, and lack the proper controls needed for a production environment.

However, scaling AI from these initial experiments to an enterprise-wide solution is a completely different challenge. Here’s how the transition from AI pilot projects to enterprise AI unfolds:

- Infrastructure at Scale:

- Instead of just a single GPU for testing, enterprise AI requires large distributed training clusters, real-time inference endpoints, and data pipelines that transfer sensitive information between multiple systems. These systems become as critical as your ERP, CRM, or payments systems and need to run reliably at scale.

- Instead of just a single GPU for testing, enterprise AI requires large distributed training clusters, real-time inference endpoints, and data pipelines that transfer sensitive information between multiple systems. These systems become as critical as your ERP, CRM, or payments systems and need to run reliably at scale.

- Operational Discipline:

- In pilot projects, a small failure might be overlooked, but in an enterprise, any downtime or errors can be costly. For AI models handling customer onboarding or medical diagnostics, they must meet the same service-level agreements (SLAs) that other core business systems adhere to. This requires 24/7 monitoring, backups, and a well-defined disaster recovery plan.

- In pilot projects, a small failure might be overlooked, but in an enterprise, any downtime or errors can be costly. For AI models handling customer onboarding or medical diagnostics, they must meet the same service-level agreements (SLAs) that other core business systems adhere to. This requires 24/7 monitoring, backups, and a well-defined disaster recovery plan.

- Governance and Security:

- In smaller-scale projects, security and governance are often afterthoughts, but when AI becomes enterprise-wide, they need to be baked into the system from the beginning. Ensuring that IAM roles, encryption, data residency, and access controls are carefully planned from day one is crucial for maintaining compliance and preventing security risks.

- In smaller-scale projects, security and governance are often afterthoughts, but when AI becomes enterprise-wide, they need to be baked into the system from the beginning. Ensuring that IAM roles, encryption, data residency, and access controls are carefully planned from day one is crucial for maintaining compliance and preventing security risks.

- Lifecycle Management:

- AI models are dynamic and need constant updates, retraining, and sometimes rolling back when performance drifts. Each change must be versioned, peer-reviewed, and audited to ensure it’s traceable and meets regulatory standards, not buried in a data scientist’s local environment.

- AI models are dynamic and need constant updates, retraining, and sometimes rolling back when performance drifts. Each change must be versioned, peer-reviewed, and audited to ensure it’s traceable and meets regulatory standards, not buried in a data scientist’s local environment.

Moving from small AI experiments to enterprise AI involves much more than just scaling technology. It requires building operational rigor, ensuring security and compliance, and developing a structured process to manage the continuous evolution of AI models at scale.

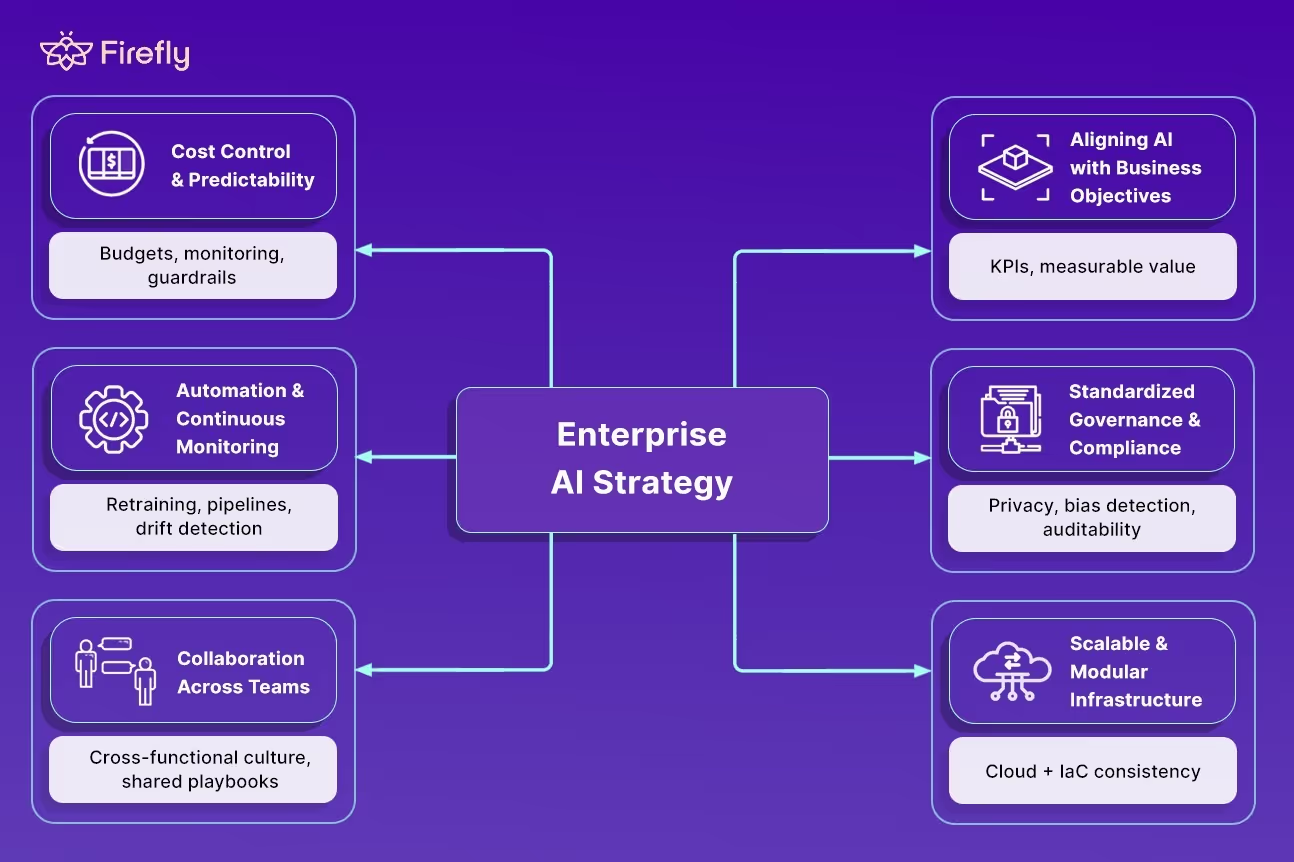

Key Elements of an Effective Enterprise AI Strategy

To successfully scale AI across an enterprise, it's essential to establish a clear strategy that aligns with business goals while addressing the technical, operational, and governance challenges.

Here are the critical elements defined in detail that make up an effective enterprise AI strategy:

- Aligning AI with Business Objectives

- At the heart of any AI strategy is its alignment with broader business goals. For AI to add value, it must support the organization’s core objectives. Whether it's increasing operational efficiency, improving customer experiences, or driving innovation, AI should be tailored to solve real, tangible problems. This means AI initiatives must be prioritized based on business value, with clear KPIs and measurable outcomes defined from the start.

- At the heart of any AI strategy is its alignment with broader business goals. For AI to add value, it must support the organization’s core objectives. Whether it's increasing operational efficiency, improving customer experiences, or driving innovation, AI should be tailored to solve real, tangible problems. This means AI initiatives must be prioritized based on business value, with clear KPIs and measurable outcomes defined from the start.

- Standardized Governance and Compliance Framework

- As AI systems become increasingly complex, managing them without a consistent governance framework becomes unmanageable. Enterprises must establish standardized policies to ensure data privacy, security, and ethical considerations are adhered to. Governance should not be an afterthought but integrated into every stage of the AI lifecycle, from data collection and model training to deployment and monitoring.

- This includes having auditable workflows and ensuring AI models are explainable, with appropriate bias detection and mitigation strategies in place.

- As AI systems become increasingly complex, managing them without a consistent governance framework becomes unmanageable. Enterprises must establish standardized policies to ensure data privacy, security, and ethical considerations are adhered to. Governance should not be an afterthought but integrated into every stage of the AI lifecycle, from data collection and model training to deployment and monitoring.

- Scalable and Modular Infrastructure

- At enterprise scale, AI requires a flexible and modular infrastructure that can adapt to evolving needs. This includes leveraging cloud services (AWS, Azure, GCP) to quickly scale up compute power or storage as needed, while ensuring the environment remains consistent and manageable.

- Using Infrastructure as Code (IaC) tools like Terraform, teams can ensure that infrastructure remains standardized and repeatable, reducing the risk of errors and ensuring that resources are provisioned in a compliant and cost-effective manner.

- At enterprise scale, AI requires a flexible and modular infrastructure that can adapt to evolving needs. This includes leveraging cloud services (AWS, Azure, GCP) to quickly scale up compute power or storage as needed, while ensuring the environment remains consistent and manageable.

- Cost Control and Predictability

- One of the most common challenges when scaling AI is managing costs. AI workloads, especially when running on cloud platforms, can quickly escalate, resulting in unexpected expenses. To prevent this, it’s crucial to implement cost controls that ensure predictable cloud expenses.

- Enterprises need to enforce spending limits at different levels (e.g., project or workspace) and monitor resource utilization to avoid unnecessary waste. Tools that integrate cost-awareness into the deployment process are key to maintaining financial control.

- One of the most common challenges when scaling AI is managing costs. AI workloads, especially when running on cloud platforms, can quickly escalate, resulting in unexpected expenses. To prevent this, it’s crucial to implement cost controls that ensure predictable cloud expenses.

- Automation and Continuous Monitoring

- As AI systems operate at scale, manual intervention becomes impractical. Automation is essential to ensure that changes are made consistently, reliably, and quickly. This includes automating model retraining, data pipeline updates, and infrastructure management.

- Furthermore, continuous monitoring of AI systems is required to detect issues such as performance degradation or data drift. Automated tools can help teams stay on top of issues in real-time, enabling them to take immediate corrective action before they affect production.

- As AI systems operate at scale, manual intervention becomes impractical. Automation is essential to ensure that changes are made consistently, reliably, and quickly. This includes automating model retraining, data pipeline updates, and infrastructure management.

- Collaboration Across Teams

- For AI to be effectively scaled, it must be a cross-functional effort. Data scientists, engineers, product managers, and business leaders must work together to ensure AI initiatives meet both technical requirements and business objectives.

- A collaborative culture ensures that teams can share knowledge, build on each other’s work, and tackle challenges more efficiently. Additionally, creating shared playbooks and best practices allows teams to avoid reinventing the wheel and fosters consistency across the enterprise.

- For AI to be effectively scaled, it must be a cross-functional effort. Data scientists, engineers, product managers, and business leaders must work together to ensure AI initiatives meet both technical requirements and business objectives.

Building an effective AI strategy for the enterprise is not a one-time task; it’s an ongoing journey. From aligning AI with business objectives to creating standardized governance and ensuring cost predictability, each element plays a crucial role in driving success. The foundation of a scalable infrastructure, combined with automation and continuous monitoring, ensures that AI remains a long-term, sustainable asset to the organization.

With these principles in place, enterprises can create a solid framework for managing AI resources effectively. However, to truly realize the potential of AI across an organization, it’s important to establish clear core principles for AI operations. These principles will guide teams in standardizing infrastructure, enforcing software-led governance, ensuring security, and maintaining scalability.

In the next section, the core principles behind a robust enterprise AI strategy in the cloud will be outlined. These principles focus on automating infrastructure management through Infrastructure as Code (IaC), establishing policy-driven governance for compliance, and implementing scalable, modular architectures for cost-effective and high-performance AI workloads.

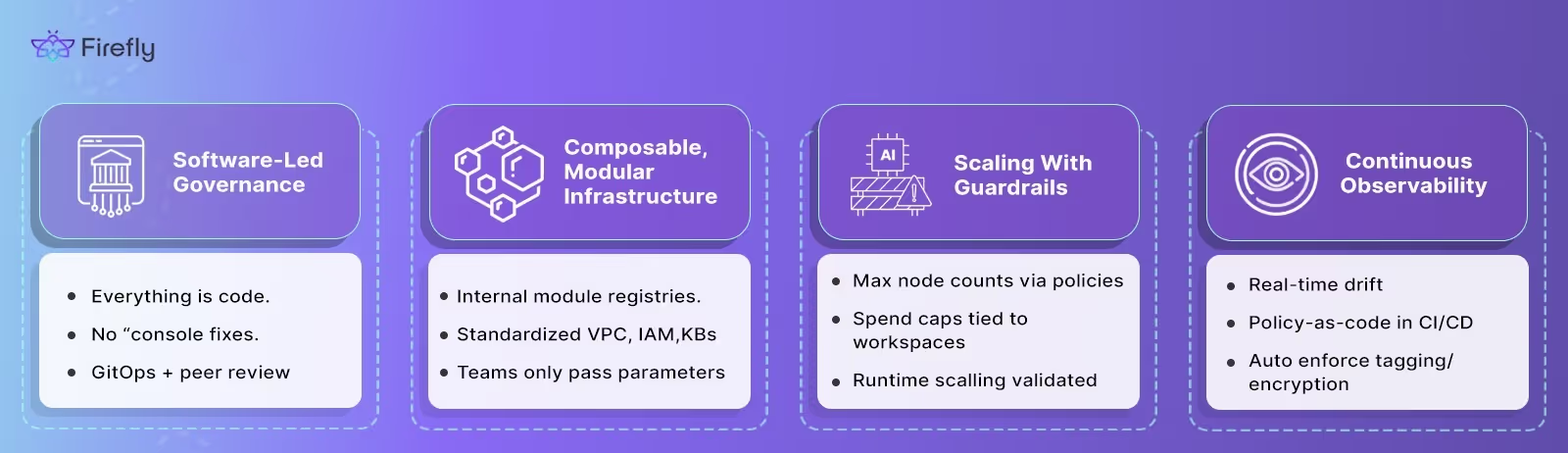

Core Principles of an Enterprise AI Strategy in the Cloud

When you run AI in a mid-to-large enterprise (~5,000 people), you quickly hit a wall: too many teams, too much Terraform, and no single source of truth. Dev teams are writing their own Terraform modules, data teams are doing the same for pipelines, and platform teams are trying to keep the lights on in between. Without clear principles, you end up with drift, duplicated code, and auditors asking questions nobody can answer.

Here’s how organizations deal with this problem.

1. Software-Led Governance (IaC as the Ground Truth)

The first rule is simple: everything is code. Compute instances, storage, IAM roles, network policies: if it isn’t in Git, it doesn’t exist.

At enterprise scale, this means:

- No “console fixes.” If an engineer makes a manual change, it’s flagged and rolled back.

- Terraform state is managed centrally, usually with Terraform Cloud/Enterprise or a similar remote backend, so multiple teams don’t stomp on each other’s state files.

- All changes flow through pull requests with peer review.

Example: A data engineering team defines its BigQuery datasets in Terraform. A separate dev team wants to add a new API gateway. Both go through the same GitOps flow, and both rely on a shared state backend with RBAC so they don’t clobber each other’s resources.

2. Composable, Modular Infrastructure

When every team builds Terraform from scratch, you end up with ten different VPC definitions and no two IAM policies alike. Enterprises avoid this by standardizing on internal module registries.

The platform team publishes hardened modules, for example:

- company/network/vpc - enforces CIDR ranges, logging, and private subnets.

- company/security/iam-role - ensures MFA, least privilege, and tagging.

- company/k8s/cluster - wraps a Kubernetes cluster with encryption and cost tags baked in.

Teams then consume these modules instead of writing their own. They only pass parameters (size, region, name), which keeps consistency high and governance centralized.

Example: The data science group needs a GPU-enabled Kubernetes cluster. They don’t write it from scratch. They call the company/k8s/cluster module, which already has encryption, monitoring, and cost tagging pre-configured.

3. Scaling With Guardrails

Elastic scaling is essential for AI workloads, but uncontrolled scaling can bankrupt a budget or break compliance. Enterprises enforce policies around scaling, not just autoscaling rules.

- Maximum node counts are defined in Terraform and checked by policy engines like OPA or Sentinel.

- Cost guardrails are tied to workspaces, so a dev team can’t spin up a 200-node cluster “just to test something.”

- Runtime scaling actions are logged and validated against policies before they’re applied.

Example: The dev team’s autoscaler tries to add 50 new nodes during a load test. Policy blocks it at 20, because finance capped that environment’s spend at $10k/month. The autoscaler still works, but inside the fence line.

4. Continuous Observability and Drift Detection

Enterprises don’t rely on quarterly audits. They run continuous checks against live infrastructure.

- Tools like AWS Config, Azure Policy, or Cloud Custodian detect drift and non-compliant resources in real time.

- Policy-as-code runs in CI pipelines before Terraform is applied, catching violations early.

- Every workspace and module enforces tagging, region restrictions, and encryption standards automatically.

Example: A developer tries to deploy an S3 bucket without encryption. The CI job fails because the OPA policy requires server_side_encryption_configuration. The change never even makes it to production.

Challenges in Scaling Enterprise AI Strategy Across Clouds

Even with the right principles defined, execution inside a large enterprise is rarely clean. At scale, different teams adopt their own workflows, clouds evolve independently, and policies are hard to enforce uniformly. The main challenges usually look like this:

1. Multi-Cloud Complexity

Running across AWS, Azure, and GCP introduces fragmentation. Each cloud has its own identity model, networking defaults, and billing system. A security rule that works in one cloud may need to be expressed differently in another. Without a consistent strategy, organizations spend more time reconciling differences than building AI capabilities.

2. Lack of Central Visibility

When infrastructure is spread across providers and regions, it’s hard to answer simple questions: What resources exist? Who owns them? Are they compliant? Without a single inventory, duplicated deployments, shadow environments, and wasted spend are inevitable.

3. Inconsistent Delivery Practices

One team uses hardened Terraform modules, another writes raw templates, and a third manages resources directly in the console. Over time, the differences in how infrastructure is provisioned make it difficult to enforce security baselines or roll out upgrades consistently.

4. Security and Compliance Gaps

Policies on paper don’t guarantee enforcement in practice. Engineers under pressure often relax IAM roles, skip tagging, or open network rules “just to make it work.” Across hundreds of services, these one-off changes add up to drift, privilege creep, and audit findings.

5. Cost Volatility

Cloud bills become unpredictable when spend is spread across multiple providers with no unified guardrails. A single team leaving compute resources idle can trigger six-figure overruns. Finance teams need predictability, but without central policy and monitoring, costs remain reactive.

How Firefly Enables an Enterprise AI Cloud Strategy

Managing cloud infrastructure at scale quickly becomes painful when teams rely on console clicks, custom scripts, or inconsistent pipelines. It leads to drift, compliance gaps, and unpredictable costs. Firefly enforces a consistent, auditable workflow for every change.

Here’s how that works in practice.

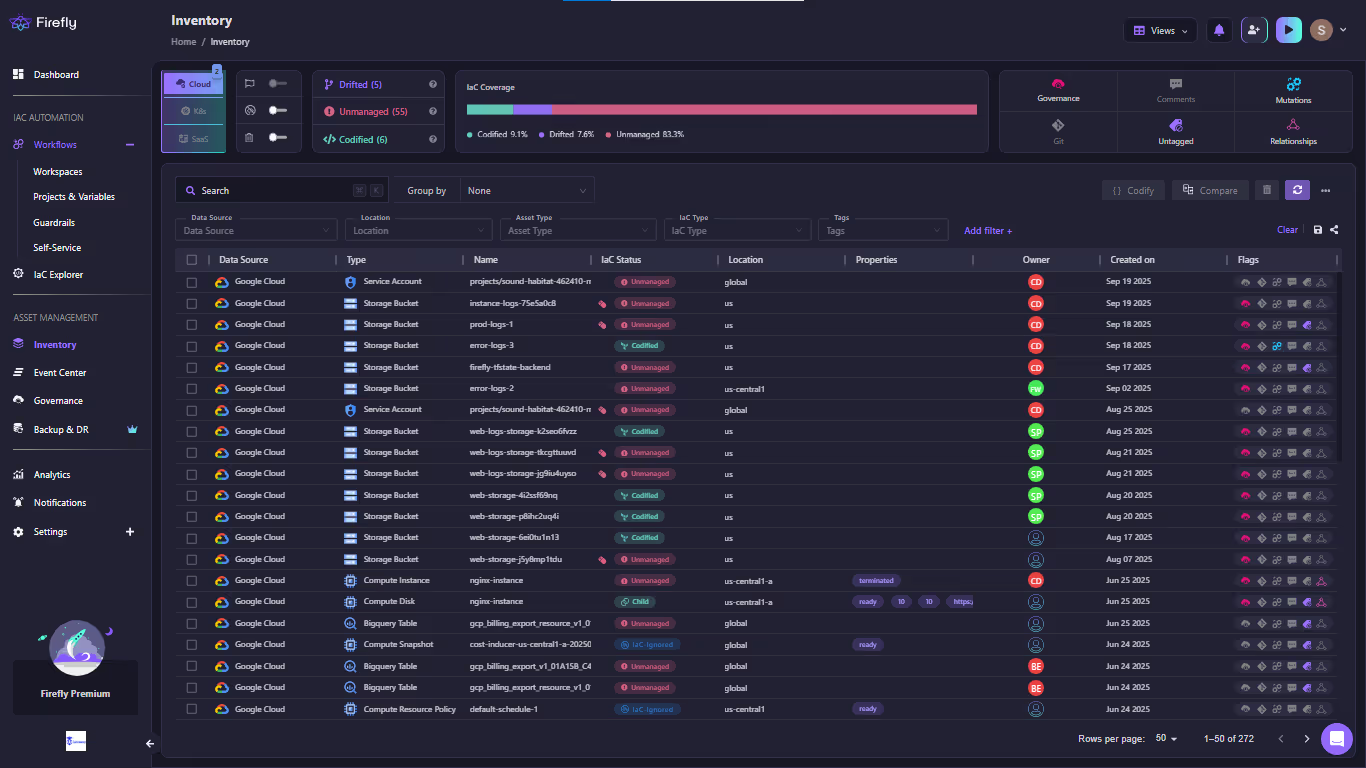

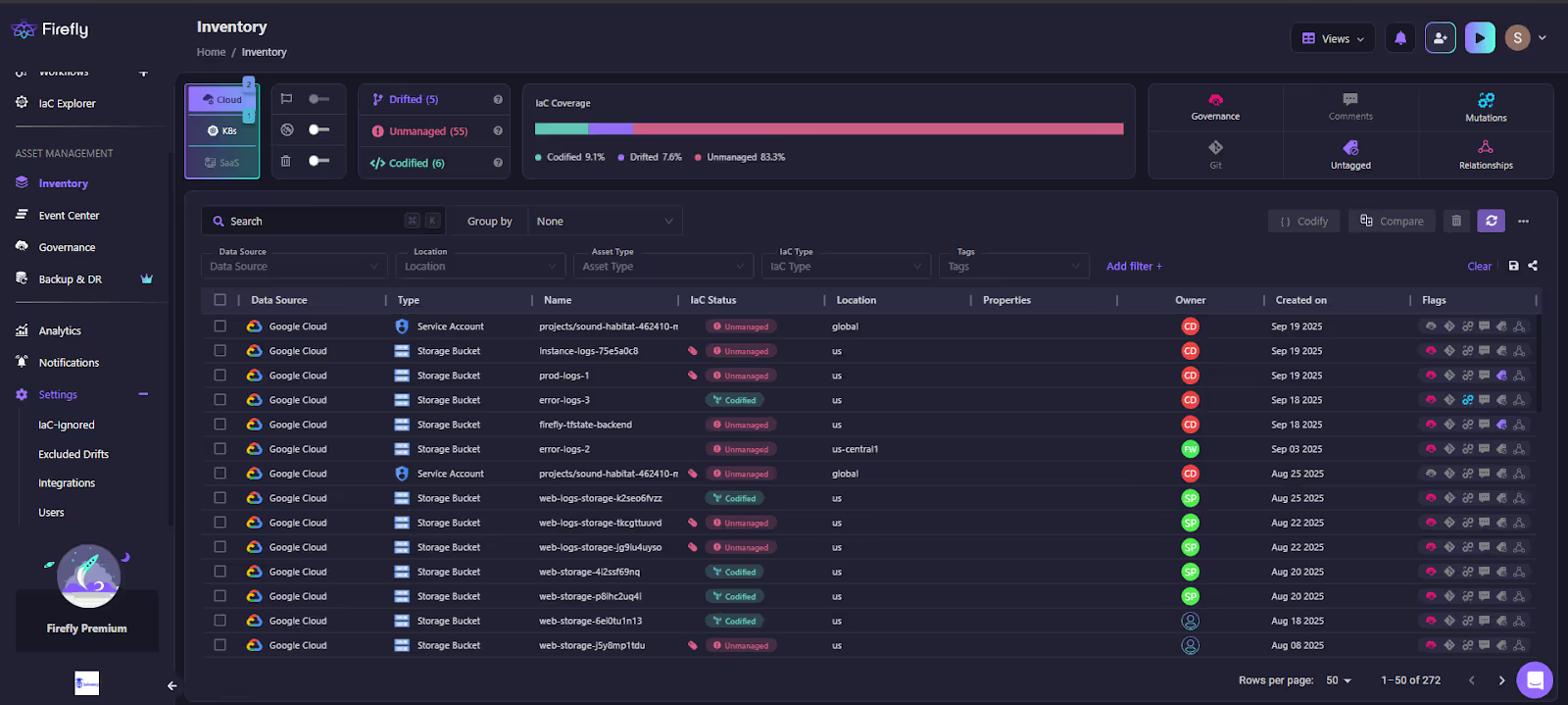

1) Discover and codify what already exists

Firefly continuously inventories AWS, Azure, GCP, and Kubernetes. It surfaces a complete view of all resources, highlighting which are:

- Codified (already tracked by IaC)

- Drifted (live state has diverged from IaC)

- Unmanaged (not tracked by any code at all)

Here’s Firefly’s Inventory view, which shows codified, unmanaged, and drifted resources side by side:

This allows engineers to immediately see what’s outside version control. From there, Firefly can auto-generate Terraform, Pulumi, or OpenTofu definitions, plus import commands, so unmanaged resources are codified into Git. This way we get no more “shadow infra.” Everything lives in the same lifecycle, and drift is visible and correctable.

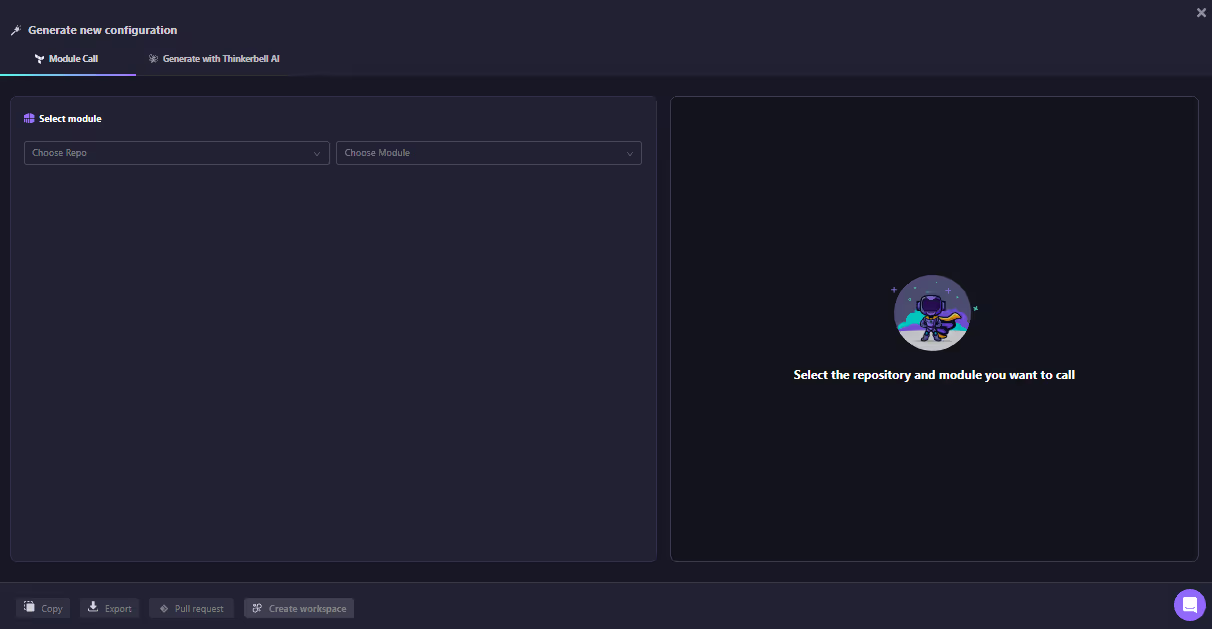

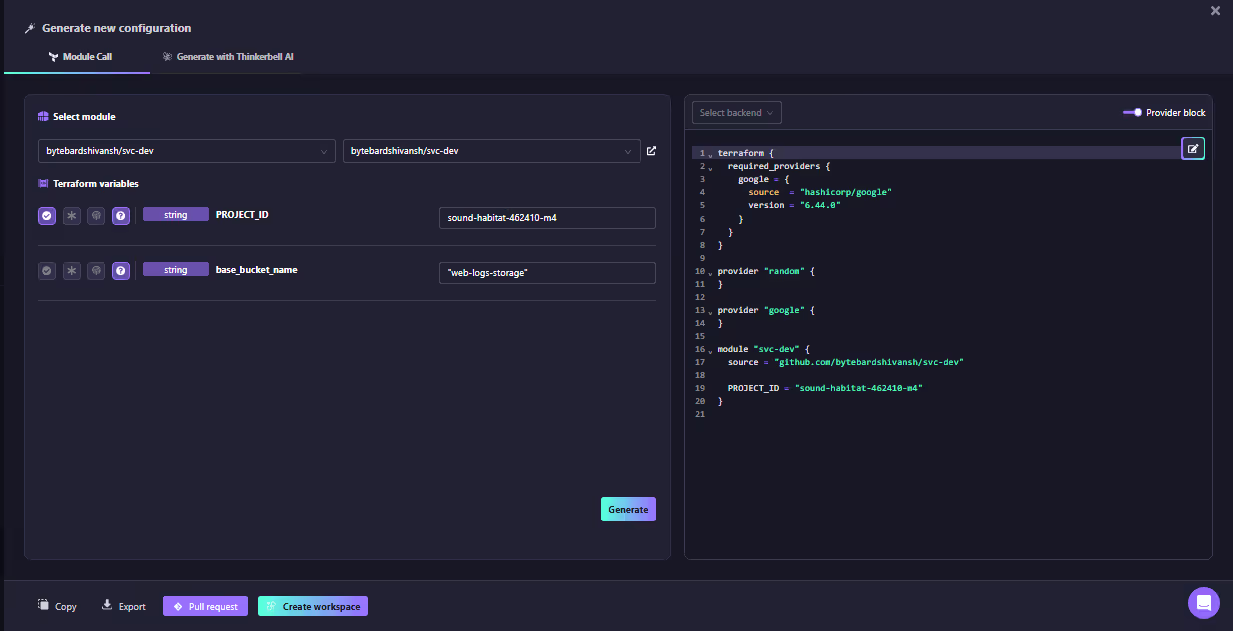

2) Create new infrastructure consistently

When new stacks are needed, Firefly enforces a consistent creation path through Self-Service:

- Module Call: Developers select approved modules from internal or public repos. Firefly guides them through required, validated, and sensitive variables, then emits a ready-to-use module call.

- Thinkerbell AI: Developers describe the stack in plain English, and Firefly generates clean IaC for Terraform, OpenTofu, Pulumi, or CloudFormation.

Here’s Firefly’s Module Call interface for selecting repos/modules and generating ready-to-use IaC:

Both approaches end in a pull request (or workspace), ensuring every change is reviewed and traceable, helping engineers move quickly without ad-hoc scripts, while platform teams keep standards centralized in modules.

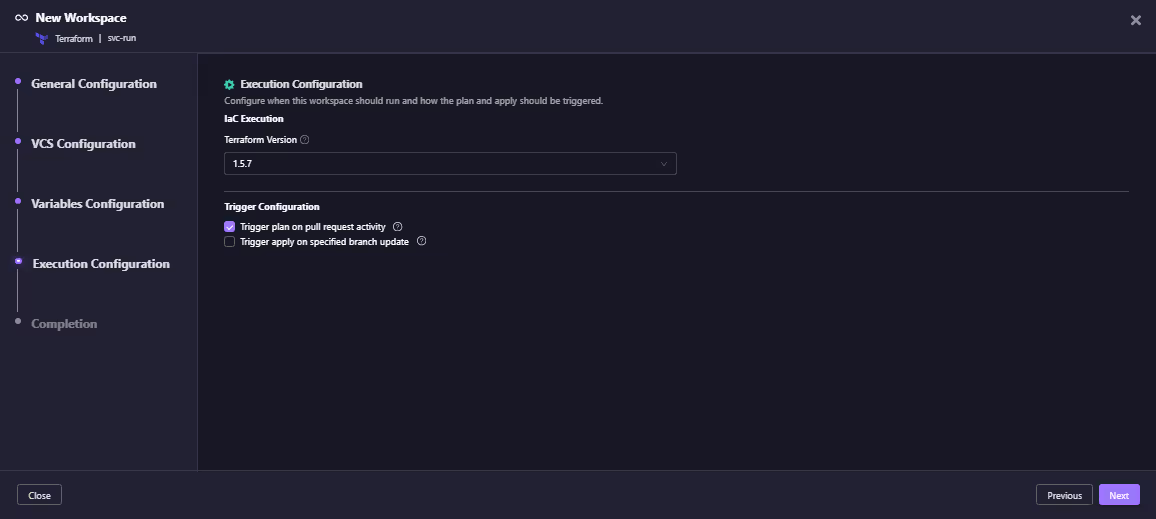

3) Standardize plan/apply with Workflows

Every stack follows the same lifecycle:

- Plan runs on pull request.

- Apply runs on merge/approval.

- Tool versions are pinned, runs are logged, and RBAC governs execution.

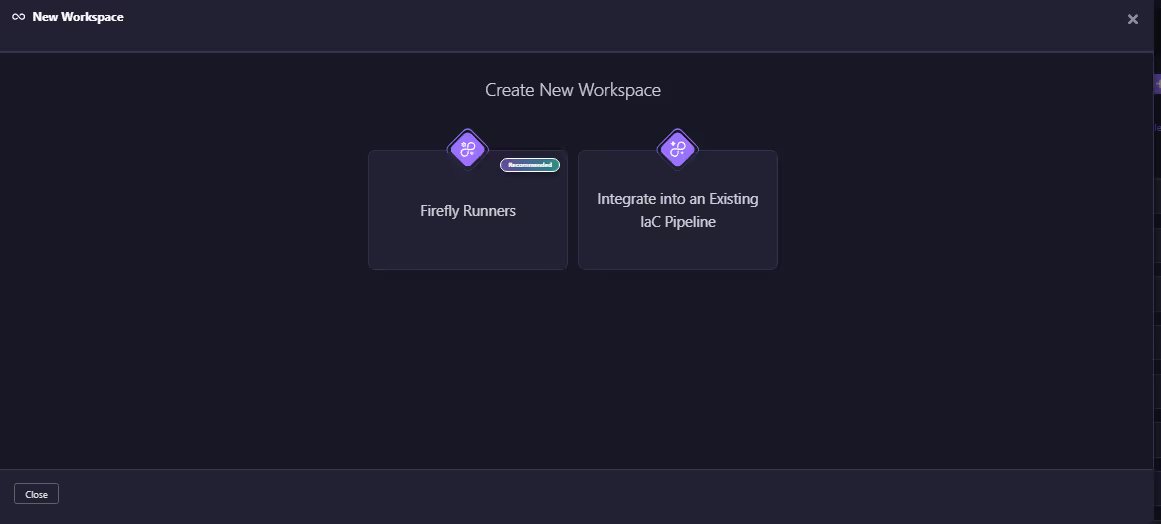

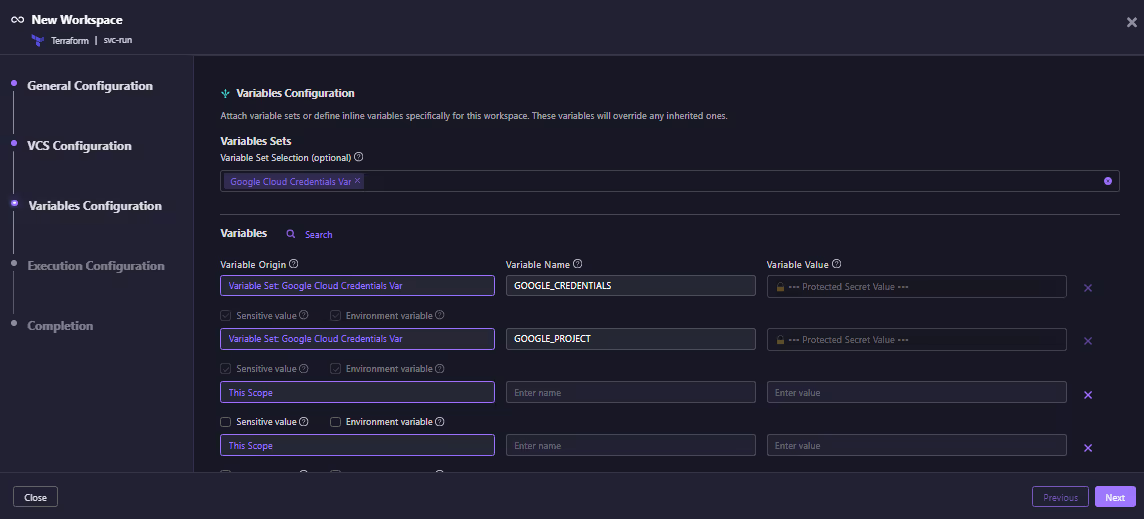

Here are Workspace creation options via Firefly Runners and integrating into existing pipelines:

This way, teams can either use Firefly’s own managed runners or plug into their existing CI/CD pipelines with a lightweight fireflyci step. The runners remove the burden of managing execution environments, tool versions, and scaling, while the CI/CD integration means you don’t have to replace what you already have, just add one step to bring your pipelines under Firefly’s governance.

In both cases, every plan runs on pull request and every apply runs on merge or approval, with policies and RBAC enforced consistently. This ensures that regardless of which team delivers infrastructure or which pipeline they prefer, the workflow stays lightweight, auditable, and uniform across the organization.

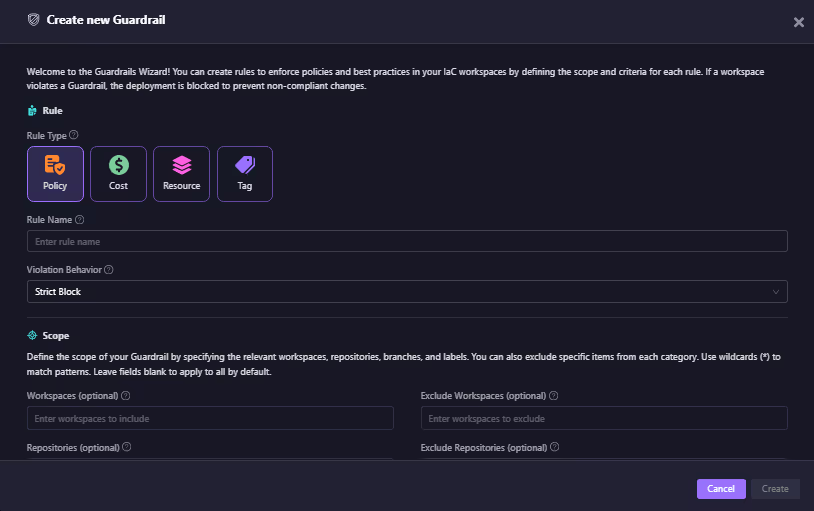

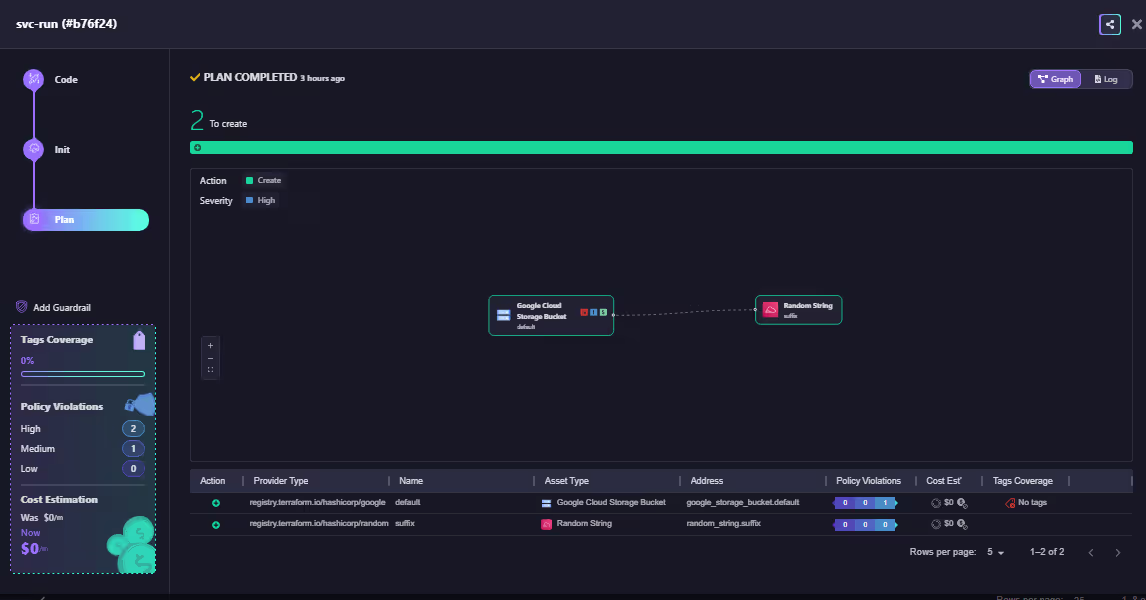

4) Enforce guardrails during deployment

Guardrails evaluate every plan before changes land. Rules can be defined for:

- Policy (OPA): e.g., encryption required, region allow-lists.

- Cost: prevent >$X or >Y% monthly differences.

- Resource: block deletes of protected resources.

- Tags: enforce ownership, cost center, environment.

As shown in the snapshot below, the Guardrails wizard shows rule types (Policy, Cost, Resource, Tag) and scoping options:

Violations show up in run logs, PR comments, and Slack/email. You can configure rules as strict blocks or allow overrides with justification. This way, compliance and budget checks happen during development, not after deployment.

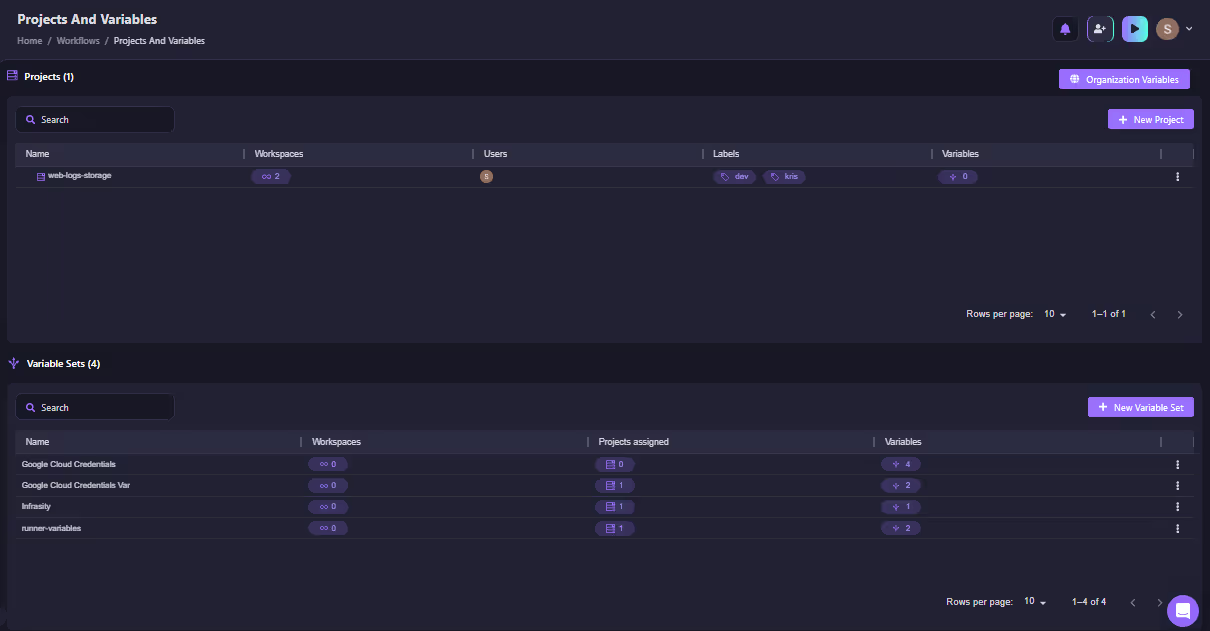

5) Organize at scale: Projects, Workspaces, Variable Sets

At enterprise scale, managing configuration and ownership across hundreds of teams is messy. Firefly provides a clear model:

- Projects: mirror org boundaries; carry RBAC, defaults, and drift schedules.

- Workspaces: the unit of execution, tied to repo/branch.

- Variable Sets: centralize config and secrets with inheritance (Org -> Project -> Sub-project -> Workspace).

Start by setting Project and Variable Sets showing scoped credentials and inheritance as shown in the snapshot below:

Variable definition where sensitive/environment variables can be scoped at the org, project, or workspace level. With this kind of structuring, there are no duplicated .env files, no secret sprawl, and ownership is explicit at every level.

6) Keep costs predictable by design

Firefly integrates cost awareness directly into the workflow:

- Cost views: break down spend by project, workspace, or tag.

- Cost guardrails: catch spikes before applying.

- Region/size constraints: prevent premium services from sneaking in.

Why it matters: Finance gets predictable spend, and engineering keeps delivery velocity.

Firefly doesn’t replace existing IaC tools; it orchestrates them. By enforcing one consistent lifecycle across discovery, codification, enforcement, and monitoring, it removes the “hidden costs” of drift, compliance gaps, and snowflake infra. The result is infrastructure that scales as cleanly as the applications it supports.

From Strategy to Implementation: A Hands-On Example with Firefly

An enterprise AI strategy isn’t just a slide deck of principles; it has to work in day-to-day operations where multiple teams manage cloud infrastructure at scale. Firefly takes the pillars of the strategy, software-led, composable, governed, and integrated, and makes them enforceable in practice.

The walkthrough below shows how Firefly operationalizes these ideas using Terraform and policy-as-code, turning strategy into repeatable workflows across teams:

Step 1: Generate IaC from a module (Self-Service → Module Call)

Firefly guides engineers through variable inputs (required/validation/sensitive/type) and produces a Terraform block that’s immediately ready to be version-controlled.

Step 2: Create a Workspace and attach Variable Sets

Variable Sets provide organization-approved credentials and project values, while workspace-scoped values override where needed. Sensitive inputs are masked and centrally managed.

Step 3: Execution rules: plan on PR, apply on merge

Workspaces pin the IaC engine version and define triggers. Common setup: plan on pull request, manual apply for production branches, auto-apply for development.

Step 4: Guardrails at plan time

Every plan is evaluated against Guardrails: encryption, tagging, cost ceilings, region restrictions, etc. As shown in the snapshot of the firefly runner:

Violations are highlighted directly in the run view and PR comments. Developers fix and rerun before merging.

Step 5: Apply and enforce drift detection

Once merged, Firefly applies the change and continuously checks for drift to ensure that the deployed infrastructure always matches what’s in version control.

But knowing the framework isn’t enough; a clear path is needed to move from scattered, unmanaged infrastructure to a governed, scalable AI platform. That’s where Firefly’s implementation roadmap comes in. It breaks down the lifecycle into practical, hands-on steps that teams can follow to build enterprise AI infrastructure with consistency, compliance, and cost predictability.

Roadmap to Implement AI Strategy with Firefly

1. Import and Map Resources for Unified Visibility

The first step is to gain complete visibility into your existing infrastructure. Firefly automatically discovers and imports all resources across AWS, Azure, GCP, and Kubernetes, mapping them into a centralized control plane. Here is the inventory where we get all the resources mapped based on the IaC coverage in the multi-cloud inventory we get in Firefly:

This gives your teams a single view of all cloud resources, making it easier to track usage, monitor security, and identify inefficiencies.

2. Codify Infrastructure Using IaC

Next, codify your infrastructure using Infrastructure as Code (IaC). Firefly automatically generates Terraform or Pulumi templates for all the resources it discovers. This allows you to version control, track changes, and keep your infrastructure aligned with your team’s workflows, eliminating manual configurations and reducing the risk of drift. Though we can see the unmanaged resources here in the inventory itself, since we deploy our IAC from our IDE, it becomes more feasible to get this kind of insight right inside the IDE. This firefly provides an MCP server, which connects with the live inventory, getting updated continuously

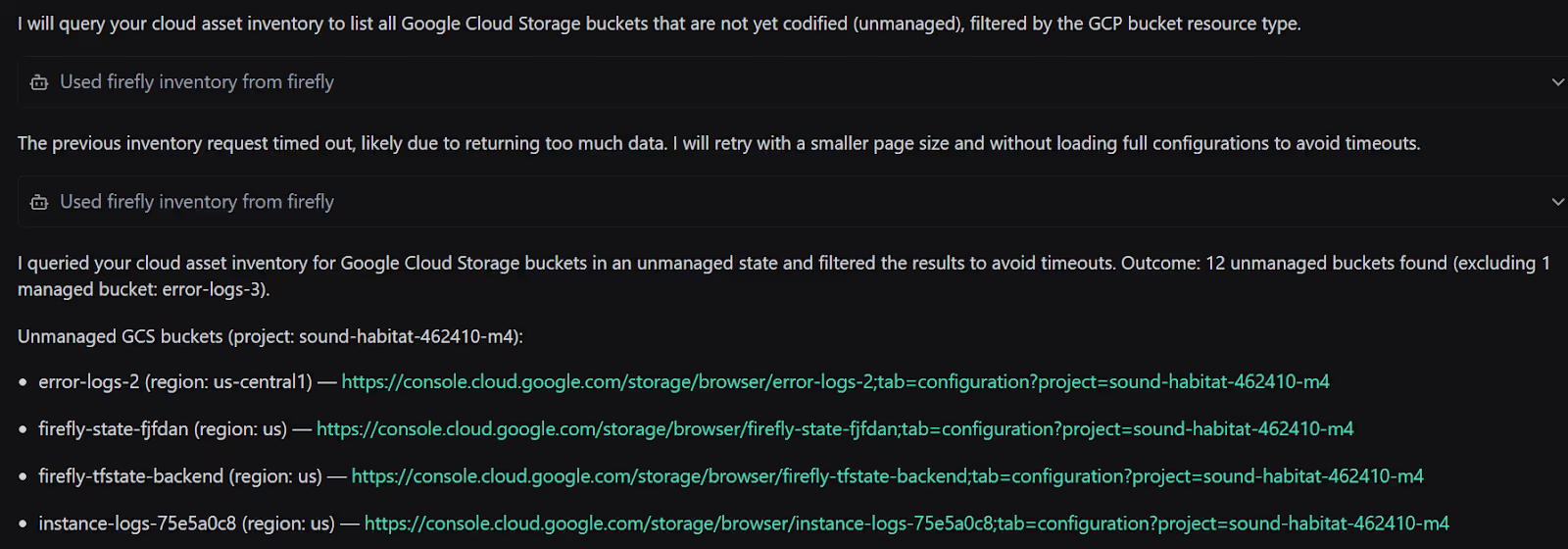

Example: Codifying Google Cloud Storage Buckets

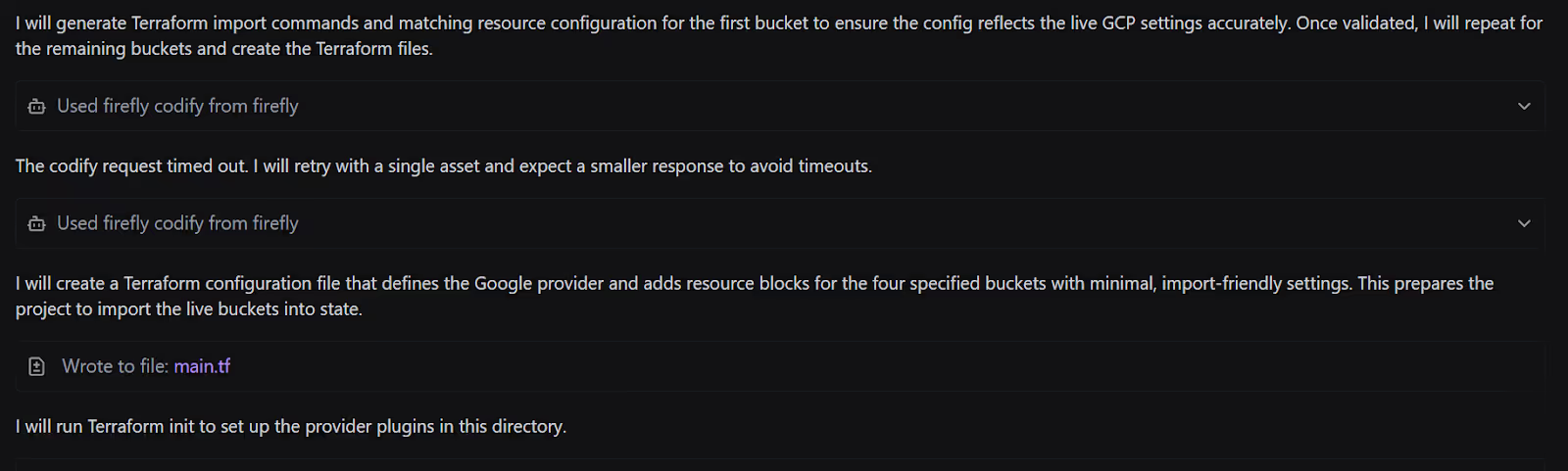

To manage unmanaged Google Cloud Storage (GCS) buckets right from your IDE, Firefly can identify and codify them into Terraform. Here's the process:

- Identifying Unmanaged Buckets: Firefly queries the cloud inventory to identify GCS buckets that have not been codified yet (unmanaged). The inventory dashboard shows all the resources, including unmanaged storage buckets.

- Generating Terraform Configurations: Firefly generates Terraform import commands and resource blocks for these GCS buckets, ensuring the configuration accurately reflects the live settings. It creates the initial resource definitions to prepare for importing existing data into Terraform's state.

3. Reinitializing Terraform: Once the backend configuration is set, Terraform is reinitialized with the -migrate-state flag, ensuring the state files are migrated to the newly configured remote backend.

Implementing an AI strategy with Firefly goes beyond codifying infrastructure. It ensures that governance, automation, team enablement, and continuous optimization are built into a single workflow. By following this roadmap, enterprises can scale AI workloads securely and predictably across multiple clouds, while keeping compliance and costs under control.

FAQs

What is the difference between AI and Enterprise AI?

AI refers to the use of algorithms and models to perform intelligent tasks like prediction or automation. Enterprise AI applies these capabilities at an organizational scale, with a focus on governance, security, compliance, and integration with existing business systems.

What is the cloud adoption framework for AI?

A cloud adoption framework for AI provides structured guidance on how to build, govern, and scale AI workloads in the cloud. It covers resource provisioning, security policies, cost management, and operational practices to ensure AI projects move from pilots to production successfully.

What is enterprise AI adoption?

Enterprise AI adoption is the process of embedding AI into business operations across the organization. It involves aligning infrastructure, data, governance, and teams so AI solutions are not just experimental but deliver consistent value at scale.

What is the open platform for Enterprise AI?

An open platform for Enterprise AI is an ecosystem that integrates multiple tools, frameworks, and clouds without lock-in. It allows enterprises to build, deploy, and manage AI workloads flexibly while maintaining control over governance, costs, and compliance.