TL;DR

- Mergers and acquisitions (M&A) often trigger major cloud and infrastructure challenges, overlapping networks, IAM sprawl, hidden assets, and inconsistent standards that slow integration and increase costs.

- Transition Service Agreements (TSAs) provide temporary stability but quickly become expensive; early discovery, codification, and automation are essential to shorten TSA reliance.

- Typical failure points include network overlaps, unmanaged assets, hybrid drift, and fragmented IAM across AWS, Azure, and GCP, creating compliance, cost, and performance risks.

- Best practices involve starting discovery early, building a unified cloud foundation, standardizing IAM, automating migrations with IaC, embedding security from Day 1, and managing TSA exits with defined milestones.

- Firefly enables seamless M&A integration by automatically discovering all inherited resources, converting unmanaged infrastructure into code, detecting and remediating drift, enforcing governance policies, and optimizing costs, giving organizations full visibility and control across merged cloud environments.

Mergers and Acquisitions, or merger and acquisition, looks great on paper: new markets, shared tech, bigger footprint. But the hardest part isn’t closing the deal; it’s untangling the IT and cloud mess that comes after.

You inherit overlapping networks, mismatched IAM setups, untagged resources, and workloads nobody fully owns. That’s what most engineers call cloud noise; every team had its own standards and automation before the merger, and now they all collide. TSAs keep systems alive for a while, but they also burn budget and slow down integration.

This post from Jeffrey Silverman captures it well:

“A friend has been assigned to handle tech integration for a current merger and acquisition… There must be a need for a Mergers and Acquisitions Cloud Migration Tool. Target company Cloud-first, migration service would have access to AWS, Azure, and GCP.”

That’s a familiar scenario, engineers scrambling to merge multi-cloud environments without a unified baseline or clear visibility. Tools like Firefly help by automatically discovering every resource across AWS, Azure, GCP, and Kubernetes, codifying them into manageable infrastructure-as-code, and surfacing hidden assets early.

In Mergers and Acquisitions, getting a full and accurate inventory of what you actually own is always the first real milestone. This can be challenging because different teams are used to having different ways of working and managing things. Whether it's different cloud platforms (AWS, Azure, GCP) or distinct internal processes, each team may have its own conventions, tools, and approaches for managing resources. These discrepancies can make it difficult to get a unified, comprehensive view of the entire cloud estate.

Where Cloud and Infra Break During Mergers and Acquisitions

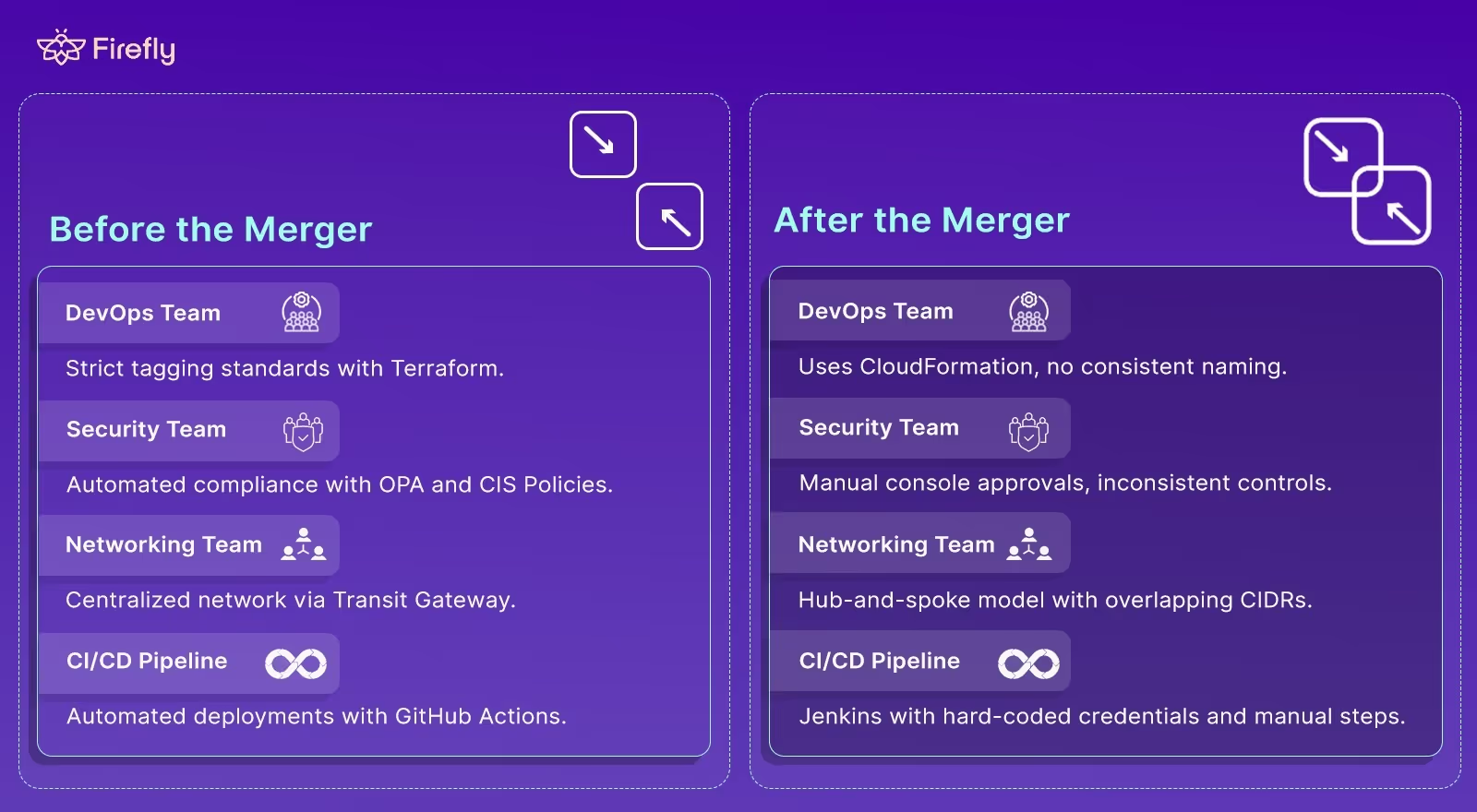

The first week after an acquisition, integration typically turns into a cloud infrastructure nightmare. You’re suddenly tasked with merging two environments that have drifted independently for years. The challenges come fast, and they often come in predictable forms: network overlaps, IAM sprawl, hidden assets, and multi-cloud fragmentation. These problems don’t just slow down your M&A integration; they create serious compliance, cost, and performance risks. Before diving into specific examples, here’s an image comparing how cloud operations typically look before and after a merger:

It highlights the shift from structured, automated workflows to inconsistent, manual, and fragmented processes that often emerge once two organizations combine their environments.

Take this example:

In a recent merger, an acquired company had 12 AWS accounts that no one knew about. They found out the hard way when billing alerts started coming in. These accounts had been silently running with no oversight, and no one had tagged or tracked the resources. What followed were weeks of investigation to determine what was in those accounts and how they were impacting costs.

So, what went wrong?

1. Network Bottlenecks: Overlapping CIDRs and Routing Chaos

The first week after a merger, routing usually becomes everyone’s nightmare.

- Two VPCs share overlapping CIDRs; the old VPN won’t connect, and no one remembers who owns the network file.

- Teams spend days re-aligning routing tables, dealing with asymmetric traffic across VPNs, or worse, hair-pinned traffic running through outdated data centers.

Real-world case: After a merger, the teams realized the complex SD-WAN setup was causing traffic to be misdirected between the networks, adding latency and burning WAN bandwidth. Platforms like Alkira’s Cloud helped here by normalizing routing across both estates and collapsing site integration from months into days. With Alkira, 90 sites were up and running in record time without needing to redesign peering.

Firefly’s Role: When you plug Firefly into the merged environments, the platform instantly maps out the network and shows you where the overlaps are. Instead of running around trying to figure out who owns the CIDR block, Firefly makes that network map visible, providing a single source of truth for network inventory. No more routing detective work.

2. Hidden Assets: Ghosts in Your Cloud

The moment you start merging environments, hidden assets pop up like ghosts.

- Old S3 buckets are left running with sensitive data.

- Test VMs are still alive with public IPs.

- Unmanaged Kubernetes clusters or forgotten DNS zones.

These hidden resources surface right after Day 1, and they often expose major security risks. Teams only realize how many hidden assets they have after going live, when an external scan, like Palo Alto’s Cortex Xpanse, reveals assets that were never listed in the CMDB. That’s when compliance violations or security breaches show up.

Real-world case: A previous acquisition left a large company unaware of 10+ shadow Kubernetes clusters running in various regions, completely untagged and without any oversight. Once these were discovered, the company realized it had exposed sensitive data on the public cloud without realizing it.

Firefly’s Role: Firefly’s discovery tools map out every resource, even unmanaged ones, across the entire cloud environment. It pulls in resources like Kubernetes clusters, S3 buckets, and VMs from every region and flags those that lack tags, encryption, or are publicly exposed. It automatically identifies shadow assets and gives you visibility into all the assets you inherited, even the ones no one knew about.

3. Data Center Sprawl: Legacy Costs That Don’t Go Away

Data center sprawl is another common issue. When you acquire a company, you’re not just inheriting a cloud environment; you’re also inheriting physical data centers with:

- Overlapping DR contracts

- Mid-cycle hardware refreshes

- Redundant WAN setups

Consolidating isn’t just about shutting down a few racks. It’s about mapping out dependencies and sequencing the shutdowns properly.

Real-world case: AWS + Pomeroy went through a major data center consolidation and managed to reduce operating costs by 30% by unifying network and compute layers. But to do this, they had to create a detailed dependency map and plan the phased data center exit. Without that plan, the savings would’ve remained theoretical.

Firefly’s Role: Firefly maps dependencies across the entire cloud and on-prem infrastructure, making it easier to consolidate and rationalize legacy environments. It shows you exactly what’s dependent on what and helps you create a seamless, staged plan for data center exits and cloud migrations, ensuring nothing is missed.

4. Hybrid Drift: Different Rules for Two Worlds

Hybrid drift is one of the hardest things to track when merging on-prem and cloud systems.

Post-merger, on-prem firewalls and cloud security groups often don’t share the same policy logic.

- Routing rules in data centers don’t match cloud route tables.

- Security policies diverge, causing gaps in compliance and potential security breaches.

Real-world case: Forward Networks uses digital twin models to simulate an entire hybrid topology and identify mismatches in security rules or traffic paths. They provide engineers with the visibility needed to trace end-to-end reachability, verify compliance, and test routing changes before they hit production. This level of visibility is essential for preventing hybrid drift from breaking critical traffic paths.

Firefly’s Role: Firefly’s drift detection and visibility tools provide real-time, end-to-end simulation of your hybrid environment, allowing you to trace traffic flows across both cloud and on-prem environments. Firefly automatically detects when there’s drift between cloud security groups and on-prem firewalls and helps you align policies to ensure they’re consistent across the merged estate.

5. Multi-Cloud Fragmentation: IAM Sprawl and Tooling Conflicts

Each M&A brings new cloud accounts, toolchains, and IAM systems.

- Multiple AWS Organizations, Azure tenants, and GCP projects.

- Each cloud provider has its own IAM models, tagging practices, and security baselines.

- Your cost reporting and compliance evidence are scattered across these platforms, making cross-platform visibility difficult.

Real-world case: VMware has highlighted that multi-cloud fragmentation is one of the most complex issues in M&A integration. Without a unified system to manage multiple IAM systems and cloud providers, you end up with a cloud estate that, on paper, looks like it’s merged but operates like separate silos.

Firefly’s Role: Firefly unifies IAM systems, tagging practices, and cost reporting across multiple clouds. With centralized visibility, you can enforce consistent guardrails (policies, tags, logging) across AWS, Azure, and GCP, reducing fragmentation and giving you a single control plane to manage all your cloud estates.

When infrastructure starts breaking during an acquisition, it’s rarely because something “failed.” It’s because both environments were built with different assumptions and practices. Without visibility into the current state and a clear understanding of connections and gaps, you end up in a reactive mode, dealing with fallout instead of being proactive.

Transition Service Agreements (TSAs)

When one company acquires another, there’s rarely a clean hand-off of IT systems on Day 1. The buyer’s cloud landing zone isn’t fully ready yet, and the seller can’t just power down its infrastructure. That’s where a Transition Service Agreement (TSA) comes in; it’s a temporary contract where the seller continues to run critical IT and infrastructure services until the buyer can stand on its own.

In theory, TSAs are meant to give breathing room. In reality, they become one of the biggest sources of drag and cost in Mergers and acquisitions integration. For cloud and infrastructure teams, TSAs define the window in which you must discover, migrate, and rebuild everything before the meter runs out. Each extra month means more spending, more dependencies on someone else’s systems, and a higher risk of delay.

Why TSAs exist in Mergers and Acquisitions

During a merger, both companies often have different systems for identity, networking, and hosting. Re-platforming them overnight isn’t realistic.

A TSA keeps essential systems alive while you:

- Build a new cloud foundation (landing zones, accounts, IAM, guardrails).

- Migrate workloads out of the seller’s environment.

- Re-establish connectivity and shared services under the buyer’s control.

For example, the TSA may cover identity federation through the seller’s AD forest, shared SD-WAN links for site connectivity, or access to their on-prem data centers for database replication. It’s a bridge, but it’s rented infrastructure.

Why TSAs are a double-edged sword

TSAs look like a cushion, but they’re a ticking meter.

- Costly extensions: every month you stay increases license, hosting, and staffing costs; you’re effectively paying twice (once for your new build, once to keep the old lights on).

- Limited control: the seller owns the change windows, tools, and network controls, so you can’t move at your own speed.

- Security exposure: shared identity and hybrid networks stretch your blast radius; a compromise on their side is now your problem too.

- Slower synergy realization: the business expects savings and speed; TSA extensions delay both.

In one large industrial merger, a delayed TSA exit added nearly $8 million in unplanned IT costs because shared Active Directory and network links couldn’t be cleanly separated. Those dollars weren’t for new capability, just rent on legacy systems.

Real-world example: Carrier’s portfolio changes

When Carrier Global acquired Viessmann Climate Solutions in early 2024 while divesting its Fire & Security business, both moves required multiple TSAs. Each covered services like identity, network, and application hosting between the two sides while migrations were completed. Those agreements bought time, but they also meant Carrier was paying to maintain environments it was trying to exit. This is typical of large multi-deal portfolios: one Mergers and Acquisitions adds new environments, while another TSA keeps the old ones alive. Without automation and clear exit plans, those overlaps eat both time and money.

How cloud-first integration shortens TSA reliance

The faster you can discover, codify, and migrate infrastructure, the faster you can shut down TSA dependencies.

- Start with discovery. Identify every workload, network path, and shared service still relying on the seller’s environment.

- Stand up a clean landing zone. Create your own identity, networking, and logging stacks first; don’t build on top of TSA systems.

- Codify inherited infrastructure. Use Infrastructure-as-Code tools to rebuild resources under your control instead of cloning them manually.

- Decouple identity early. Cut over from shared AD or Azure AD tenants to your own; rotate credentials and keys to remove hidden trust paths.

- Phase out connectivity. Replace shared VPNs and SD-WAN tunnels with your own backbone or cloud-native transit.

- Validate before decommission. Verify data integrity, logging, and reachability, then retire the old systems permanently.

Teams that treat TSA exit like a project sprint, with defined milestones, owners, and automation, consistently close months earlier than those managing it as an open-ended support period.

Where Firefly fits into the TSA execution

A tool like Firefly directly accelerates TSA exit because it turns unknown, ad-hoc infrastructure into something you can actually manage:

- Automatic discovery across AWS, Azure, GCP, and Kubernetes reveals every inherited resource still tied to the seller.

- IaC generation lets you codify those resources cleanly in your new environment without manual re-engineering.

- Drift and compliance tracking keep configurations aligned according to your needs while both clouds remain interconnected.

- Cost optimization reports flag duplicate or idle workloads that exist only to keep the TSA functional; easy savings once you migrate off.

TSAs aren’t just contracts; they define the technical runway for Mergers and acquisitions cloud integration. The longer you stay in one, the longer you delay owning your infrastructure. Successful teams treat TSA exit as a core engineering milestone, not a commercial afterthought.

Best Practices for Cloud/Infra-Centric Mergers and Acquisitions

Integrating the IT and cloud environments of two companies during a merger or acquisition is a complex, high-stakes task. This is not just about moving workloads, it’s about ensuring security, compliance, and seamless access to critical services while keeping everything running. Below are actionable best practices based on experience with real-world Mergers and acquisitions cloud integrations.

1. Start discovery before the acquisition is finalized

Even before the merger or acquisition is legally finalized, engineering teams should begin discovery. By the time you’re fully integrated, it’s too late to understand what you’ve inherited. Mapping out the existing infrastructure early can save time and money during the integration phase.

What to do:

- Scan and inventory all assets (cloud accounts, on-prem servers, DNS records, IP ranges) across both companies.

- Leverage automated discovery tools (e.g., Firefly) to map out cloud resources, workloads, and network connections across AWS, GCP, Azure, and Kubernetes.

- Identify regulatory and compliance boundaries (PII, financial data, or sensitive assets) and flag these areas for focused attention.

- Run external scans to uncover any public-facing assets that might not be in the existing asset registry.

What to avoid:

- Relying solely on internal asset lists or CMDBs, they often miss critical shadow assets or legacy systems.

2. Build your cloud foundation before starting migrations

A merger isn’t the time to design your cloud foundation. Establishing a solid, unified environment first is essential for avoiding confusion and mitigating security risks during the migration process.

What to do:

- Design your cloud landing zone, set up Shared VPCs, IAM policies, org structure, and billing configuration.

- Use Infrastructure-as-Code (IaC) to automate the creation of resources such as networks, security groups, IAM roles, and logs.

- Set up Org Policies to enforce security and compliance from Day 1. These policies should be standardized across both companies to ensure consistency.

What to avoid:

- Migrating workloads into a “temporary” environment that’s based on the seller’s infrastructure. This creates unnecessary complexity and risk.

3. Prioritize identity and access management (IAM) early

IAM is the gatekeeper to your cloud infrastructure. If you don’t manage access properly, you risk unintentional exposure and security breaches. Merging two IAM systems should be one of the first integration tasks.

What to do:

- Set up Cloud Identity or Google Workspace as the single identity provider.

- Ensure IAM roles are synced and policies are mapped across both companies, focusing on group-based IAM instead of user-level permissions.

- Plan to phase out any federated trust between the two organizations as you move resources under the buyer’s control.

- Implement Workload Identity Federation for CI/CD pipelines and other automated processes.

What to avoid:

- Letting IAM remain decentralized. Having multiple identity systems across the merged companies will slow the integration and increase risk.

4. Plan each migration wave like a product release

Treating migrations like “one-off” projects leads to missed deadlines and untracked issues. Every wave of migration should follow a structured, tested process to ensure a smooth transition.

What to do:

- Break the migration into waves: start with low-risk environments (e.g., non-critical services), move to business-critical workloads, and finally handle sensitive data or complex systems.

- Use CI/CD pipelines to automate the migration process and ensure configurations and security policies are consistently applied.

- Implement pre-migration validation (check IAM, network routes, and data integrity) and post-migration checks (check logs, service health, and performance).

What to avoid:

- Moving systems without validating them against your baseline. Skipping this step can lead to failures later on.

5. Integrate security and compliance from Day 1

Security can’t be an afterthought in a merger and acquisition integration. Compliance gaps and security risks are harder to address after migration, so build security controls into every phase of the integration.

What to do:

- Encrypt all data at rest and in transit.

- Implement network segmentation using VPCs, subnets, and security groups to isolate sensitive workloads.

- Set up continuous monitoring and audit logging to detect potential security issues early in the process.

- Use tools like Firefly to monitor and detect drift, ensuring compliance across inherited environments.

What to avoid:

- Delaying security or compliance checks until after migration. Proactive control is critical.

6. Manage TSA exit as a critical milestone

Transition Service Agreements (TSAs) are designed to provide temporary support, but they become a drain on resources if extended for too long. TSA exit is not a passive process; it should be treated as a project milestone with clear objectives and timelines.

What to do:

- Define exit milestones for each TSA service (identity, network, apps).

- Use automated tools like Firefly to track which services remain under TSA and identify when they can be safely decommissioned.

- Validate each cutover by testing connections, migrating data, and confirming service availability.

What to avoid:

- Letting TSA timelines drag. Proactive planning and execution are key to minimizing TSA reliance.

7. Establish governance and monitoring from the start

Governance ensures consistency, and continuous monitoring ensures that both companies’ infrastructures are aligned and compliant. Without strong governance, IT teams lose control over the cloud environment, and the integration stalls.

What to do:

- Set up centralized logging and monitoring from the outset using services like Cloud Monitoring and Cloud Logging to track performance, errors, and security incidents.

- Define and enforce resource tagging and labeling to ensure that workloads are aligned with business units, compliance standards, and cost centers.

- Use tools like Firefly to track cloud drift and keep both environments in sync during the transition.

What to avoid:

- Setting up governance and monitoring from the beginning ensures that the environment remains secure, compliant, and cost-efficient. It gives you visibility into the merged environment, making it easier to track issues and maintain control.

These best practices provide a clear, structured approach to cloud and infrastructure integration. However, beyond technical challenges, cultural differences between teams can often complicate the process.

Issues arise from the habits and engineering philosophies that each organization brings to the table. When these worlds collide, cloud drift becomes more than just a technical issue; it's the result of differing approaches meeting for the first time.

The Missing "Merge Story": Cultural Differences in Cloud Practices

When two companies merge, it's not just about consolidating code and infrastructure; you're inheriting deeply ingrained habits that can complicate the integration:

The challenges in the Missing Merge Story are evident: differing tools, processes, and habits create friction during M&A integrations. To overcome this, you need the right tools to unify these environments.

Firefly delivers exactly that, providing real-time visibility, automating resource discovery, and enforcing governance, ensuring a seamless, efficient integration process.

Firefly’s Role in Reducing “Cloud Noise”

Merging two cloud estates isn’t just about moving workloads; it’s about regaining visibility, standardizing governance, and eliminating redundant resources before cutover. Firefly automates these discovery, codification, and compliance workflows that would otherwise take weeks of manual auditing and scripting.

Here’s a breakdown of how Firefly replaces the manual effort during Mergers and acquisitions integration.

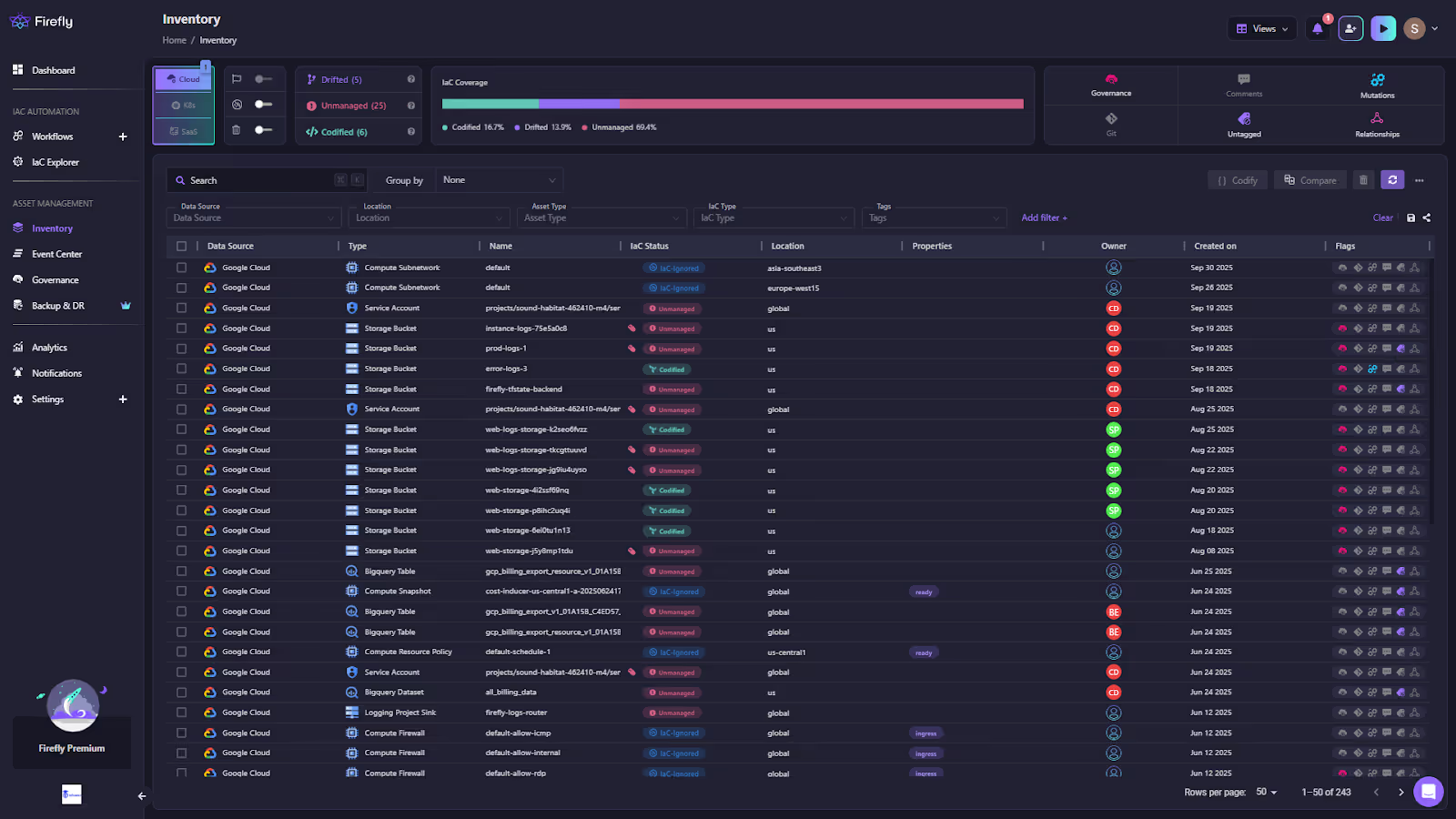

1. Discovering and Cataloging Resources Across Clouds

Without Firefly: During an acquisition, engineers typically have to log into multiple cloud consoles (AWS, Azure, GCP, Kubernetes) to list active accounts, projects, and resources. Each inventory must then be exported and correlated manually, including EC2 instances, S3 buckets, VPCs, IAM roles, databases, and more. Mapping dependencies (e.g., which VPC connects to which cluster or storage bucket) often requires cross-referencing configurations by hand.

With Firefly: It continuously scans connected cloud providers and Kubernetes clusters to create a unified, real-time inventory.

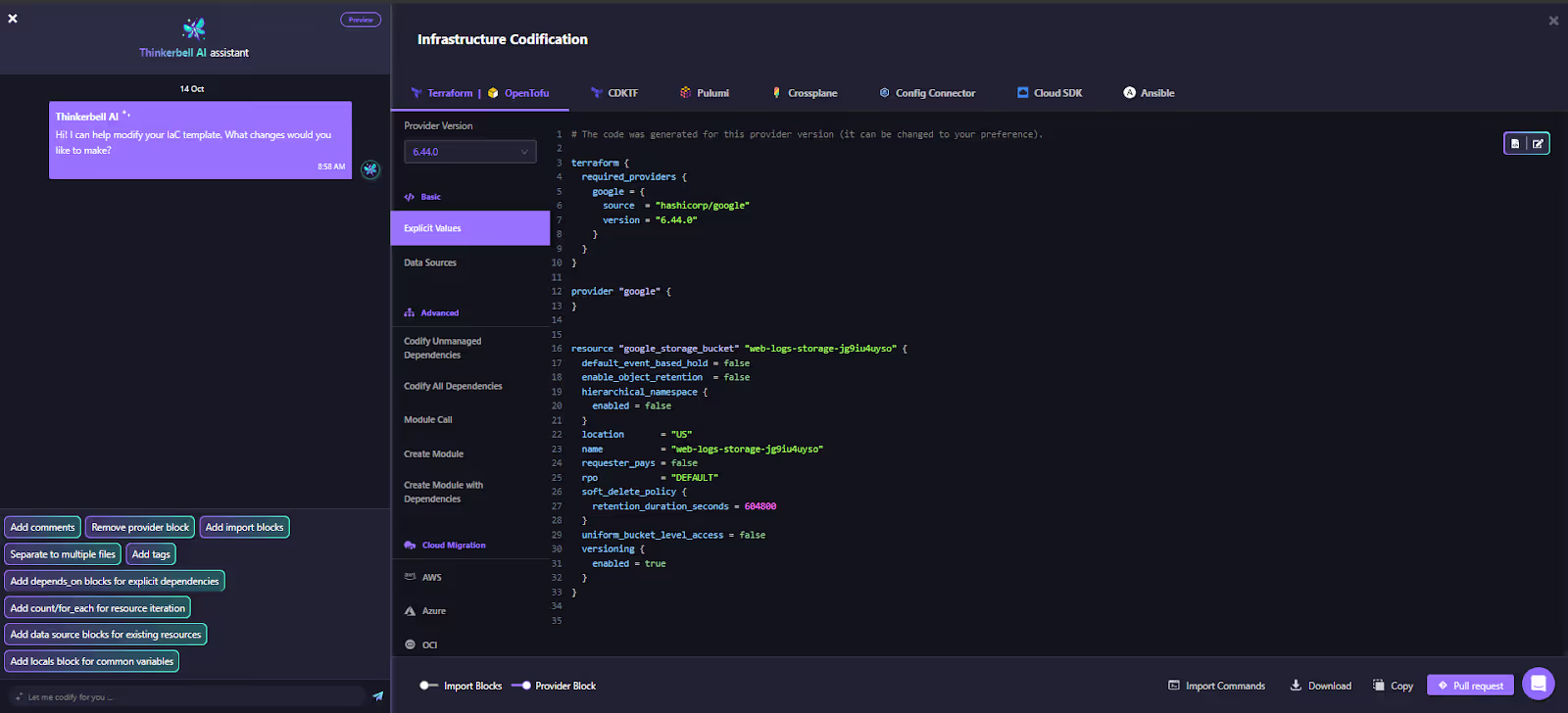

Here is how Firefly categorizes resources by their state (e.g., Codified, Drifted, Unmanaged), with associated metadata such as the owner and location:

This dashboard offers visibility into the entire environment, showing dependencies and relations between various assets across cloud providers and environments. It automatically maps relationships between resources, for example, linking a Cloud SQL instance to the GKE workloads consuming it, and exposes this dependency graph through a single interface.

Result: Instead of maintaining multiple spreadsheets and scripts, teams get a centralized, queryable inventory covering every asset across both environments, updated in real time.

2. Converting Unmanaged Infrastructure to Infrastructure-as-Code (IaC)

Without Firefly: After discovery, engineers must manually write Terraform or CloudFormation templates for existing resources. Each compute instance, VPC, subnet, and IAM policy needs to be re-created from console configurations or API exports, a tedious process prone to inconsistencies and drift from production.

With Firefly: Firefly allows you to codify discovered resources as Terraform, Pulumi, or CloudFormation code. It detects provider types and configuration parameters and produces valid, ready-to-apply IaC templates. These templates can then be version-controlled and reused for redeployment or further modification.

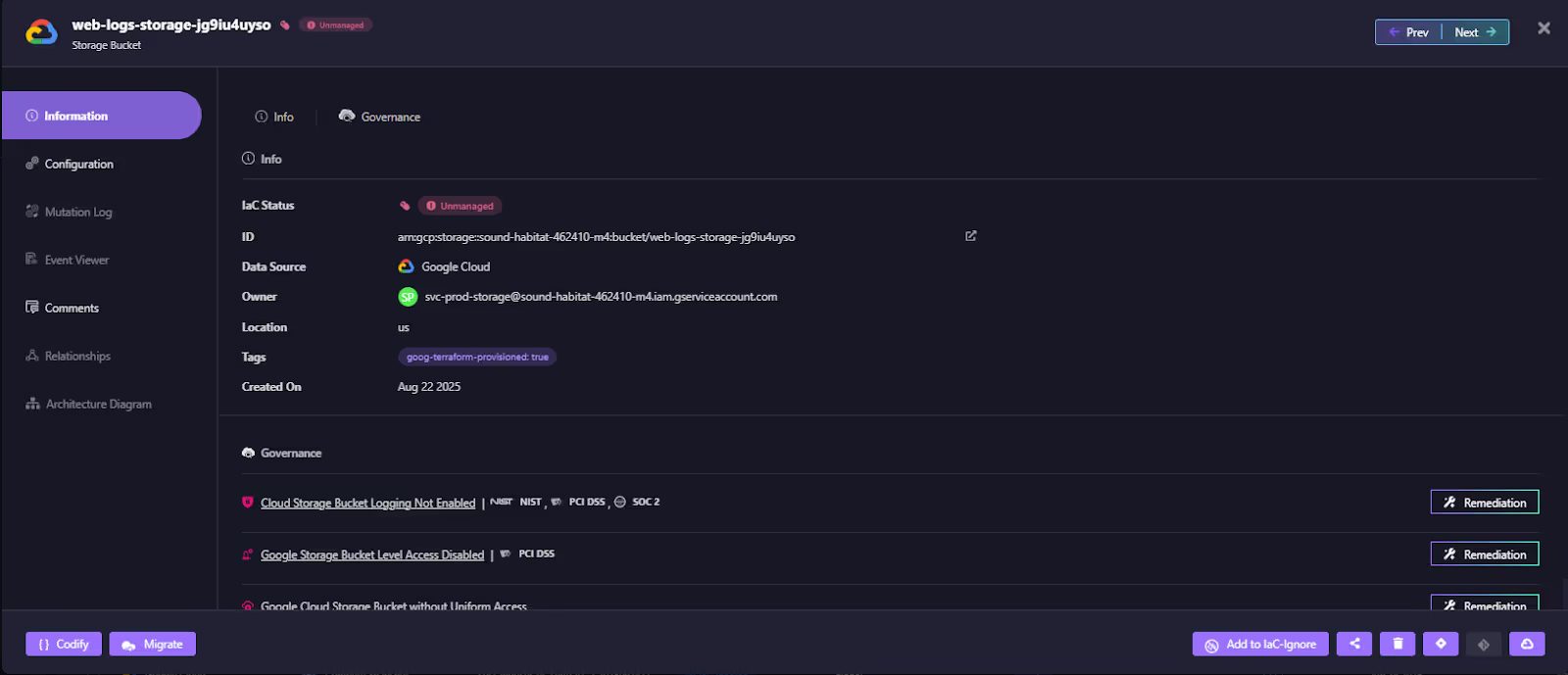

Let's take a look at the unmanaged resource below in Firefly:

On codifying this resource, Firefly generates a PR ready Terraform that can then be applied directly to provision the infrastructure, making it reusable and version-controlled. Here's how the scanned Google Cloud Storage Bucket config is auto-generated:

Result: Legacy, console-managed infrastructure is instantly converted into IaC, enabling automation, versioning, and Git-based collaboration across merged environments.

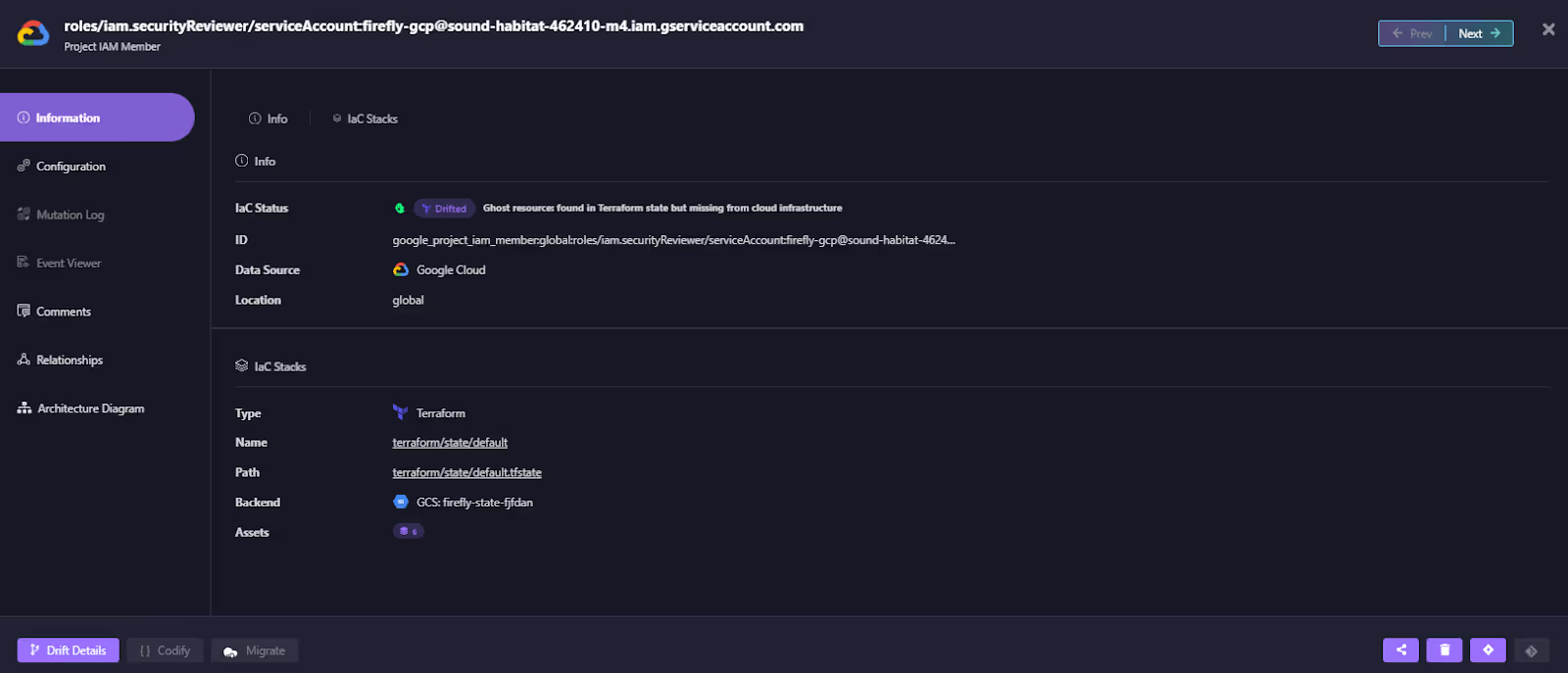

3. Detecting and Managing Infrastructure Drift

Without Firefly: Once the IaC baseline is in place, maintaining alignment between live infrastructure and code is critical. Traditionally, this means periodically exporting configurations, running manual diffs, and remediating mismatches by hand. Drift in IAM, networking, or security groups often goes unnoticed until a production issue arises.

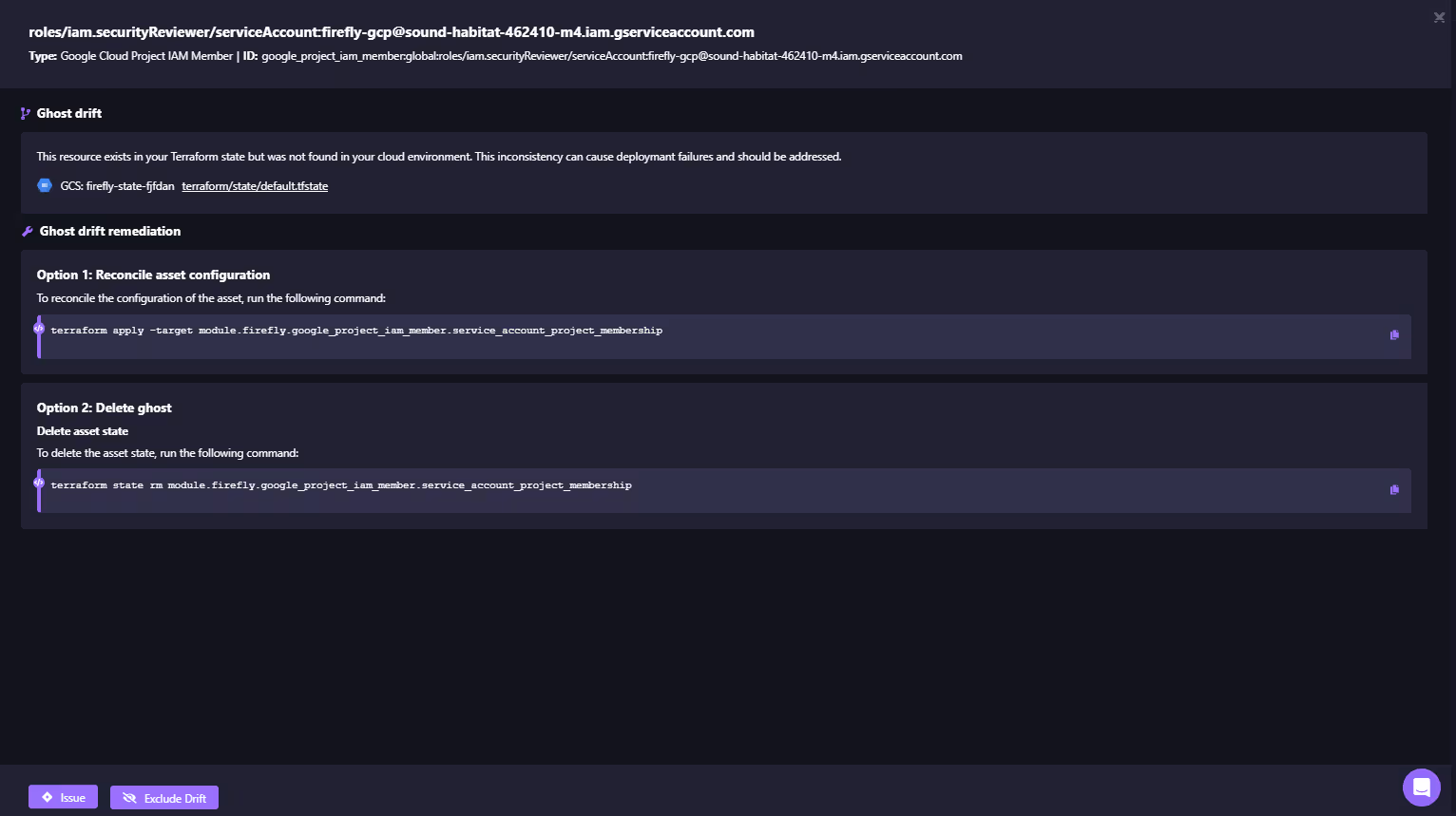

With Firefly: Firefly continuously compares IaC-managed resources against the source IaC definitions. When drift is detected, for example, if a security group is manually modified in one environment, Firefly not only flags the deviation but also offers remediation options. You can choose to revert the resource to match the IaC stack or update the IaC stack to reflect the current configuration

When drift is detected, such as when a security group is manually modified in one environment, Firefly flags the deviation and can automatically open a pull request in your Git repository with the necessary fix.

For example, here is marked with a drift status, indicating that a change has been detected between the infrastructure code and the actual deployed configuration:

It is also flagged as Ghost, which signifies that the IAM role exists in the Terraform state file but is missing from the live environment. Firefly also provides the remediation of these drifted resources, as shown below:

Here, it gives two options: one is to either reconcile the drift by:

terraform apply -target module.firefly.google_project_iam_member.service_account_project_membership

And the second option is to delete the asset state by:

terraform state rm module.firefly.google_project_iam_member.service_account_project_membership

This way, your multiple clouds stay synchronized with the codebase. All remediations are tracked through GitOps workflows, reducing configuration drift risk across both estates.

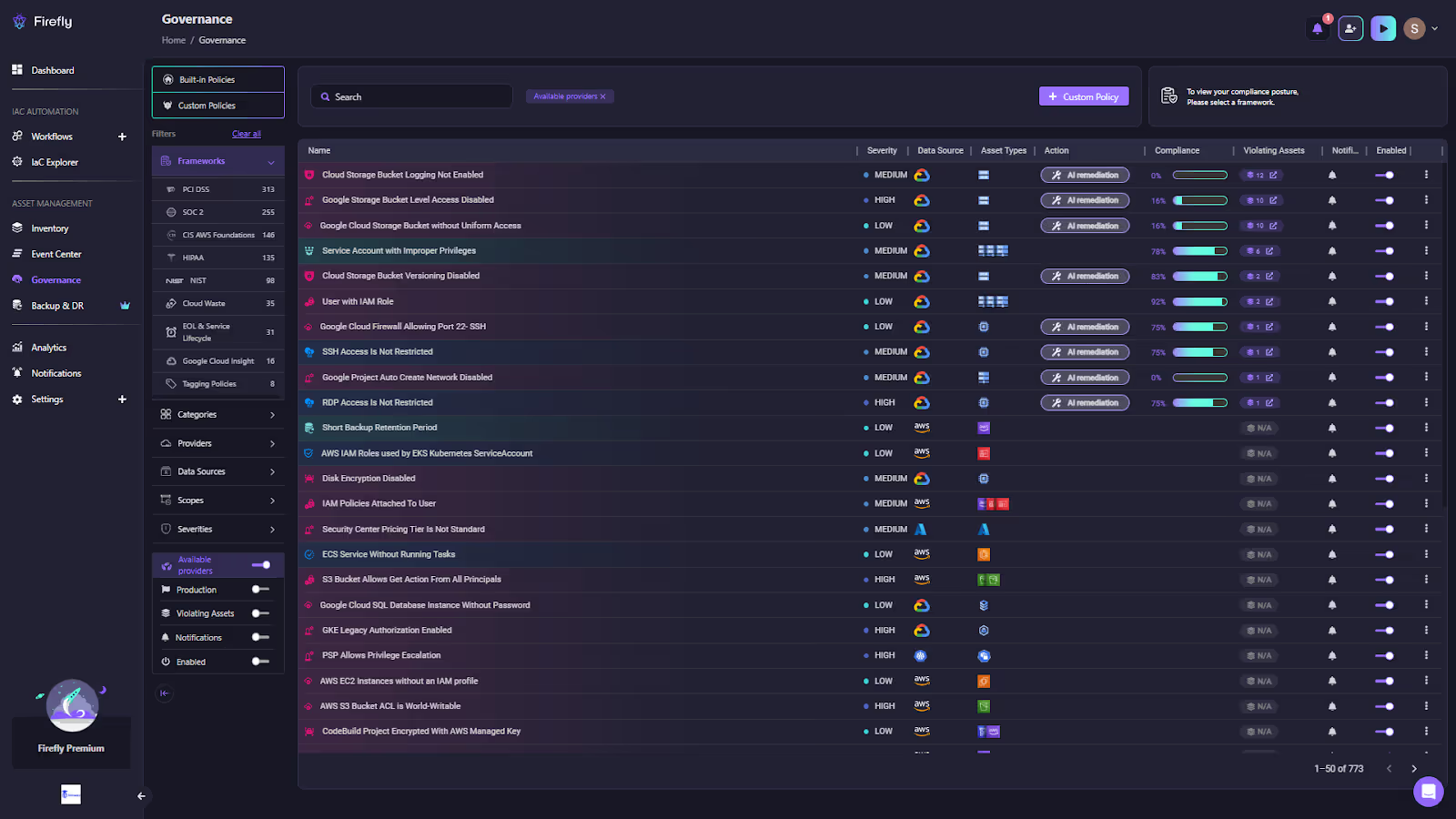

4. Enforcing Governance and Compliance Policies

Without Firefly: Governance teams typically manage separate policies per cloud, AWS Config, Azure Policy, or GCP Organization Policies, and must manually verify that encryption, IAM roles, and tags are compliant. Audits involve sampling resources rather than continuous monitoring, which leaves room for misconfigurations.

With Firefly: Firefly uses Open Policy Agent (OPA) to enforce governance as code across all connected environments. Here’s the OPA-based governance dashboard of Firefly:

The above snapshot highlights non-compliant resources, such as Google Cloud Storage Buckets, that are not encrypted or are missing required tags. The continuous monitoring and automated remediation reduce the burden on governance teams, ensuring resources remain compliant with organizational and regulatory policies.

Result: Governance is defined once and automatically enforced across AWS, Azure, GCP, and Kubernetes, closing policy gaps before they reach production.

5. Cost Management and Waste Reduction

Without Firefly: Financial teams must consolidate cost exports from different clouds, normalize currencies, and manually correlate billing data with discovered resources. Identifying idle VMs, unattached storage, or over-provisioned databases often requires custom scripts and days of analysis.

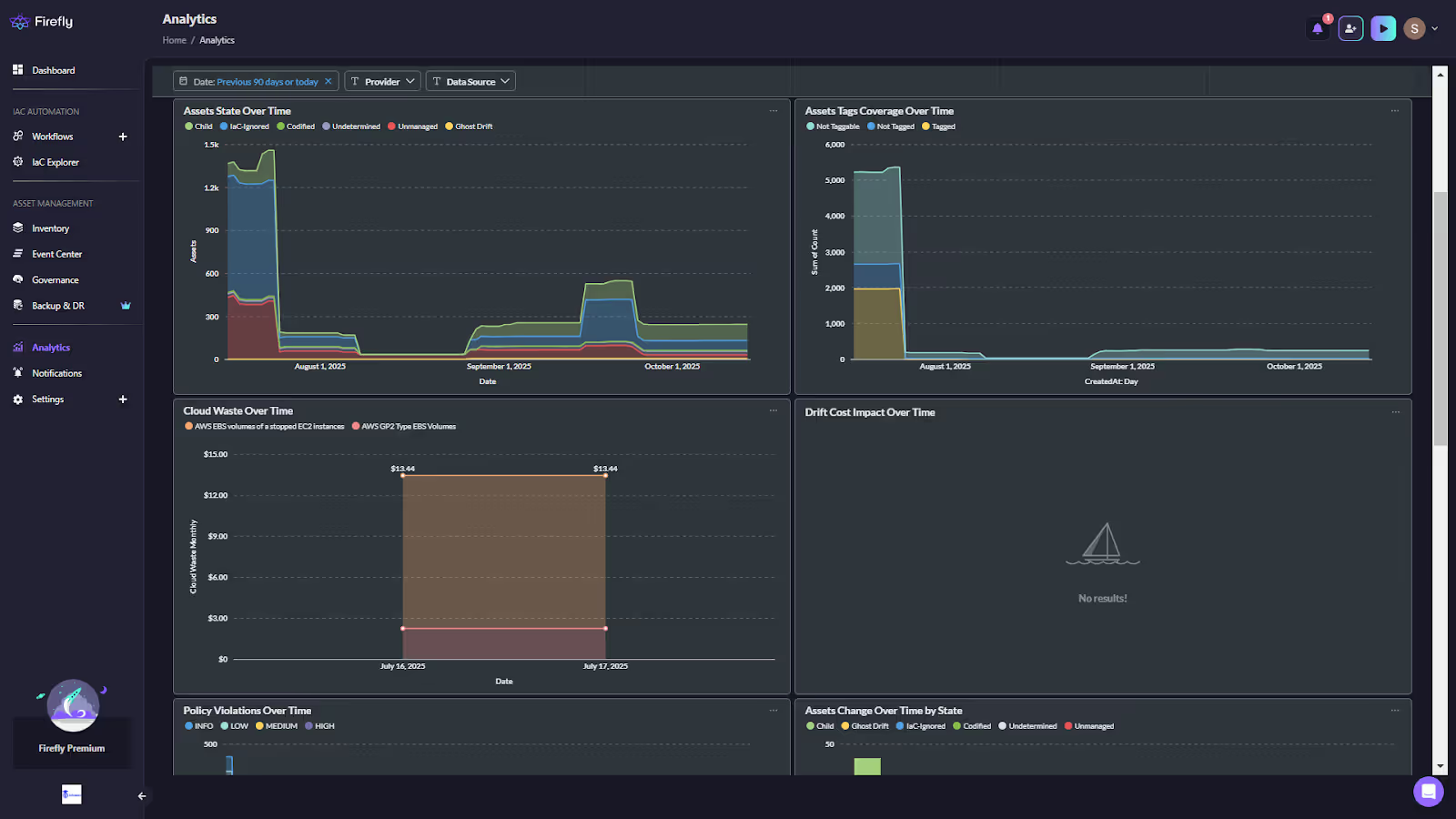

With Firefly: Firefly provides real-time cost visibility for every discovered resource, enriched with metadata like owner, project, and tag. It highlights unused or underutilized resources and quantifies potential savings from right-sizing or shutdown. As shown in the Firefly’s analytics page below, Firefly highlights potential cost savings and waste from unused resources, such as unoptimized VMs or storage buckets:

By tracking cost impact over time, Firefly ensures that the infrastructure is cost-efficient and optimized all the time. Cloud costs are continuously monitored alongside infrastructure visibility. Redundant and idle resources are surfaced early, allowing teams to optimize spend during the transition period.

6. Managing Changes Across the Cloud Environments

Without Firefly: Change tracking during integration typically relies on a mix of audit logs, ticket comments, and chat updates. Identifying who modified which environment, and why, often requires manual correlation across providers.

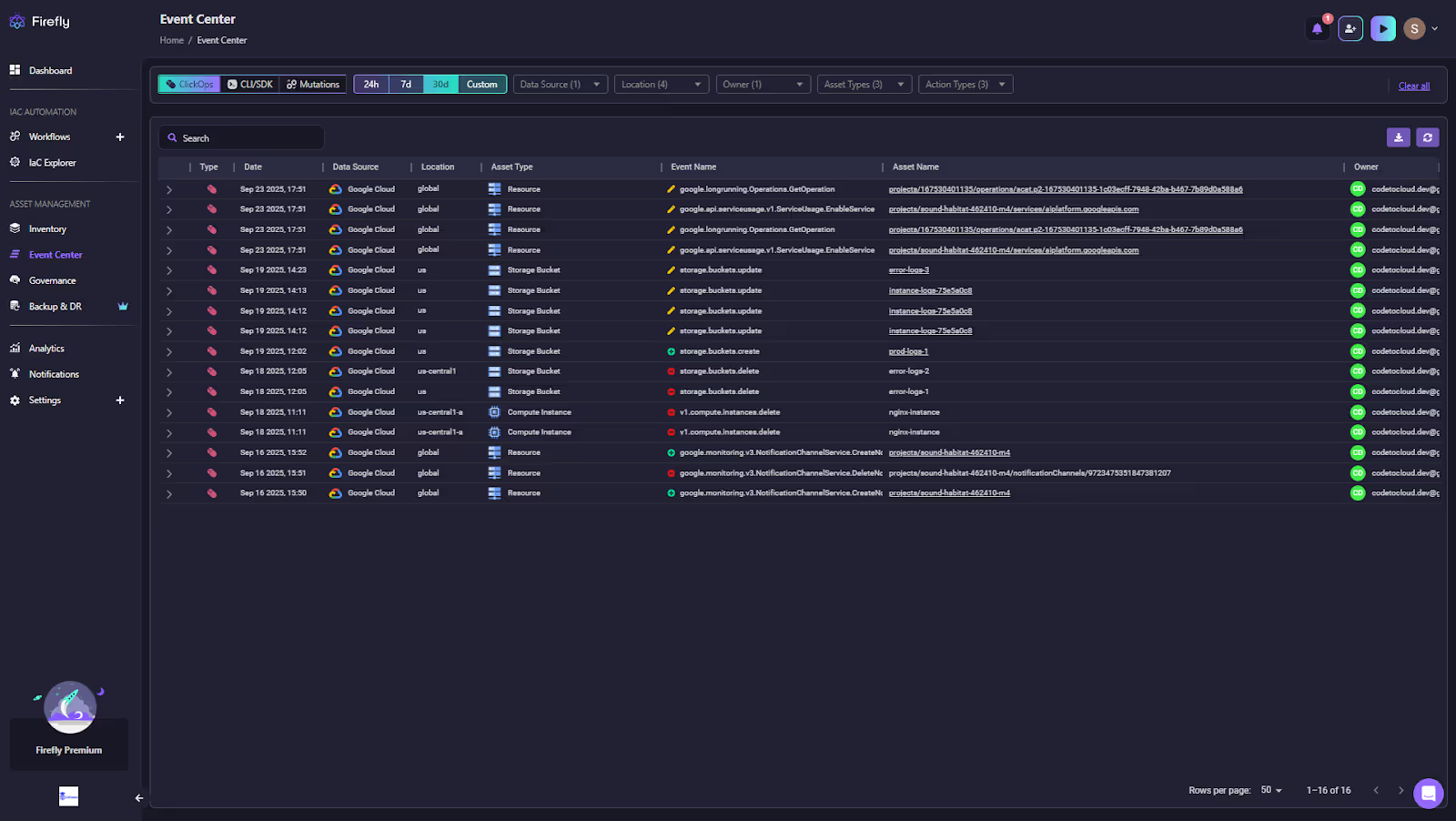

With Firefly: Firefly’s Event Center aggregates all infrastructure changes across AWS, GCP, Azure, and Kubernetes into a unified audit timeline, including events triggered via ClickOps, CLI/SDK activity, and direct mutations.. Each event includes event type, user identity, resource type, timestamp, and before/after configuration deltas. In the snapshot below, Firefly’s Event Center provides a comprehensive timeline of all infrastructure changes:

Teams gain complete visibility into every infrastructure change, improving traceability, rollback readiness, and compliance auditing across merged environments.

FAQs

How can cloud drive value in M&A transactions?

Cloud accelerates M&A integrations by offering scalable infrastructure, reducing operational costs, and enabling rapid resource provisioning. It helps unify IT systems faster, reduces data center expenses, and improves collaboration across teams, driving quicker realization of synergies.

What major factors drive M&A?

M&A is typically driven by factors like market expansion, access to new technologies, cost and revenue synergies, geographic growth, and strategic alignment. Companies seek to combine strengths, enhance capabilities, and streamline operations to achieve long-term value.

What is M&A in cloud?

M&A in cloud refers to the integration of cloud platforms and technologies during mergers and acquisitions, where companies consolidate cloud services, migrate workloads, and unify systems to leverage cloud capabilities for faster, more efficient operations and scalability.

What is the M&A integration lifecycle?

The M&A integration lifecycle includes pre-deal planning, deal closing, post-deal integration, execution, and optimization. It involves aligning business operations, IT systems, and cultures to achieve synergies, with cloud technology often playing a key role in streamlining integration and ensuring scalability.