TL;DR

- DR in the cloud often fails due to untested failovers, siloed tools, and missing coverage for legacy systems.

- Small cracks like outdated runbooks and ignored apps add up, causing long downtime and compliance risks.

- Key to success: track RTO/RPO, run frequent tests, automate failover, and cover all systems.

- Firefly simplifies DR with codified infra, real-time detection, automated recovery, and continuous compliance.

- Result: faster recovery, stronger security, lower costs, and audit-ready resilience.

According to a 2014 study by Gartner, the average cost of application downtime is $5,600 per minute. This makes disaster recovery (DR) a crucial practice on the cloud. Cloud adoption was supposed to make disaster recovery easier. With global regions, managed backups, and elastic scaling, enterprises believed resilience would be built-in. In reality, disaster recovery is one of the most fragile parts of modern infrastructure.

There are two major types of recovery challenges:

Infrastructure recovery: when physical or virtual systems fail and workloads must be restored elsewhere. For example, an AWS region goes down, but the failover process hasn’t been tested, leaving applications stuck offline.

Data recovery: when backups or replicas diverge from what’s in production. A database may have daily snapshots, but if ransomware strikes at noon, those snapshots leave a 12-hour gap of unrecoverable data loss.

These gaps exist because enterprise systems are dynamic. Engineers deploy fixes directly in production, security teams add emergency firewall rules, SaaS vendors change APIs without notice, and backups often run on outdated schedules. The result is that what’s documented in the DR plan rarely matches what’s actually running in the environment.

The consequences are severe: recovery times stretch beyond SLA promises, compliance audits expose missing safeguards, attackers exploit unprotected data, and costs balloon when outages linger longer than planned. This blog will explore why DR fails, what enterprises need to get it right, and how Firefly’s solution ensures resilience.

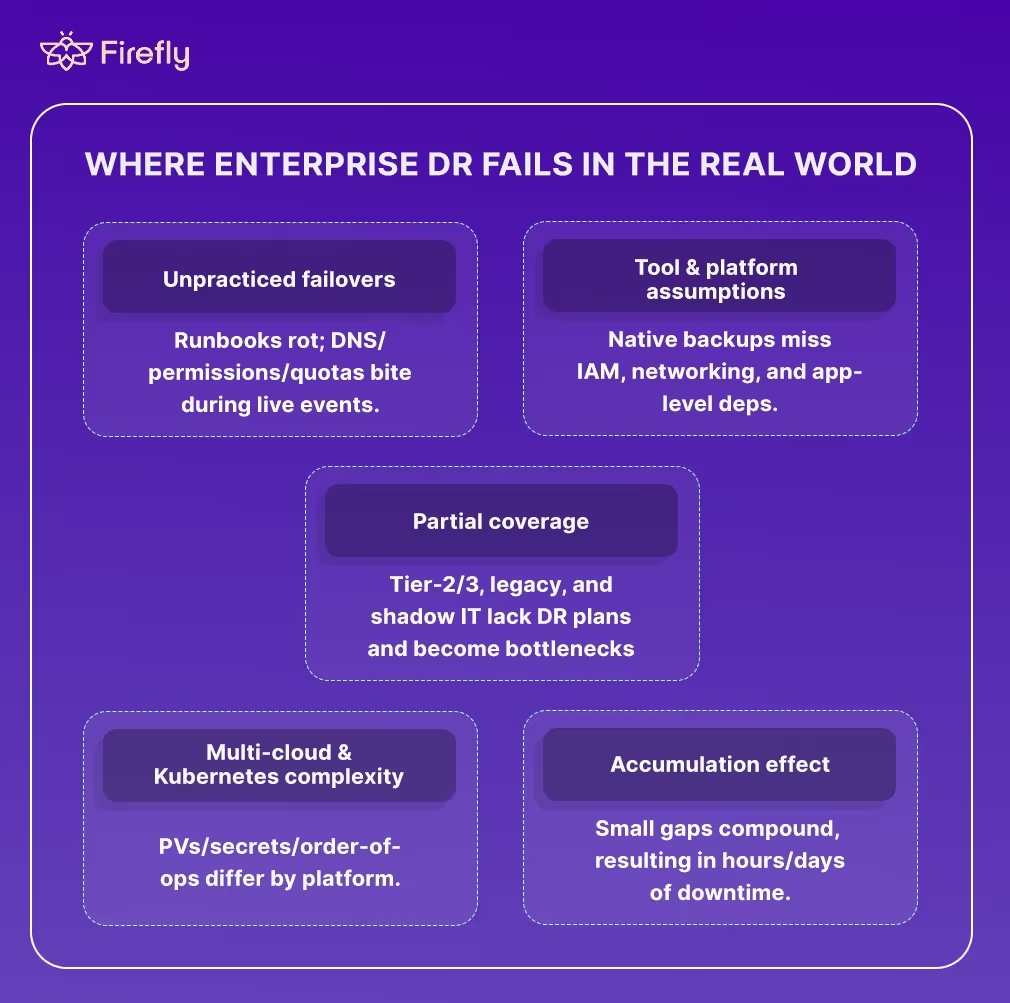

Where Enterprise DR Fails In The Real World

The failure patterns are surprisingly predictable. Disaster recovery doesn’t collapse overnight; it usually unravels through a handful of common scenarios that repeat across enterprises.

Unprepared Failover Scenarios

Every DevOps engineer knows this story: a region-wide outage strikes, pressure mounts, and the recovery runbook is pulled out for the first time in months. Scripts fail, permissions are missing, DNS changes don’t propagate, and teams scramble to improvise. The recovery eventually works, hours later, but the downtime damage is already done.

Tool and platform assumptions

Cloud providers advertise native backup and replication features, but those tools don’t always integrate with enterprise workflows. An AWS RDS snapshot may not restore IAM roles or custom configs. Azure’s backup might exclude certain SaaS connectors. These mismatches create a false sense of safety until recovery day proves otherwise.

Partial coverage of critical systems

Most enterprises protect their Tier-1 apps, but Tier-2 and Tier-3 workloads are often left with weaker recovery strategies, or none at all. Legacy databases, SaaS integrations, or shadow IT services like Google Drive, Dropbox, or personal cloud storage accounts used by employees to store and share work-related files without IT approval fall outside formal DR planning. Ironically, it’s often these “secondary” systems that bottleneck recovery because they hold essential data or dependencies.

Multi-cloud and Kubernetes complexity

Recovery looks different depending on the stack. In AWS, it might mean restoring EBS volumes in another region. In Azure, it redeploy resources groups with dependencies intact. In Kubernetes, recovery involves rehydrating persistent volumes, secrets, and configs in the right order. Each platform brings its own failure points, and when combined, complexity multiplies.

The accumulation effect

Missed test drills, outdated backups, overlooked dependencies; each seems minor on its own. But they add up over time. By the time an enterprise faces a true outage, these small cracks form a systemic gap. Recovery timelines stretch far past SLAs, and confidence in the DR plan collapses.

Small-Scale Recovery vs. Enterprise-Scale Recovery

Not all recovery efforts are equal. Sometimes a disruption is minor and recoverable with minimal effort. Other times, the scope and scale of failure expose deep cracks in enterprise preparedness. To understand why large organizations struggle, it’s helpful to distinguish between normal recovery and enterprise recovery.

Small-Scale Recovery: Creating Resource Snapshots

Normal recovery happens in most teams that experience small-scale outages or localized failures. Typical examples include:

- A developer accidentally deletes a Kubernetes namespace, and the cluster is restored from a recent backup.

- A VM crashes, but snapshots allow it to be spun up again in minutes.

- A SaaS outage forces teams to switch to a manual workflow temporarily until the provider restores service.

Here’s how you create an automated backup policy for a production RDS database in AWS:

aws backup create-backup-plan --backup-plan '{

"BackupPlanName":"daily-rds-plan",

"Rules":[

{"RuleName":"daily","TargetBackupVaultName":"Default",

"ScheduleExpression":"cron(0 3 * * ? *)",

"StartWindowMinutes":60,"CompletionWindowMinutes":180,

"Lifecycle":{"DeleteAfterDays":14}}

]}'

aws backup create-backup-selection --backup-plan-id <PLAN_ID> --backup-selection "{

\"SelectionName\":\"rds-selection\",

\"IamRoleArn\":\"arn:aws:iam::<ACCOUNT_ID>:role/service-role/AWSBackupDefaultServiceRole\",

\"Resources\":[\"arn:aws:rds:us-east-1:<ACCOUNT_ID>:db:prod-db\"]

}"

In case of a crash, redeploy the database using the most recent snapshot:

aws rds restore-db-instance-from-db-snapshot \

--db-instance-identifier prod-db-recovery \

--db-snapshot-identifier prod-db-snap-2025-09-24 \

--db-subnet-group-name prod-db-subnets \

--vpc-security-group-ids sg-0123456789abcdef0 \

--db-instance-class db.m6g.large

The above code shows how automation reduces recovery time: instead of scrambling to create ad-hoc snapshots or restore manually, you already have daily backups in place and a clear restore process you can run in minutes.

These disasters are disruptive but containable. The impact usually looks like this:

- Limited downtime for a single team or application.

- Some productivity loss or customer inconvenience.

- Minor SLA violations or temporary security risks.

These disasters are serious but generally manageable. With back-up, small failover procedures, or vendor recovery, most organizations can return to normal operations without long-term damage.

Enterprise-Scale Recovery

Enterprise recovery is a very different challenge. This is what happens when disaster strikes across multiple regions, platforms, or critical systems, and the cracks in planning become visible. For instance, a simultaneous AWS outage across multiple regions can disrupt both primary applications and critical backups, exposing gaps in recovery strategies.

Characteristics of enterprise recovery failures:

- Scale: Instead of one VM, thousands of workloads need to be restored simultaneously.

- Partial protection: Only Tier-1 apps were included in the plan, leaving secondary systems as bottlenecks.

- Shadow IT and SaaS dependencies: Critical business processes run on services never included in the DR strategy.

- Tool gaps: Cloud-native backups miss configurations, identities, or cross-platform dependencies.

- Compliance exposure: Recovery timelines and data integrity fall short of what regulations like GDPR, HIPAA, or SOX require.

- Accumulation effect: Years of under-tested plans and unverified assumptions suddenly surface.

The impact of enterprise-scale disasters is brutal:

- Business-critical risk: Prolonged downtime causes lost revenue, reputational damage, and legal exposure.

- Financial waste: Manual recovery and SLA penalties cost enterprises millions.

- Operational paralysis: Teams lose confidence in DR, slowing innovation and forcing endless fire drills.

Enterprise recovery isn’t just about restoring systems; it’s about whether the entire continuity model holds up when the stakes are highest.

Why Traditional Disaster Recovery Fails at Scale

Most enterprises try to handle disaster recovery with the tools and processes they already have: periodic backup jobs, vendor failover options, or quarterly tabletop exercises. These approaches can work for small environments, but they collapse under the scale and complexity of modern enterprises.

Scheduled Backups

Backups are usually the first line of defense, but they only protect what you’ve chosen to back up. If some applications or systems are left out, they simply won’t come back during recovery. Even when backups exist, they’re just snapshots in time.

For example, if your last snapshot was at 3 AM and ransomware hits at 3 PM, you’ve instantly lost 12 hours of important data. On top of that, most companies never test how fast they can actually restore from these backups. So when disaster strikes, no one really knows how long it will take to get back online.

Native Cloud Recovery Tools

AWS Backup, Azure Site Recovery, and GCP’s Disaster Recovery services promise easy resilience. The problem is, these tools operate in silos. They don’t understand cross-cloud dependencies or application-level needs.

For example, restoring an RDS database snapshot may not include custom IAM roles, networking rules, or SaaS connectors required for the app to function. The result: “restored” systems that still don’t work.

Manual Runbooks and Scripts

Many organizations rely on recovery runbooks or custom scripts for failovers. On paper, they look fine. But in practice, they often fail.

For example, a script written to switch traffic to another region might stop working after a cloud provider updates its API. Or a runbook may assume an engineer with special access is available, but that person has left the company. When an outage hits, teams end up guessing instead of executing.

Periodic Tabletop Exercises

Some companies test recovery quarterly or annually through simulated outages. The flaw is obvious: disasters don’t wait for the next scheduled drill. By the time the exercise happens, countless configuration changes, new apps, and SaaS integrations have gone untested. The gap between the plan and reality grows wider every day.

For example, creating an AWS IAM Policy for Multi-AZ enforcement:

package dr.policies

deny[msg] {

some i

r := input.resource_changes[i]

r.type == "aws_db_instance"

r.change.after.multi_az == false

msg := sprintf("RDS %s must be Multi-AZ", [r.address])

}

The result is that traditional DR strategies may look good in presentations but fail under the pressure of real-world, enterprise-scale disasters.

Key Metrics for Measuring DR Success

Enterprises can’t improve what they don’t measure. Tracking the right metrics ensures disaster recovery plans aren’t just theoretical but actually deliver when needed. The most critical KPIs include:

- Recovery Time Objective (RTO): The maximum acceptable downtime before systems must be restored.

- Recovery Point Objective (RPO): The maximum acceptable data loss measured in time (e.g., last 15 minutes of transactions).

- Failover Test Frequency: How often DR drills are performed and validated across regions and platforms.

- Codification Coverage: The percentage of infrastructure codified into IaC, ensuring resources are recoverable via automation.

- Recovery Drill Success Rate: The percentage of tests that meet RTO and RPO targets without manual workarounds.

By monitoring these metrics continuously, enterprises can assess whether their DR strategy is resilient enough and where investments or improvements are needed.

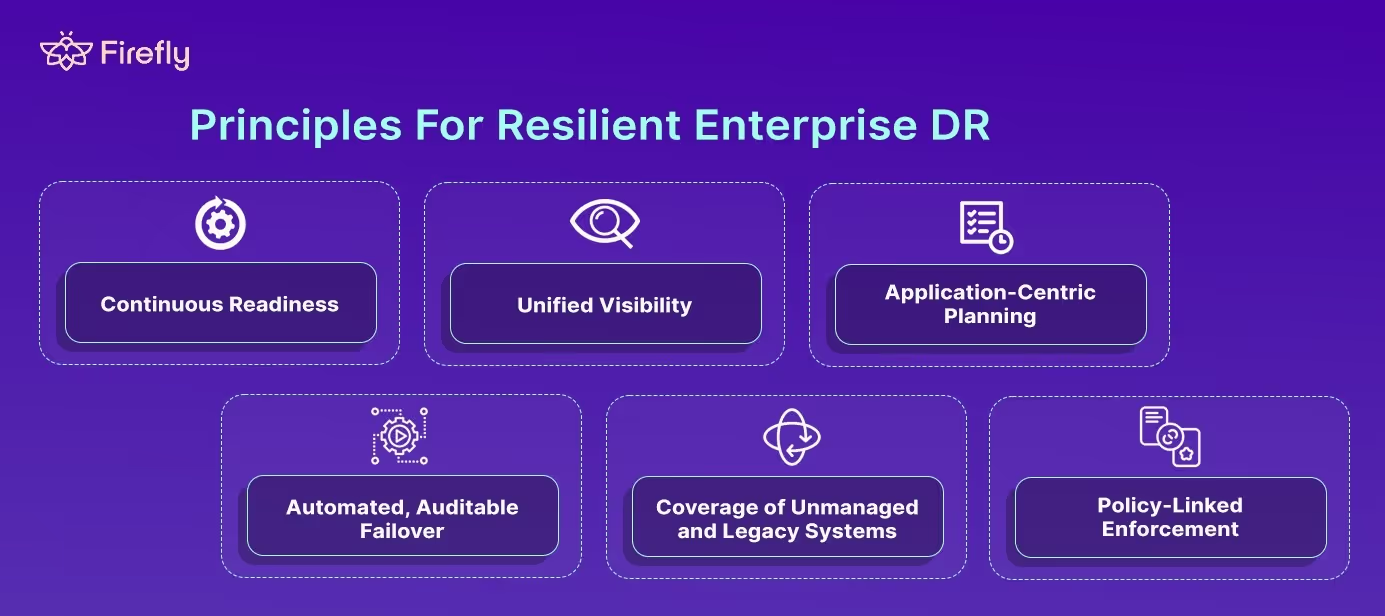

Design Principles For Resilient Enterprise DR

To recover effectively at scale, enterprises need principles that go beyond just backup tools or annual drills. Successful disaster recovery relies on continuous, integrated systems that address the complexities of modern infrastructure. Here’s how to make that happen:

1. Continuous Readiness

Recovery can’t be validated once a year during a tabletop exercise. In dynamic, multi-cloud setups, changes happen daily. Continuous, automated testing of failover scenarios is essential. If a region outage occurs at 3 AM, you should know immediately whether systems can switch over, not find out during the next quarterly review.

2. Unified Visibility

With cloud environments spanning AWS, GCP, and Azure, visibility across all platforms is crucial. Without a centralized view, businesses risk missing issues, like an unprotected app on Azure, until it's too late. A unified dashboard that tracks DR posture across platforms ensures you're always prepared.

3. Application-Centric Planning

At enterprise scale, infrastructure alone isn’t enough. For an app running on AWS but relying on a database in Azure, your DR plan must include both. Failing to account for cross-platform dependencies could lead to partial recoveries, leaving your service broken. This means capturing dependencies across clouds, SaaS tools, identity systems, and networking, and ensuring the whole service can recover, not just the pieces.

4. Automated, Auditable Failover

Manual recovery is too slow and error-prone. Enterprises need automation that can orchestrate failovers and restorations with minimal human intervention. And those workflows must be auditable, with a clear trail of who approved what, for compliance and accountability.

5. Coverage of Unmanaged and Legacy Systems

Most failures happen in systems that were never included in the DR plan. Enterprises need to bring legacy apps, shadow IT services, and manually managed systems under a unified recovery framework. Without this, those blind spots will always undermine resilience. For example, if you have systems, like an old Windows server in Azure, that need to be included to prevent recovery issues when they fail.

6. Policy-Linked Enforcement

Recovery isn’t just about uptime; it’s also about compliance. Enterprises must tie DR validation directly to policies, ensuring that recovery objectives (RTOs, RPOs) align with regulations like HIPAA, SOX, or GDPR. If a system can’t meet its RPO, that should be flagged immediately, not discovered after a breach or outage.

Enterprise-grade disaster recovery requires moving from reactive, backup-first strategies to continuous, automated, application-aware resilience. Without these principles, teams are stuck hoping their plans work instead of knowing they will.

Firefly’s Differentiated Approach to Enterprise Disaster Recovery

The principles of enterprise-grade disaster recovery sound good in theory, but most teams can’t operationalize them with the tools they already have. The problem is that existing solutions are siloed, overly manual, and lack full coverage across clouds, SaaS, and legacy systems.

That’s where Firefly comes in. Firefly was designed to take DR from a fragile, manual exercise to a controlled, auditable workflow that prevents downtime before it happens.

Here’s how Firefly approaches DR differently:

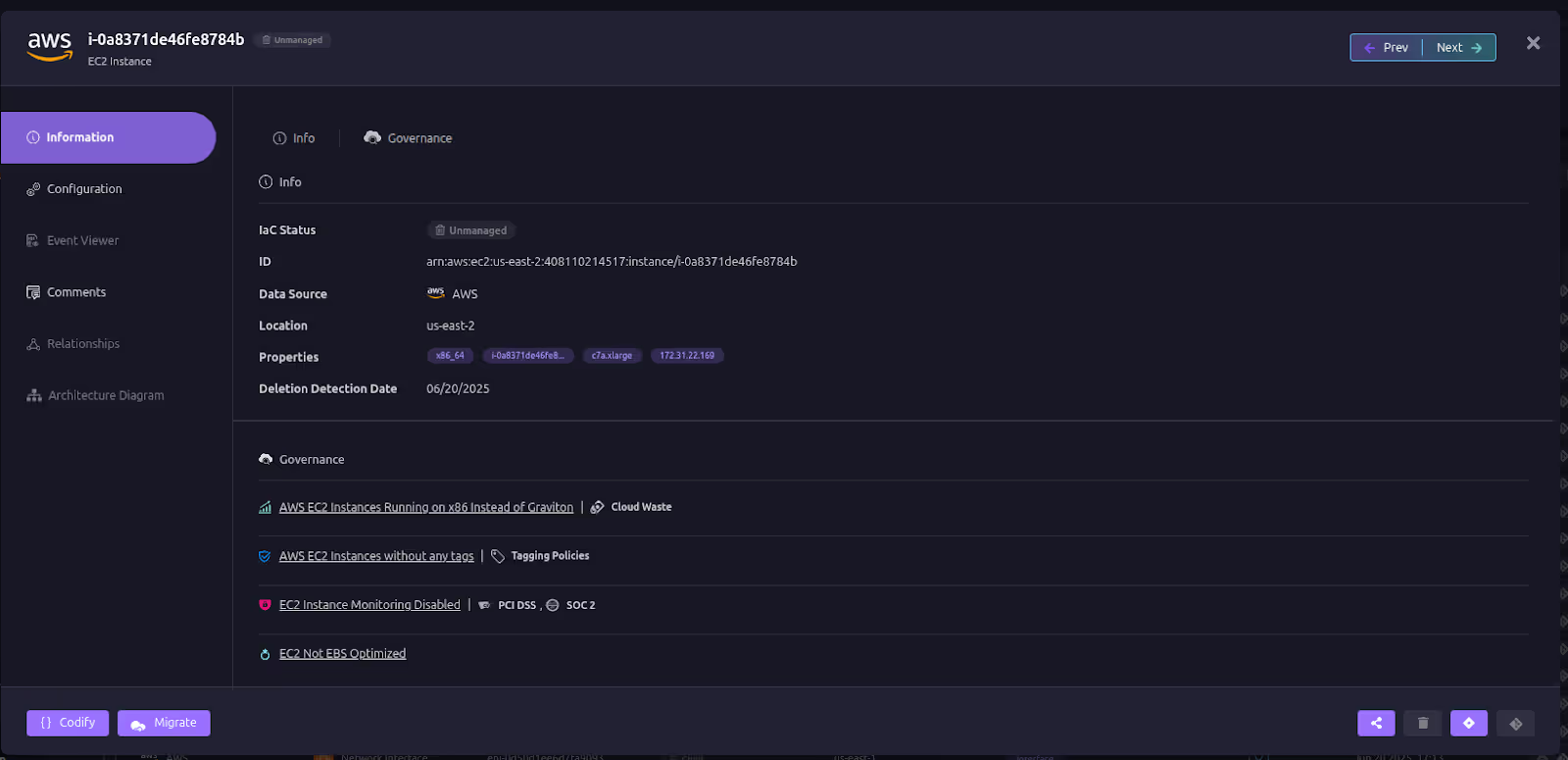

Rapid Recovery of Deleted or Misconfigured Assets

Instead of scrambling to rebuild a missing VM or database from memory, Firefly can instantly regenerate its Infrastructure-as-Code (IaC) definition. From there, it opens a pull request into your GitOps workflow so the asset is recreated exactly as it was, with full auditability. This ensures recovery doesn’t just restore service, it also brings the resource under code management, reducing future risk.

Here’s a deleted EC2 that can easily be codified and recovered with Firefly:

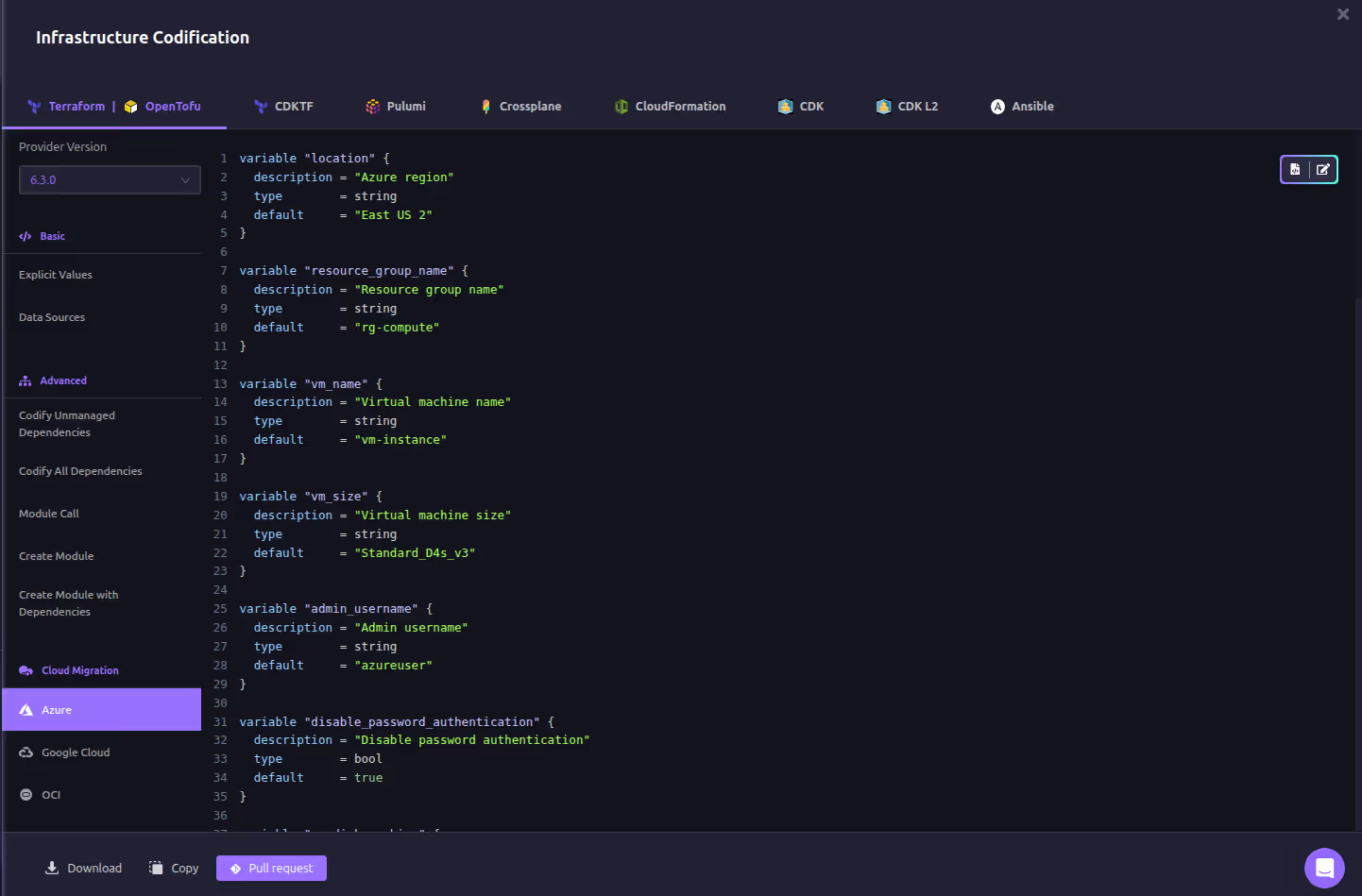

Codification as a DR Foundation

Most outages get worse because critical assets aren’t documented or managed by code. Firefly’s codification engine converts all resources, even legacy or ClickOps-created ones, into Terraform, Pulumi, or CloudFormation. This creates a complete, version-controlled blueprint of your infrastructure. During recovery, teams can redeploy resources consistently, with dependencies intact, instead of guessing at configurations.

Here’s an example of generating IaC of unmanaged resources in Firefly:

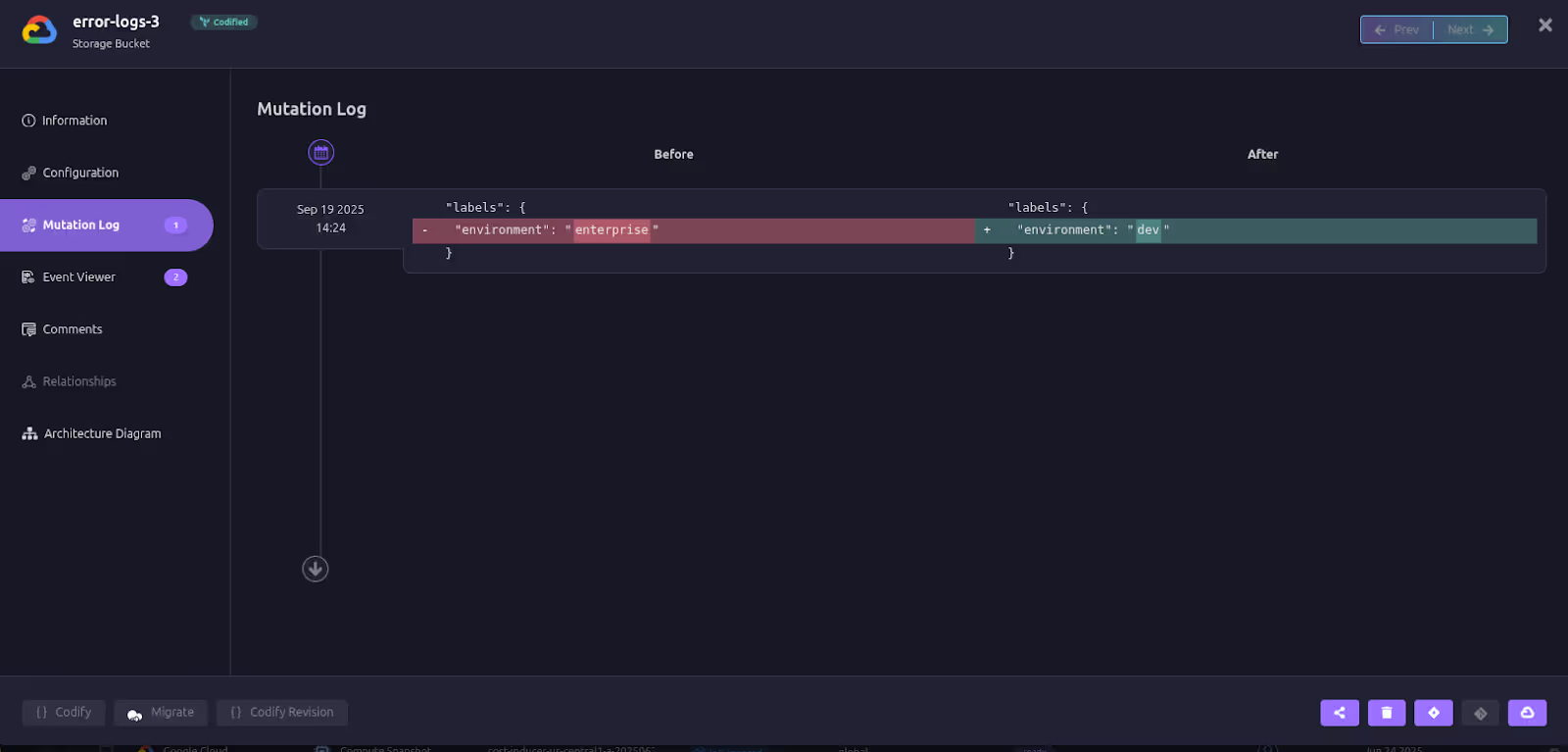

Event-Centered Diagnosis and Root Cause Analysis

When the source of failure isn’t obvious, Firefly’s mutation logs and ClickOps event tracking provide a clear timeline of changes across environments. Teams can see exactly who modified what, when, and why. With one click, they can roll back to a previous known-good configuration via a pull request, turning chaotic troubleshooting into a systematic recovery process.

Here’s how Firefly identifies and highlights changes in mutation logs and ClickOps events:

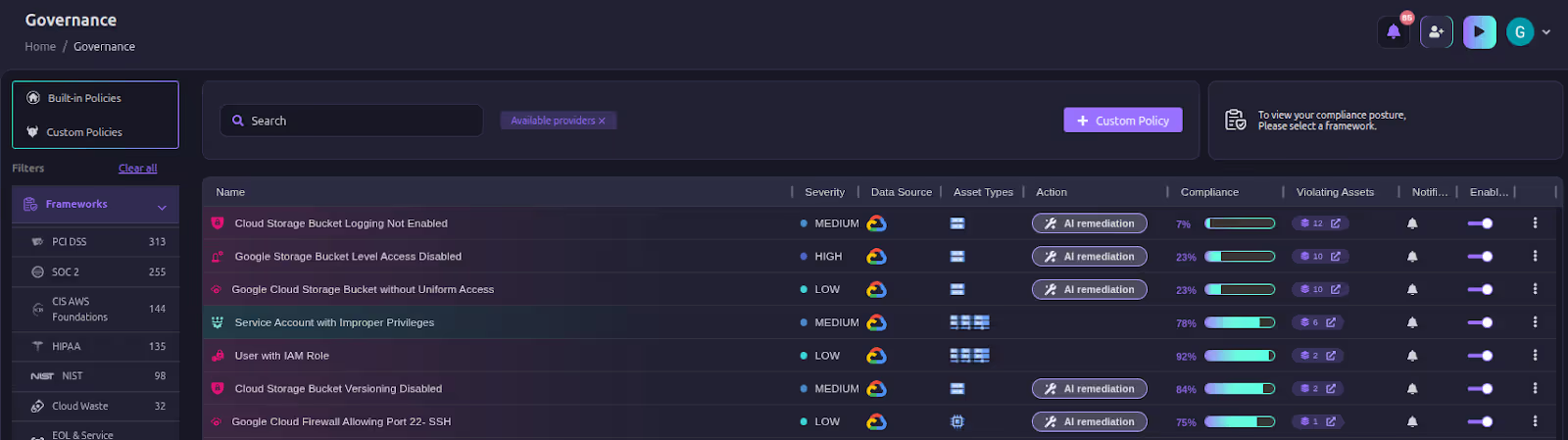

Proactive Prevention with Notifications and Policies

Firefly isn’t just reactive; it helps prevent disasters in the first place. Real-time notifications alert teams to risky changes like untagged assets, missing backups, or guardrail violations. Its governance engine enforces policies such as “RDS must be Multi-AZ,” “DynamoDB must have point-in-time recovery,” or “Kubernetes workloads must have CPU limits.” By catching misconfigurations before they cause outages, Firefly strengthens resilience day to day.

Here are some examples of Firefly Governance policies:

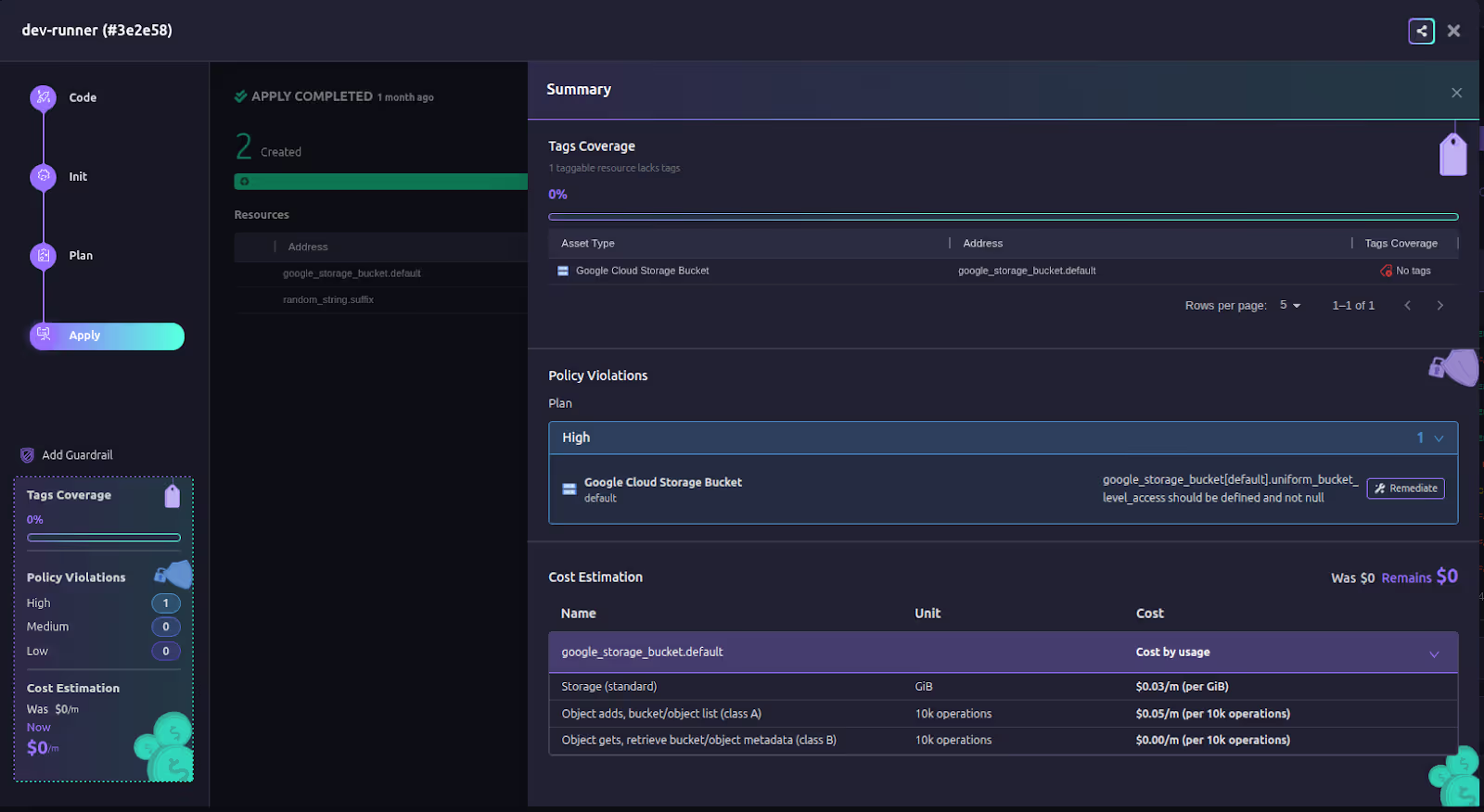

Automated, Auditable Workflows

Detection without resolution is just noise. Firefly integrates with GitOps and enterprise workflows like Slack, Teams, Jira, and ServiceNow. Every recovery action, whether recreating an asset or rolling back a config, flows through auditable pull requests with clear approvals. Compliance teams get a built-in evidence trail, while engineers get automation that accelerates recovery.

You can create custom workflows with custom guardrail policies that ensure that you run policy-compliant workflows directly from Firefly:

In short, Firefly operationalizes disaster recovery by combining codification, real-time detection, automated recovery, and proactive governance into one system. It doesn’t just help enterprises bounce back from outages; it helps them prevent many of those outages altogether.

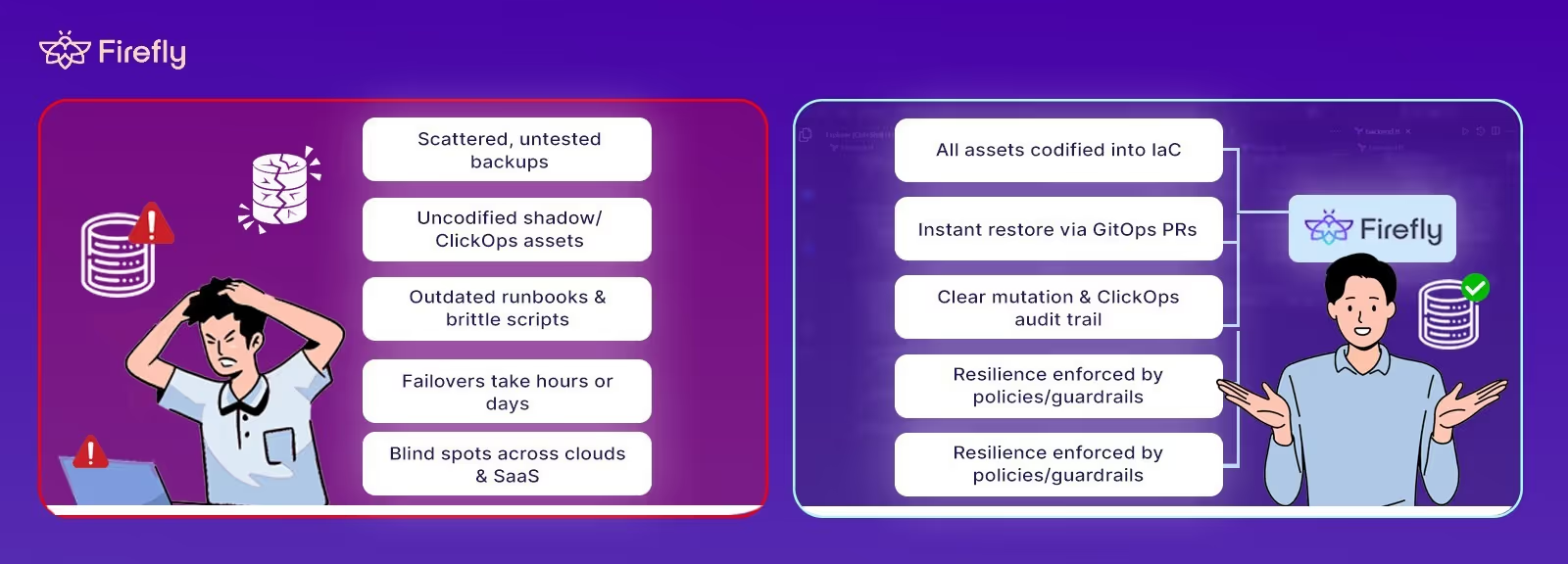

Disaster Recovery Before vs. After Firefly

For most enterprises, disaster recovery today is a fragile patchwork of backups, scripts, and untested assumptions. Plans look solid in presentations but often collapse under the stress of real outages. Firefly changes that by making recovery visible, actionable, and reliable, all in real time.

The shift is dramatic: instead of hoping recovery will work, enterprises using Firefly can know it will, and prove it.

With Firefly, enterprises can simplify disaster recovery and experience immediate benefits across teams. Recovery times are drastically reduced, as assets are restored instantly via codification and GitOps. Security improves with automatic detection of misconfigurations and enforcement of best practices like Multi-AZ databases. Compliance becomes easier, with automatic logging of every recovery and policy check, meeting regulations like HIPAA or GDPR. Firefly also boosts operational efficiency, eliminating manual tasks, and helps reduce costs by minimizing downtime and identifying mismanaged assets. Overall, Firefly turns disaster recovery into a predictable, efficient process, giving teams confidence in their ability to recover quickly.

FAQs

What Are the 5 Steps of Disaster Recovery?

The five steps are: risk assessment (identify threats), business impact analysis (understand critical systems), strategy development (define recovery methods), plan implementation (set up tools and processes), and testing/improvement (regularly validate and update the plan).

What Are the Three Types of Disaster Recovery?

The three types are: backup and restore (simple, cost-effective but slower), hot/warm/cold site recovery (alternate sites with varying readiness), and cloud-based disaster recovery (leveraging cloud infrastructure for flexible, rapid failover).

What Are the 4 Pillars of Disaster Recovery?

The four pillars are: prevention (reducing risks), preparedness (planning and training), response (executing recovery actions during incidents), and recovery (restoring systems and learning from the event).

Which Is More Important, RTO or RPO?

Both are critical. RTO (Recovery Time Objective) defines how quickly systems must be restored, vital for uptime and customer trust. RPO (Recovery Point Objective) defines how much data loss is acceptable, essential for data integrity. The priority depends on business needs; customer-facing apps often prioritize RTO, while data-heavy systems prioritize RPO.