TL;DR

- Infrastructure drift occurs when Terraform, Pulumi, or any IaC definitions no longer match the actual running configuration, and configuration drift happens when runtime settings, such as IAM roles, security group rules, or Kubernetes replicas, are modified directly. Both forms lead to pipeline failures, audit discrepancies, and unmanaged costs.

- Drift surfaces in enterprises through specific patterns: console hotfixes made during incidents, Azure Policy or agent-driven modifications applied after deployment, Kubernetes autoscalers or manual edits that change the live state, and unmanaged legacy workloads that were never codified.

- While small mismatches can be reconciled with good hygiene, at enterprise scale, where only 60 to 80 percent of resources are managed as code and the rest exist across multiple clouds and shadow accounts, drift compounds until pipelines cannot converge, and IaC stops being a reliable source of truth.

- Traditional drift management approaches, such as CMDB-driven audits, large and noisy Terraform plans, siloed native cloud tools, and custom scripts, break down across hundreds of accounts and thousands of resources, leaving blind spots and creating more operational overhead than value.

- Firefly addresses these gaps by continuously detecting drift in real time, consolidating visibility across all environments into a single inventory, showing side-by-side diffs with actor attribution, codifying unmanaged resources, and driving remediation through pull requests, resulting in fewer failed deployments, stronger compliance evidence, improved security posture, and reduced waste.

Infrastructure drift happens when what’s defined in Infrastructure-as-Code tools like Terraform, Pulumi, or CloudFormation no longer matches what’s actually running in the cloud. Configuration drift goes a step further: even if resources are deployed correctly, their runtime settings, such as IAM roles, security group rules, or cluster configurations, diverge from what the code describes. Both forms of drift create gaps between the intended state and the actual state of infrastructure.

Even organizations that invest heavily in IaC cannot fully escape drift. Emergency console fixes during incidents, legacy infrastructure that was never codified, and automated changes from scaling policies or security tools all introduce variations that remain invisible to the codebase. Over time, these mismatches accumulate until pipelines start failing, compliance audits surface discrepancies, and security posture weakens. Costs also spiral as orphaned or misconfigured resources stay online without governance.

Gartner projects that by 2028, 25% of organizations will report significant dissatisfaction with their cloud adoption, with uncontrolled costs and suboptimal implementation cited as primary drivers. Drift plays a central role in this dissatisfaction, because unmanaged change leads directly to inefficiency, wasted spend, and increased operational risk. What starts as small, untracked differences between code and infrastructure quickly becomes a systemic issue that undermines the very benefits cloud and IaC were meant to deliver.

How Drift Manifests in Cloud-Native Enterprises

Drift isn’t one thing; it shows up in multiple ways across day-to-day operations, and each path creates different problems for engineering teams. Some forms block pipelines outright, others erode compliance posture, and some silently increase cost.

The examples below show the most common sources of drift in cloud-native enterprises and the operational pain they cause:

Console Fixes During Incidents

When production is unstable, engineers often skip the pipeline and patch things directly in the cloud console. A security group rule is changed or an IAM role is updated on the fly. These edits keep systems running but break alignment with IaC. AWS itself warns that out-of-band changes cause CloudFormation stack updates to fail, and Terraform shows the same behavior during plan.

Platform and Tool Modifications

Drift is not limited to manual edits in the console. Cloud platforms and runtime tools frequently modify resources automatically. In Azure, for example, Policy effects such as modify or deployIfNotExists can inject tags, enable encryption, or enforce diagnostic settings after a resource is created. If those enforced changes aren’t captured in Terraform or ARM templates, the code and the live environment diverge.

Security and monitoring agents introduce similar gaps. Antivirus daemons may update OS registry keys, log agents can change file paths or retention policies, and cloud security tools often rewrite IAM or firewall rules at runtime. These changes happen outside the IaC pipeline, which means Git never records them. The result is drift that originates from automation itself, not just from humans.

Kubernetes Edits and Autoscaling

In Kubernetes, drift happens the moment someone uses kubectl edit or when an autoscaler updates replica counts. These are normal actions, but they instantly desync the cluster from the manifests in Git. GitOps tools like Argo CD flag these states as OutOfSync, which shows just how routine this kind of drift is.

Unmanaged and Legacy Resources

Most enterprises only codify 60–80% of their infrastructure. The rest, old VMs, storage buckets, or entire shadow accounts, are unmanaged and drift fastest. These don’t show up in IaC at all, so pipelines can’t reconcile them. Surveys consistently tie partial codification to wasted spend and compliance gaps.

Accumulation Over Time

A single console patch looks harmless, but across thousands of resources, the divergence compounds. Eventually, terraform plan or a Helm upgrade can’t converge, leaving the pipeline unusable. Finastra reported this exact problem: staging and production drifted until releases started failing and audits broke down, and it was only resolved once continuous drift checks and policy-as-code were enforced.

Normal Drift vs. Enterprise Drift

It’s key to draw this distinction because small-scale mismatch issues in the cloud are normal, but once your infrastructure and practices scale, the same issues become blockers. Knowing when drift is “just noise” vs. when it’s threatening your reliability, security, or costs changes how you should respond.

Normal Drift - Localized Drift in Day-to-Day Operations

Normal drift shows up in everyday operations: a devops engineer fixes something via the console during an incident, or a monitoring agent tweaks runtime settings like log retention, or an auto-scaler changes replica count. These happen because pipelines are slow, emergencies demand speed, or operational tools act automatically.

The effects are limited: a Terraform plan fails, a Kubernetes apply reports differences, or there’s a small compliance gap in a non-production environment. It adds friction, but teams can fix these with standard IaC hygiene, pull console changes into code, run drift detection in pipelines, and enforce GitOps.

Enterprise Drift - From Partial Codification and Day-2 Changes

Enterprise drift emerges when everyday mismatches accumulate across large, distributed environments. Several factors drive this:

- Partial codification: In most enterprises, only 60–80% of resources are defined in IaC. The rest exist outside code because engineers inherited legacy workloads, acquired new business units with unmanaged infrastructure, or delivered features quickly without backfilling IaC. These uncodified resources drift immediately because no pipeline governs them.

- Day-2 operations gap: After deployment, infrastructure needs runtime changes: scaling, tuning thresholds, patching agents, and enabling monitoring. These updates often happen through consoles, scripts, or platform defaults instead of IaC, so the live state diverges from what’s in Git.

- Governance and visibility limits: A global platform team may own core modules, but application teams often create additional resources. Over time, hundreds of repos and accounts make it unclear which resource belongs to which module or team. When drift surfaces, it’s difficult to trace ownership or reconcile changes across so many codebases.

- Impact at enterprise scale: In a large estate with thousands of resources, these factors create systemic issues. Terraform or Helm pipelines stop applying cleanly because too many resources have changed outside of code. GitOps controllers remain in OutOfSync states, making automated reconciliation unreliable. Compliance teams fail audits because the deployed environment doesn’t align with the documented source of truth. Finance reports show millions in spend that can’t be tied to tagged owners because unmanaged resources accumulate without governance. Security posture weakens as these blind spots increase the attack surface.

Enterprise drift is not just a routine mismatch; it’s the breakdown of IaC as a source of truth once unmanaged resources, runtime changes, and fragmented ownership compound across thousands of deployed assets.

Why Traditional Drift Management Fails at Scale

Most enterprises already use IaC features, native cloud tools, or internal scripts to check for drift. These approaches can be effective in smaller, well-defined environments, but they do not keep pace once infrastructure spans thousands of resources, multiple clouds, and distributed teams.

Why Traditional Drift Management Fails at Scale

Traditional drift management is the manual and reactive practice of defining a “golden baseline” for infrastructure, periodically checking live systems against it, and then remediating mismatches by hand.

- A baseline is often stored in a CMDB or documented as the intended configuration.

- Discovery jobs or manual scans capture the current state.

- Audits are run periodically to compare the live state against the baseline.

- Drift is flagged and administrators fix it manually, usually through change requests or incident tickets.

This approach made sense in static data center environments, but it doesn’t fit the reality of cloud-native enterprises where thousands of resources are created, scaled, and modified every day. The challenges become obvious at scale: drift is only detected reactively, it isn’t tied to IaC pipelines, and remediation bypasses Git entirely.

Enterprises that embraced Infrastructure-as-Code have tried to modernize drift management using tools like Terraform, native cloud services, or custom scripts. These are improvements over CMDB-driven audits, but they still inherit many of the same scaling limitations.

Terraform Plan Loops

Terraform detects drift by running terraform plan and comparing state files to deployed resources. This only covers codified assets. Legacy VMs, one-off IAM roles, or storage accounts created outside code never appear in a plan. In large estates, plans can take hours to complete and output thousands of noisy diffs, many triggered by autoscaling or policy defaults. Reviewing them at scale becomes impractical, and critical drift signals get buried.

Native Cloud Drift Tools

AWS Config, Azure Policy, and GCP Security Command Center each detect when a resource diverges from a rule or standard. Their scope is limited to the provider where they run, and they don’t tie drifted resources back to the Terraform or Helm modules that deployed them. For example, AWS Config might flag an overly permissive security group, but won’t show which repo or team owns it. In multi-cloud environments, this results in separate drift reports without a consolidated view.

Custom Scripts and One-Off Automations

Platform teams often write scripts to query APIs and check for drift. For example, in AWS a quick Python script with boto3 can list S3 buckets and flag untagged ones that don’t align with the IaC baseline:

import boto3

# Fetch all buckets in AWS

s3 = boto3.client('s3')

buckets = s3.list_buckets()["Buckets"]

# Example Terraform state bucket names for comparison

terraform_buckets = {"logs-prod", "backups-main"}

# Find buckets missing from IaC or missing tags

for bucket in buckets:

name = bucket["Name"]

tags = s3.get_bucket_tagging(Bucket=name).get("TagSet", [])

if name not in terraform_buckets or not any(tag["Key"] == "owner" for tag in tags):

print(f"Drift detected: {name}")This works well for one account with a handful of buckets. But in an enterprise running 40+ AWS accounts, each with thousands of buckets, these scripts collapse under their own weight. Engineers face rate limits, API changes break scripts, and coverage is always partial. Without centralization, drift detection devolves into brittle tooling that can’t support compliance or operational scale.

Periodic Audits

Quarterly or monthly drift audits do surface problems, but only after weeks of changes have accumulated. By that point, Terraform or Helm pipelines may no longer converge, and remediation becomes a re-codification effort rather than a quick fix. This reactive approach slows delivery and adds operational overhead.

Static Guardrails

Preventive policies, such as AWS Service Control Policies, Azure Blueprints, or Kubernetes admission controllers, are useful for blocking misconfigurations at creation time. What they don’t address are runtime changes or resources created before the policies were applied. Those continue to drift undetected.

The Scaling Challenge

Each of these methods, such as Terraform plans that only cover codified resources, native tools like AWS Config, Azure Policy, or GCP SCC that remain siloed, custom scripts that are brittle, and periodic audits or static guardrails that surface drift too late, addresses only part of the problem. None provides continuous, unified visibility across codified and unmanaged infrastructure or automated reconciliation back into IaC, so gaps inevitably accumulate into operational, compliance, and financial risks.

Principles for Enterprise-Grade Drift Management

Enterprises can’t rely on periodic audits or ad-hoc scripts to manage drift. At scale, drift must be addressed continuously and in a way that ties directly back to the organization’s IaC and compliance processes. Several principles guide effective enterprise-grade drift management:

Continuous Detection

Drift doesn’t wait for quarterly reviews. Event-driven monitoring tied to resource lifecycle events is necessary to detect changes as they occur. For example, integrating with AWS Config, Azure Policy, or Kubernetes admission events allows drift to be flagged immediately, before pipelines or audits fail.

Unified Visibility

A single view across all environments, AWS, Azure, GCP, Kubernetes, and unmanaged resources, is critical. Without this, teams are forced to pivot between multiple consoles and scripts. Unified visibility enables platform teams to trace drift across providers and tie resources back to their respective owners.

IaC as the Source of Truth

Reconciliation should flow back through code, not ad-hoc patches. Whether the drift is a console change, a runtime update, or a policy-driven modification, the fix must be represented in Git. This ensures the next pipeline run doesn’t undo or reintroduce the same issue.

For example, consider a Terraform configuration stored in Git that provisions an S3 bucket with versioning explicitly disabled:

resource "aws_s3_bucket" "logs" {

bucket = "web-logs"

acl = "private"

versioning {

enabled = false

}

}This is the intended state of infrastructure, committed as the source of truth. Now imagine an engineer logs into the AWS Management Console and enables versioning on the bucket manually. At this point, the live environment has diverged from what the code declares. When terraform plan is executed, Terraform detects the mismatch and highlights the drift:

~ resource "aws_s3_bucket" "logs" {

id = "web-logs"

versioning {

- enabled = false

+ enabled = true

}

}

Terraform proposes reverting the manual change so that the deployed state aligns with the code. If versioning was not intended, Terraform will reset the bucket to match the HCL. If the change was valid, the configuration must be updated in code and merged through Git. At a small scale, this is manageable; at an enterprise scale, across thousands of S3 buckets, IAM policies, and Kubernetes objects, this workflow is the only sustainable way to ensure changes are deliberate, reviewable, and compliant.

At enterprise scale, across thousands of buckets, IAM policies, and Kubernetes objects, the only sustainable approach is to let IaC remain the single source of truth. If the change is valid, it must be codified back into Terraform and merged via Git PRs; if not, it gets rolled back automatically.

Reconciliation should flow back through code, not ad-hoc patches. Whether the drift is a console change, a runtime update, or a policy-driven modification, the fix must be represented in Git. This ensures the next pipeline run doesn’t undo or reintroduce the same issue.

Automated, Auditable Remediation

Manual fixes don’t scale. Remediation should generate pull requests against the IaC repository, giving teams a reviewable history of how drift was resolved. This not only speeds up response but also provides an audit trail that compliance teams can verify.

Codification of Unmanaged Resources

Unmanaged infrastructure cannot remain invisible. Systems should support generating Terraform, Pulumi, or CloudFormation definitions for resources created manually or inherited through acquisitions. Codifying these resources brings them under governance and prevents further drift.

Policy-Linked Enforcement

Drift management must be connected to compliance rules. A drifted IAM policy, for example, isn’t just a mismatch; it’s a potential audit failure. By linking drift detection to frameworks like SOX, GDPR, or HIPAA, enterprises can prioritize remediation based on security and regulatory impact.

Firefly’s Differentiated Approach

Firefly approaches drift as a closed-loop system: detect it as it happens, show engineers exactly what changed, bring unmanaged resources into code, remediate through Git, and tie the entire process back to compliance.

Real-Time Drift Awareness

Drift can’t be left to quarterly audits or nightly scans. Firefly integrates with cloud events and Kubernetes signals so engineers see changes the moment they happen. A console edit in AWS, a Kubernetes manifest patched with kubectl edit, or an Azure Policy modification will appear immediately in Firefly’s Event Center, tagged with who made the change and how it was introduced.

Context Through Side-by-Side Diffs

Knowing drift exists isn’t enough; engineers need to see the exact differences. Firefly presents a live configuration next to the IaC definition, whether it’s a Terraform module, OpenTofu configuration, Helm chart, or Pulumi stack. Instead of parsing a massive terraform plan output, you can see directly: code allows only port 443, live environment exposes 0.0.0.0/0. This shortens root-cause analysis and makes the fix unambiguous.

Codifying Unmanaged Resources

Every enterprise has unmanaged resources, legacy VMs, one-off databases, or services spun up under deadline pressure. These unmanaged assets are the fastest to drift because no pipeline governs them. Firefly’s codification engine generates IaC code for these resources across multiple providers —like Terraform, Pulumi, and OpenTofu —so they can be brought under IaC governance and stop drifting unseen.

Remediation Through GitOps

Traditional drift tools stop at alerts without any remediations, but Firefly goes further by generating pull requests in multiple VCSs like GitHub, GitLab, Azure DevOps, or Bitbucket. Engineers review and merge as they would any other change, keeping IaC the single source of truth. This removes the need for manual console fixes or brittle scripts and creates an auditable history of every remediation.

Compliance-Linked Governance

Drift management is not just about consistency; it’s about risk. Firefly evaluates drift against 600+ prebuilt checks covering SOX, HIPAA, GDPR, and CIS benchmarks, and supports custom policies. This turns an out-of-sync IAM role or Kubernetes configuration into a prioritized compliance issue, not just a technical mismatch. Compliance teams get continuous evidence trails, while engineers see drift in the context of security and governance requirements.

Unified Inventory and Visibility

All of this feeds into Firefly’s unified asset inventory. Engineers and auditors can see codified, unmanaged, ghost, and drifted resources in a single view. This gives platform teams a way to measure IaC coverage, identify hotspots of unmanaged resources, and track how remediation improves compliance and operational hygiene over time.

Drift Management Before vs. After Firefly

Before Firefly

Enterprises often discover drift late, either during an audit or when a Terraform pipeline fails unexpectedly. Detection is fragmented: AWS Config flags one issue, Azure Policy another, and custom scripts attempt to cover the rest. Each of these tools works in its own silo, leaving no unified view of which resources are codified, unmanaged, or drifting out of sync. Remediation ends up being manual, inconsistent, and hard to audit.

To see how this plays out in practice, consider a simple example using Terraform on GCP. A storage bucket and service account are provisioned through code. The bucket baseline in IaC has versioning disabled, no lifecycle rules, and a single label environment=main. Terraform applies cleanly, and the state matches what’s in the repo.

Later, changes are made directly in the GCP console: versioning is enabled, a lifecycle rule is added to move objects to Nearline, and a new label owner=dev is attached. These edits stabilize the workload but never flow back into the code.

On the next terraform plan, Terraform highlights drift:

❯ terraform plan

var.bucket_name

Base name for the GCS bucket (a random suffix will be added automatically). Must be globally unique.

Enter a value: instance-logs

random_id.bucket_suffix: Refreshing state... [id=deWgyA]

google_service_account.main: Refreshing state... [id=projects/sound-habitat-462410-m4/serviceAccounts/drift-sa@sound-habitat-462410-m4.iam.gserviceaccount.com]

google_storage_bucket.main: Refreshing state... [id=instance-logs-75e5a0c8]

Terraform used the selected providers to generate the following execution

plan. Resource actions are indicated with the following symbols:

~ update in-place

Terraform will perform the following actions:

# google_storage_bucket.main will be updated in-place

~ resource "google_storage_bucket" "main" {

id = "instance-logs-75e5a0c8"

name = "instance-logs-75e5a0c8"

# (16 unchanged attributes hidden)

- lifecycle_rule {

- action {

- storage_class = "NEARLINE" -> null

- type = "SetStorageClass" -> null

}

- condition {

- age = 0 -> null

- days_since_custom_time = 0 -> null

- days_since_noncurrent_time = 0 -> null

- matches_prefix = [] -> null

- matches_storage_class = [] -> null

- matches_suffix = [] -> null

- no_age = false -> null

- num_newer_versions = 0 -> null

- send_age_if_zero = true -> null

- send_days_since_custom_time_if_zero = false -> null

- send_days_since_noncurrent_time_if_zero = false -> null

- send_num_newer_versions_if_zero = false -> null

- with_state = "LIVE" -> null

# (3 unchanged attributes hidden)

}

}

~ versioning {

~ enabled = true -> false

}

# (1 unchanged block hidden)

}

Plan: 0 to add, 1 to change, 0 to destroy.Explanation:

- The console’s lifecycle rule is absent in code, so Terraform plans to delete it.

- Versioning is enabled live but set to false in code, so Terraform plans to revert it.

This is a textbook drift scenario:

- Detection without context: The plan flags differences but gives no explanation of who made the change or why.

- Difficult reconciliation: The team must decide whether to accept the console edits into code or let Terraform undo them, risking service impact if the edit was intentional.

- Operational noise: Multiply this by thousands of buckets, databases, and IAM policies across projects, and drift signals become impossible to review in a pipeline.

- Compliance blind spots: Labels like owner introduced manually never flow into cost or compliance reporting. Auditors and finance teams see gaps, while security teams lose visibility into runtime changes.

This example is small, but at an enterprise scale, the problem compounds until IaC pipelines lose credibility. Engineers either override them with manual fixes or delay remediation until drift becomes a disaster.

After Firefly

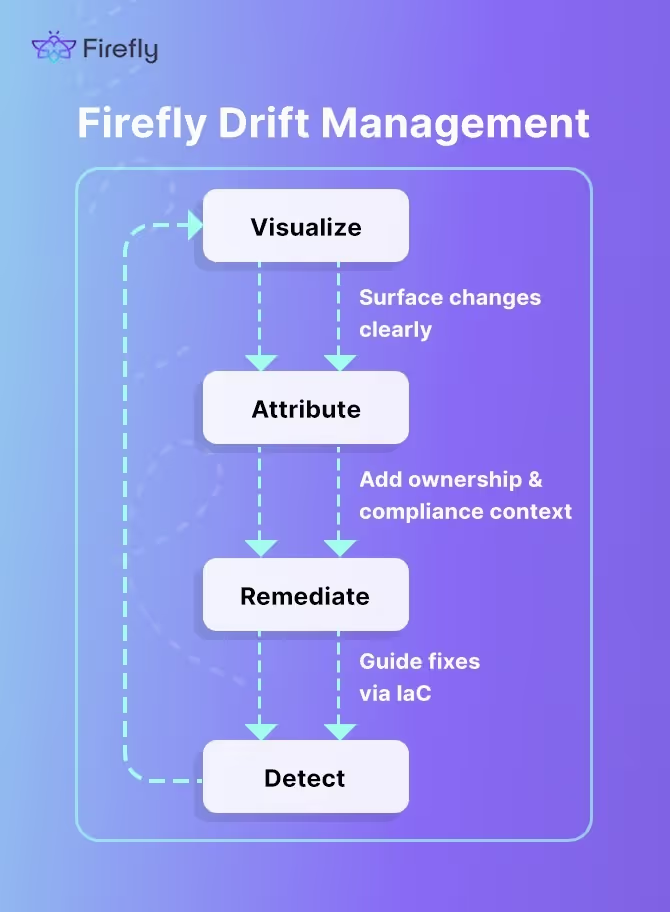

With Firefly, drift management isn’t just about detection; it’s about surfacing changes in real time, adding ownership and compliance context, and guiding remediation through IaC. The platform creates a continuous loop:

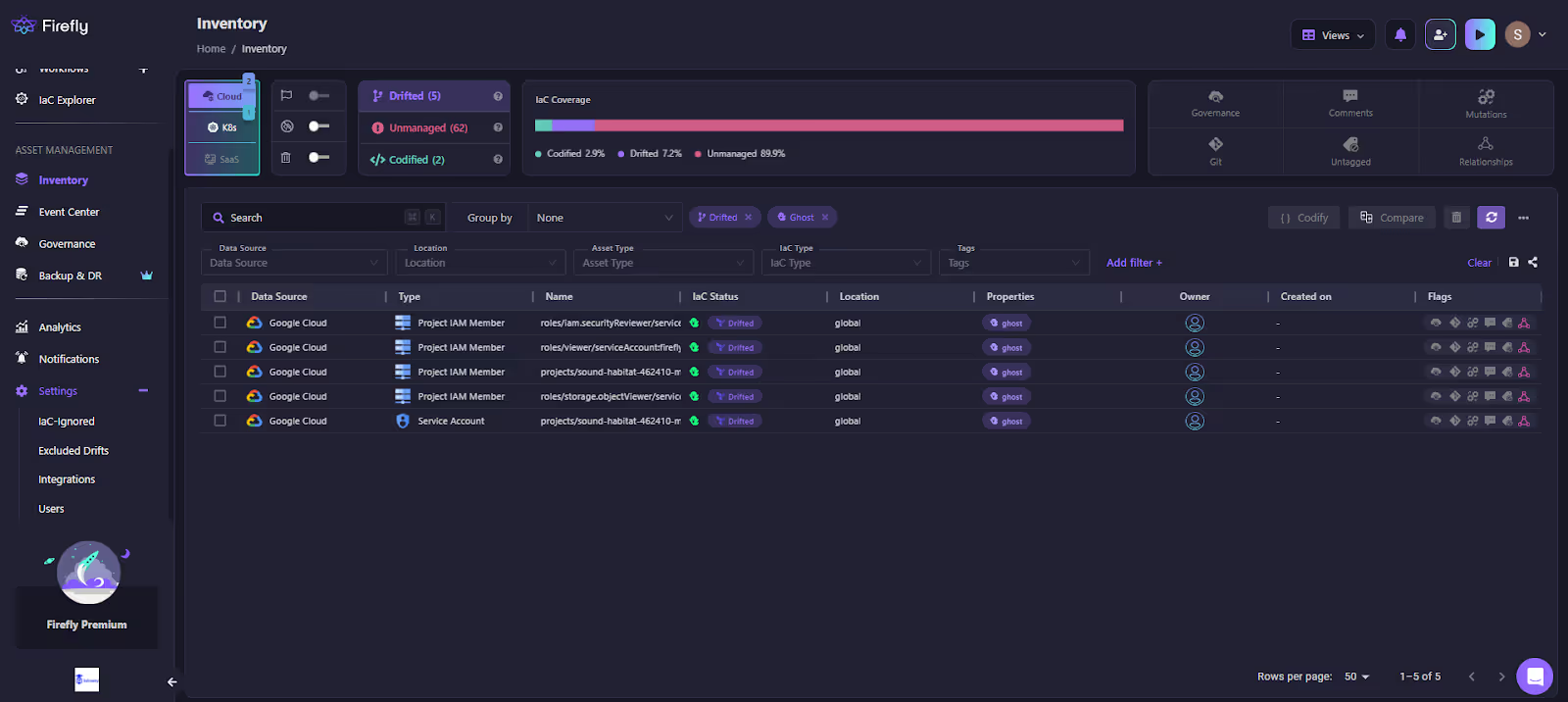

Unified Inventory as the Starting Point

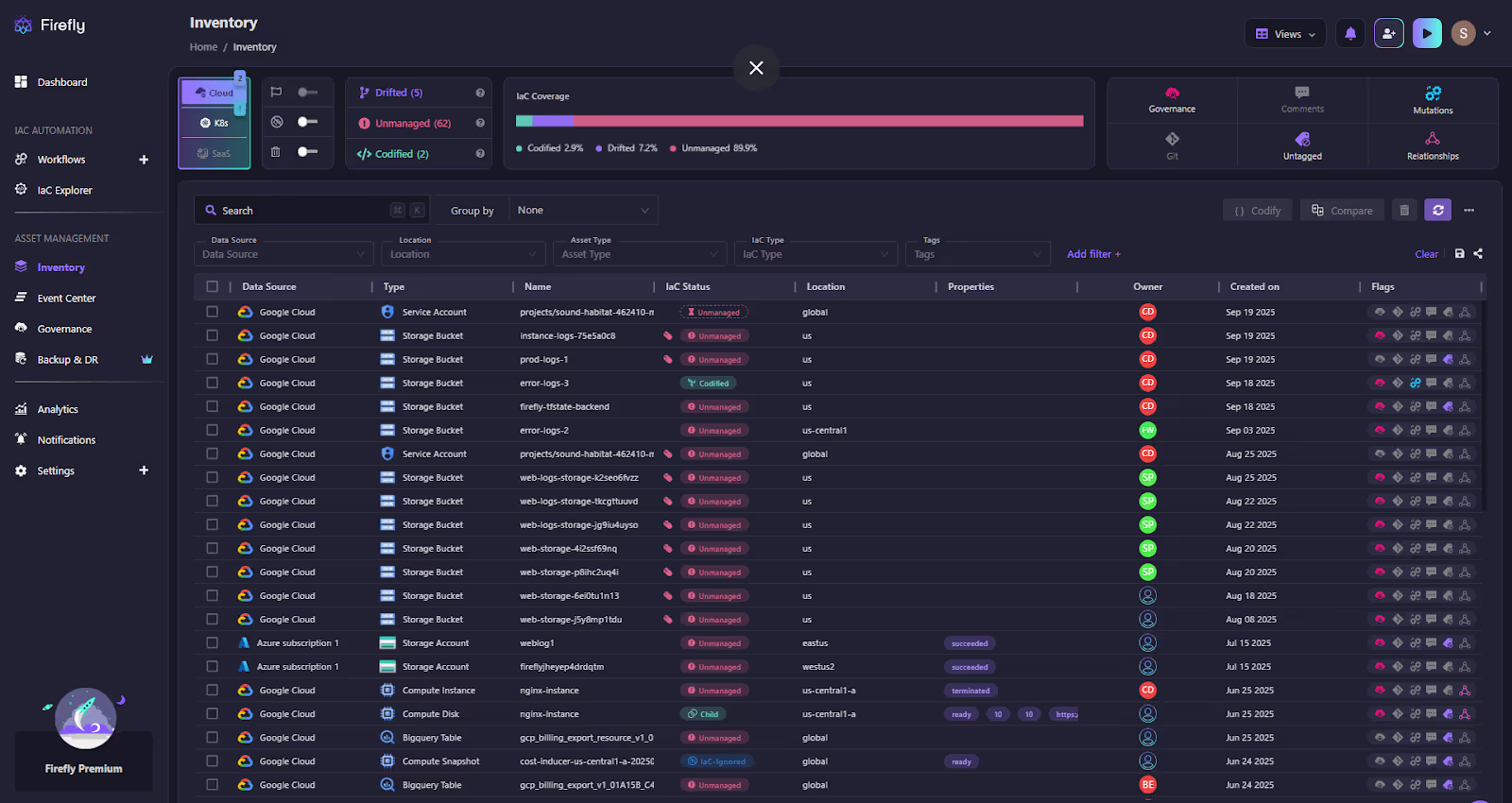

The Inventory dashboard is the control plane for drift. Resources are categorized as codified, unmanaged, or drifted, with coverage quantified at the top (codified 2.9%, drifted 7.2%, unmanaged 89.9%).

As shown in the snapshot below:

This gives platform teams more than just a count. It shows the ratio of infrastructure under governance versus assets at risk. Instead of chasing Terraform plans across projects, engineers know exactly where drift is concentrated and which unmanaged resources need codification.

From Inventory to Resource Detail

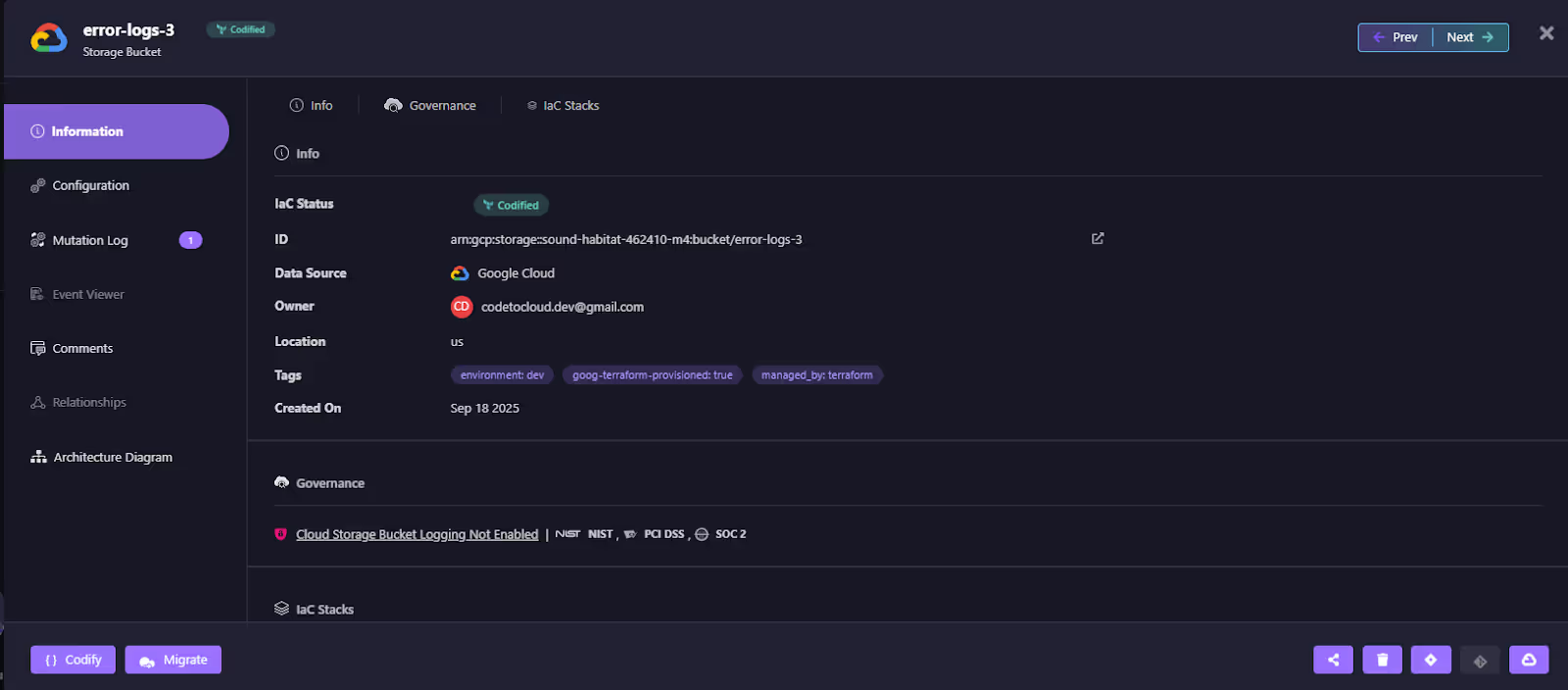

Within the inventory, the bucket error-logs-3 appears as codified, but it also has a flagged mutation. This tells the team the resource is under Terraform, yet has diverged in live configuration. Clicking into the bucket reveals the details: IaC status, owner, tags, and compliance checks.

In the snapshot below:

The bucket is codified but flagged for a compliance violation, logging not enabled. This immediately reframes drift: it’s not just a mismatch, it’s a violation tied to PCI DSS and SOC 2 requirements.

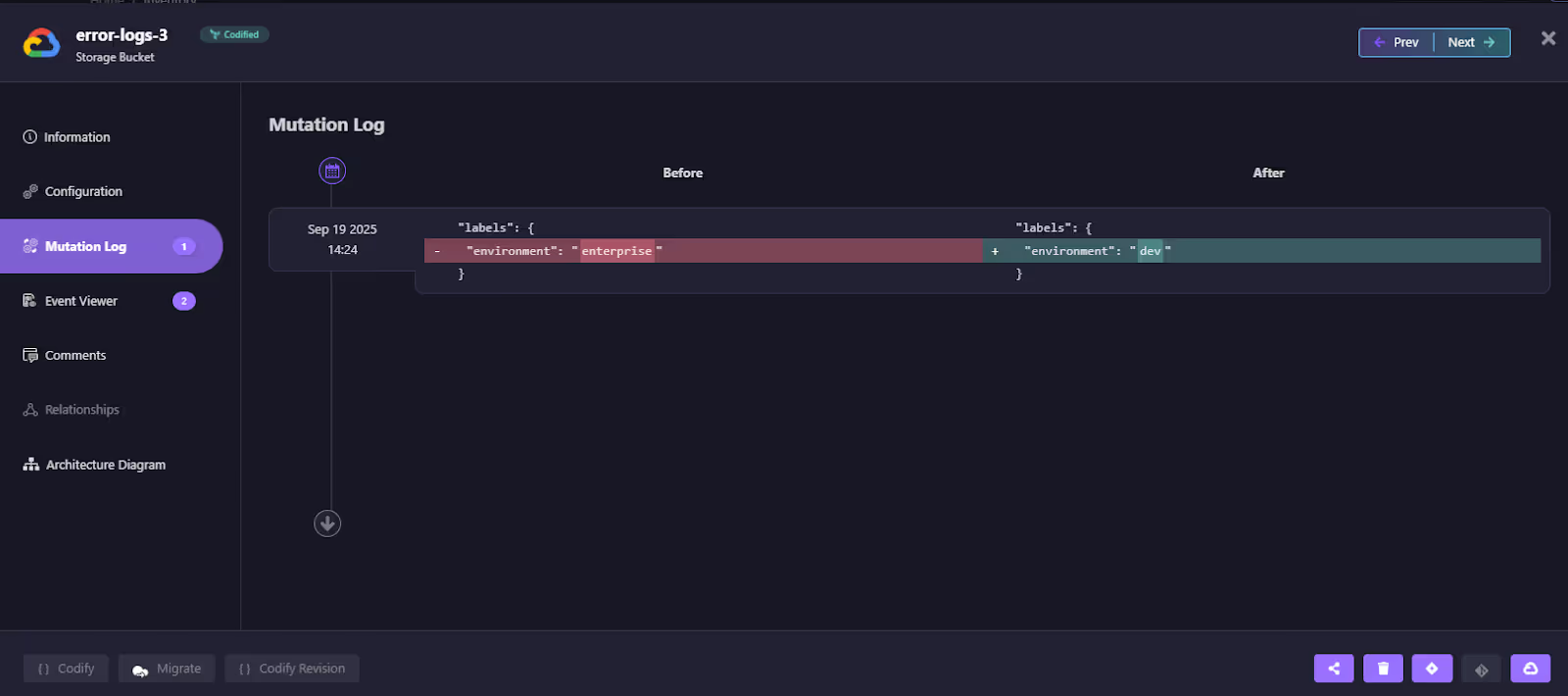

Mutation Log for Exact Drift Diffs

Drilling deeper, the mutation log shows the precise differences between IaC and the live state. The label environment was changed from enterprise to dev in the console. Here’s how the diff is shown in the mutation logs:

Terraform would only have shown this as a plan update. Firefly captures it with before/after values, timestamped, and tied to the resource. This makes runtime edits explicit and auditable.

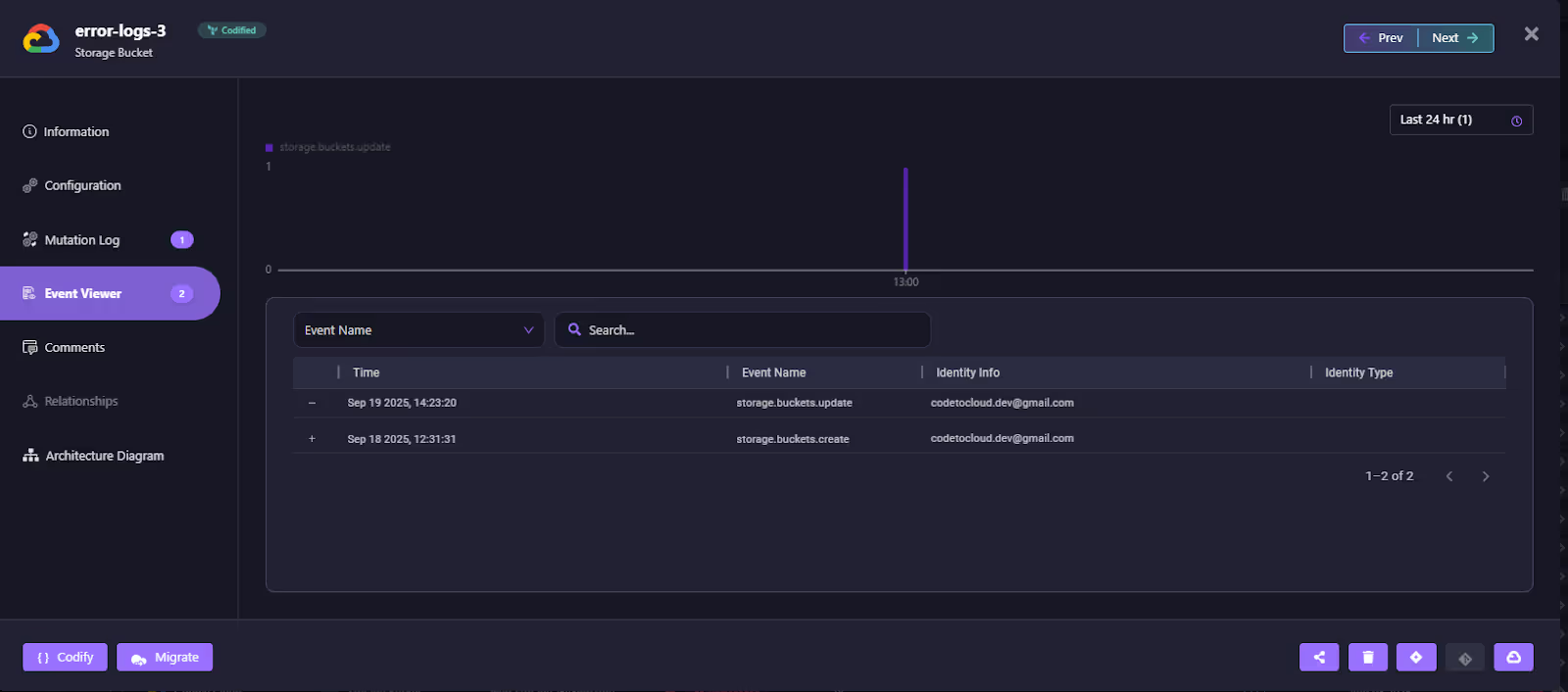

Event Attribution

The event viewer shows who made the change and when. The update to the bucket is linked to a specific identity (codeto********.dev@gmail.com) along with the action type (storage.buckets.update) as shown in the event viewer below:

This closes the attribution gap left by Terraform, where drift is flagged, but no actor is identified.

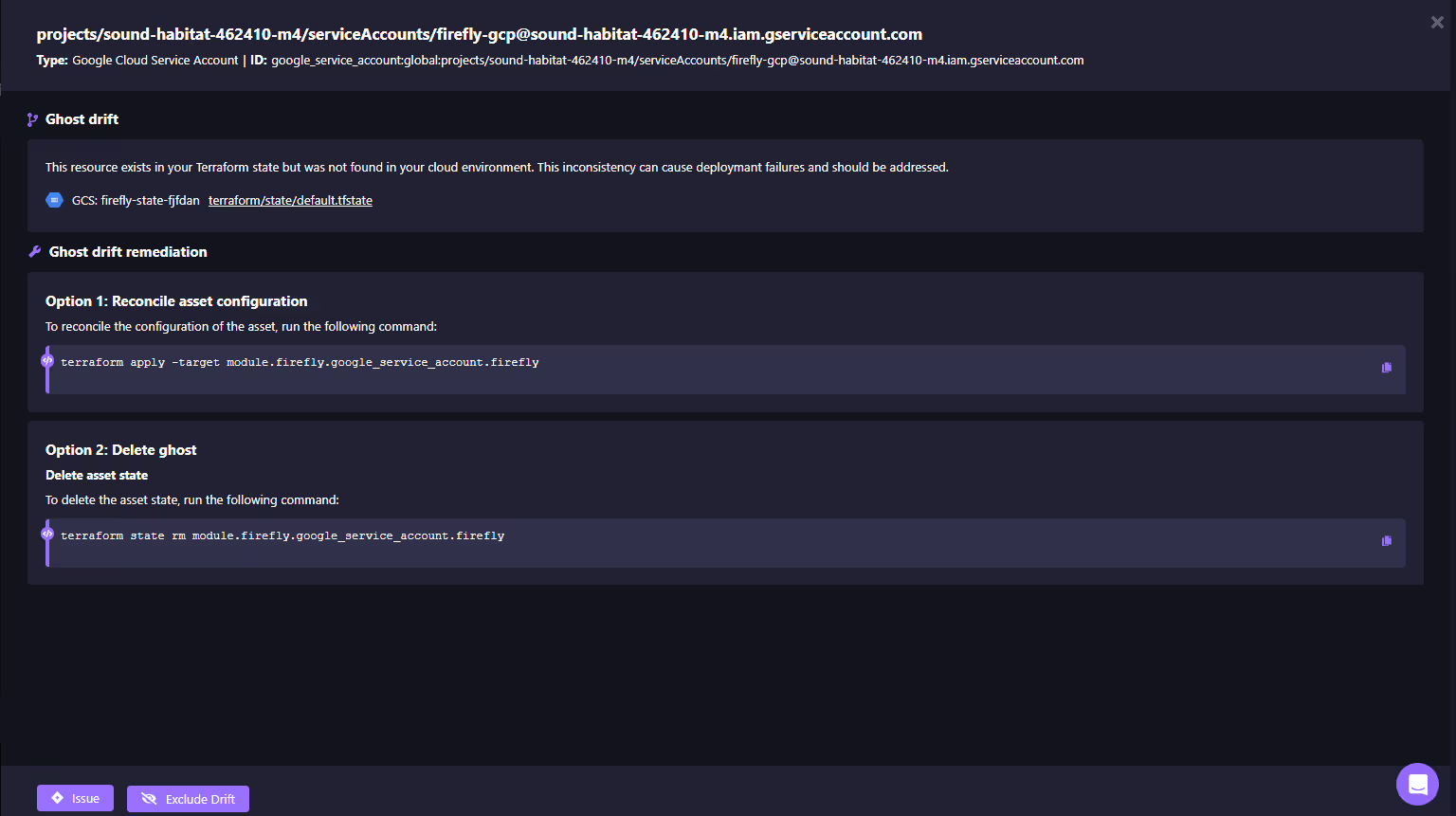

Ghost Resource Handling

Firefly also surfaces ghost resources, assets that exist in Terraform state but not in the cloud. Here's how Firefly flags them:

As shown in the list above, a GCP service account is marked as drifted because it’s still in a state but missing from GCP. Firefly categorizes it explicitly as ghost drift.

Guided Remediation Through IaC

For every drift, Firefly generates clear remediation options.

The ghosted service account can either be re-applied (terraform apply -target) to bring it back, or removed from state (terraform state rm) to clean up as we go through the drift details. Here is how Firefly suggests remediation:

Instead of engineers writing ad-hoc fixes, the exact commands are provided, ensuring consistent, auditable remediation. For configuration drift, Firefly can also generate pull requests with updated Terraform or Pulumi code, so remediation flows through GitOps pipelines and preserves IaC as the source of truth.

Outcomes of Structured Drift Remediation

Instead of parsing noisy Terraform plans and chasing changes across consoles, senior engineers get:

- A unified view of codified vs. unmanaged vs. drifted assets.

- Precise before/after diffs of every change.

- Actor attribution and timestamps for audit trails.

- Compliance mapping of drift to standards like SOC 2 or PCI DSS.

- Guided remediation options, PRs into Git repos, or CLI commands, that keep fixes under version control.

This transforms drift management from reactive firefighting into a structured, auditable process that scales with enterprise complexity.

Operational Gaps in Existing Drift Detection Approaches

In most organizations, the only practical way to surface drift is by running terraform plan. This works for a few stacks, but it quickly breaks down in enterprise environments:

- Slow and noisy plans: Running plans across hundreds of workspaces can take hours. Much of the output is clutter from autoscaling changes, ephemeral runtime updates, or provider defaults, making it hard to isolate meaningful drift.

- No context for changes: A plan shows that a bucket label or IAM policy has changed, but not who made the change or why. Tracing ownership requires digging into cloud audit logs or Slack threads, slowing remediation.

- Blind spots in unmanaged infra: Anything outside Terraform, legacy VMs, SaaS accounts, and Kubernetes objects edited with kubectl is invisible to the plan. These unmanaged resources are usually where drift accumulates fastest.

- Fragmented alternatives: Some teams supplement with AWS Config, Azure Policy, or GCP SCC, but those tools only show drift within their own cloud and don’t tie findings back to IaC repos. Scripts and one-off automations are brittle and partial, creating more overhead than clarity.

Firefly replaces this fragmented, manual approach with a continuous workflow:

- Unified coverage: One inventory across codified, unmanaged, and drifted resources in all clouds and clusters.

- Actionable diffs: Before/after views of every change, so engineers see exactly what diverged without parsing through pages of plan output.

- Attribution: Each drift event is tied to the actor and timestamp, closing the gap between “what changed” and “who changed it.”

- Compliance linkage: Drifted settings are mapped directly to frameworks like SOC 2 or PCI DSS, so issues can be prioritized by regulatory impact.

- Guided remediation: Fixes flow back into code through pull requests or targeted commands, keeping pipelines trustworthy and remediation auditable.

Instead of chasing drift reactively with plans, console checks, and ad-hoc scripts, teams operate in a structured loop where drift is detected in real time, contextualized, and resolved through IaC.

What Enterprises Gain from Drift Control

The impact of drift management is best measured in outcomes: fewer failures, faster recovery, stronger compliance, and reduced waste. Firefly shifts drift management from a reactive cleanup exercise into a continuous control loop, and that translates into tangible results.

Reduced Drift Incidents

With real-time monitoring and guided remediation, teams cut down on the volume of drift that reaches production. Instead of discovering dozens of mismatches in every quarterly audit or pipeline run, drift is surfaced and resolved continuously. Pipelines stay predictable, and Terraform or Helm plans converge reliably.

Faster Remediation Cycles

Drift resolution that once took hours of log digging and manual edits now completes in minutes. Mutation logs, attribution data, and one-click fixes eliminate guesswork. Engineers spend less time triaging plan diffs and more time delivering features.

Continuous Compliance

Auditors no longer rely on static evidence snapshots. Every drift event is logged with before/after state, actor identity, and remediation steps. This creates a continuous audit trail that demonstrates compliance with SOC 2, HIPAA, GDPR, or internal security policies.

Improved Security Posture

Because drift is tied to compliance policies, security-critical changes, such as an open firewall rule or a disabled bucket log, are prioritized and remediated first. Blind spots caused by unmanaged or ghost resources are reduced as those assets are codified and brought under IaC governance.

Operational Efficiency

Firefly reduces the overhead of running large IaC estates. Engineers no longer need to run massive plan jobs just to find drift or maintain brittle scripts across clouds. Time saved here is significant: instead of days spent reconciling pipelines after an audit, remediation happens in real time through GitOps.

Cost Savings

Drift often introduces waste: untagged resources, oversized instances, or orphaned services that continue running unnoticed. By continuously detecting and codifying unmanaged assets, Firefly makes it easier to eliminate ghost resources and enforce cost allocation tags, directly cutting spend. For a deeper understanding of how infrastructure drift impacts costs and how Firefly’s Drift Cost Analysis feature can help mitigate these costs, check out this detailed blog post on Firefly.

FAQs

1. What is IaC drift?

IaC drift happens when the actual state of infrastructure in the cloud doesn’t match what’s defined in Infrastructure-as-Code. For example, if Terraform defines a storage bucket without versioning but someone enables it through the console, the live state diverges from code. Drift leads to unreliable pipelines, failed applications, and compliance gaps if not managed.

2. How to minimize drift?

Drift can be minimized by enforcing GitOps workflows, limiting direct console access, and using policy guardrails at creation time. Regular drift detection tools or event-driven monitoring help catch runtime changes early. The most effective strategy is to codify unmanaged resources and always reconcile fixes back into the IaC repo.

3. What is Terraform drift?

Terraform drift occurs when the live state of infrastructure no longer matches what is defined in Terraform configuration files. This typically occurs when resources are modified outside of Terraform workflows, such as through manual console edits, automated policies, or external processes that alter configurations or delete resources.

4. How do you detect Terraform drift?

Drift can be detected using Terraform commands. Running terraform refresh updates the local state file with the latest information from the cloud provider, and terraform plan then compares this refreshed state against the configuration files. Any differences shown in the plan output highlight where drift has occurred and what actions Terraform would take to reconcile it.