As cloud environments grow, access control becomes one of the hardest problems to get right. It’s not just about spinning up resources anymore; it’s about making sure the right people have the exact level of access they need, without overexposing sensitive systems.

Every cloud provider handles this differently. In Google Cloud, permissions are managed with IAM roles and conditions, or in some cases with legacy ACLs at the object level. GitHub organizations keep it simpler: you have roles like Owner, Member, and Billing Manager that directly map to what actions a person can take across repositories and teams. Different platforms, same idea: control access cleanly and consistently.

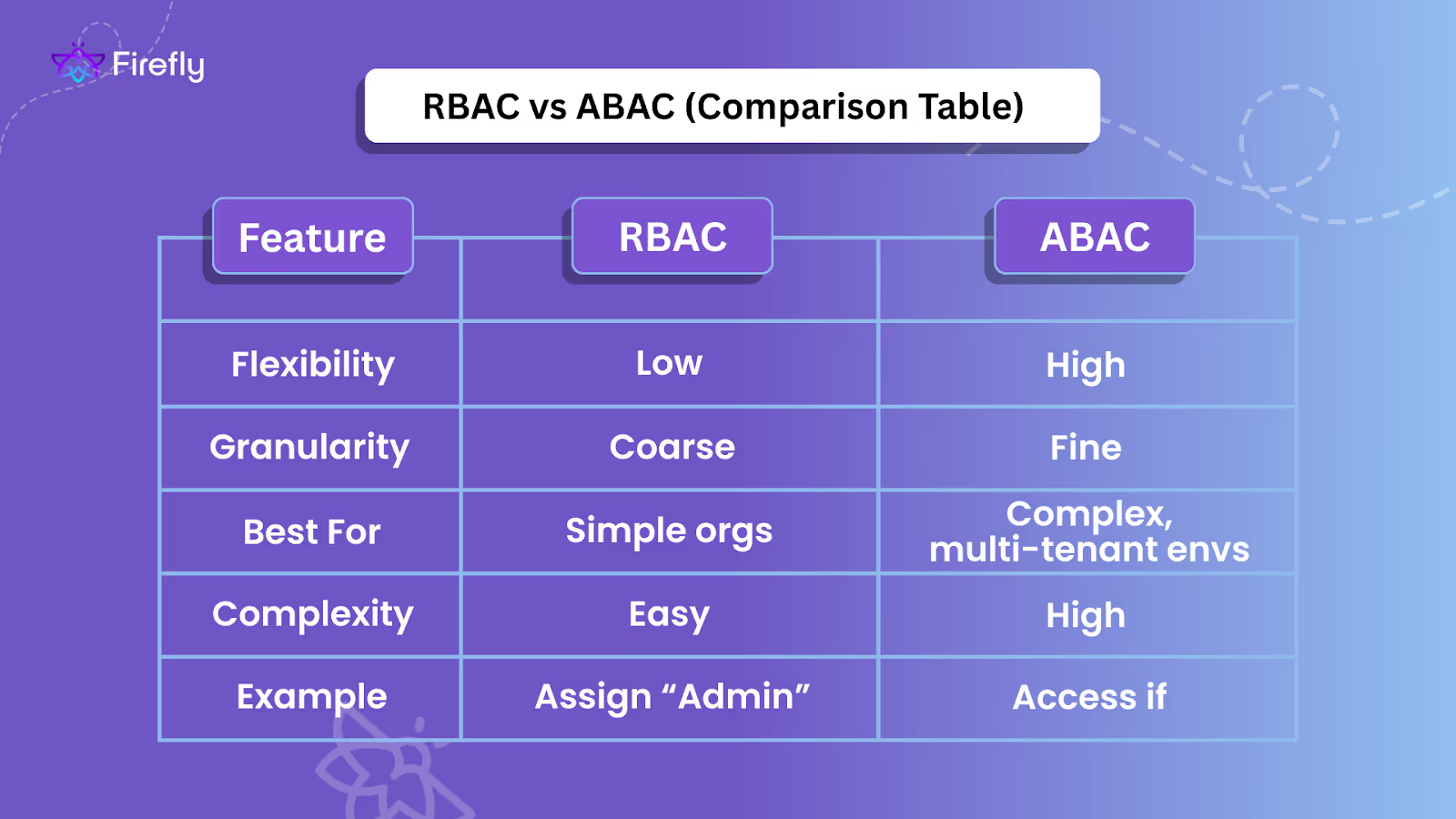

The two main models you’ll run into are Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC). RBAC is role-driven; you assign someone a role, and that role comes with a fixed set of permissions. ABAC goes deeper by evaluating attributes of the user, the resource, and sometimes the environment before granting access.

RBAC is straightforward and easy to use when roles are well-defined. ABAC gives you more flexibility in complex, multi-tenant, or dynamic environments where roles alone don’t scale.

This post breaks down how RBAC and ABAC work in cloud infrastructure, compares where each one fits, shows how to apply them with Terraform, and then looks at how Firefly can take away a lot of the operational overhead of managing these policies. Since RBAC is the model most platforms started with, and the one nearly every engineer has worked with in some form, we’ll begin there before moving on to ABAC.

What is RBAC (Role-Based Access Control)?

At its core, RBAC is a chain: user → role → permissions. A role is just a bundle of allowed actions, and when you assign it to a user, group, or service account, those permissions take effect automatically.
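To make the chain concrete, here is a minimal Python sketch of an RBAC check. The role names, principals, and permission strings are hypothetical (loosely modeled on GCP-style permission names). Real providers evaluate this server-side; the sketch only shows the shape of the lookup.

```python
# Minimal RBAC sketch: principal -> roles -> permissions.
# All role names, principals, and permission strings are hypothetical examples.

ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"storage.objects.get", "storage.objects.list"},
    "storage-admin": {
        "storage.objects.get",
        "storage.objects.list",
        "storage.objects.create",
        "storage.objects.delete",
    },
}

# Bindings can target users, groups, or service accounts alike.
ROLE_BINDINGS: dict[str, set[str]] = {
    "alice@example.com": {"viewer"},
    "ci-bot@example.iam": {"storage-admin"},
}


def is_allowed(principal: str, permission: str) -> bool:
    """Return True if any role bound to the principal includes the permission."""
    for role in ROLE_BINDINGS.get(principal, set()):
        if permission in ROLE_PERMISSIONS.get(role, set()):
            return True
    return False


if __name__ == "__main__":
    print(is_allowed("alice@example.com", "storage.objects.get"))     # True
    print(is_allowed("alice@example.com", "storage.objects.delete"))  # False
```

Notice that is_allowed never asks which bucket or environment is involved. Granting a role is the only lever.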

How RBAC Works in Practice

Different services implement RBAC in slightly different ways, but the pattern stays the same:

- Google Cloud (GCP): You bind a principal to a role like roles/viewer or roles/storage.admin. Each role is a set of API permissions, such as storage.objects.create or storage.objects.delete.

- AWS IAM: Users or services assume IAM roles with attached policies. A role may grant broad access (AdministratorAccess) or be narrowed down to specific services or resources.

- GitHub Organizations: RBAC is applied at the org level. Owners manage everything, Members have repo and team access, and Billing Managers handle billing.

- Google Chronicle: Uses fixed roles like Admin, User, and Viewer, each with tightly scoped permissions inside the platform.

Why RBAC is Useful in the Cloud

RBAC isn’t just convenient; it directly supports key security and operational needs:

- Principle of Least Privilege (PoLP): Users only get the minimum permissions they need, reducing blast radius if accounts are compromised.

- Operational efficiency: Instead of assigning permissions user by user, you manage them at the role level. Adding someone new is just assigning them the right role.

- Scalability: Works the same way whether you’re running a small dev shop or a global enterprise.

- Centralized management: Roles provide a single place to see and adjust who has access to what.

- Compliance and auditing: Regulations like HIPAA, GDPR, and SOX require proof of access control. RBAC simplifies audits by tying access to clearly defined roles.

- Mitigation of insider threats: Tighter privileges mean fewer chances for accidental or malicious data exposure.

- Service-to-service authorization: In microservice-heavy setups, RBAC can also restrict how services talk to each other.

Where RBAC Breaks Down

RBAC works well when environments are stable and responsibilities don’t shift much. But at scale, two issues show up quickly. The first is role sprawl: you end up creating dozens of nearly identical roles (developers with read-only storage in prod, developers with write access in staging, ops with full access, support with read-only EC2, and so on). The second is a lack of context awareness: RBAC doesn’t look at tags, user attributes, or conditions like time of day or source IP address. If a role allows an action, that action is allowed everywhere the role applies.

This is why RBAC works as a starting point but doesn’t hold up well in modern, dynamic cloud setups with many users and resources. That gap is exactly what ABAC was designed to fill.

What is ABAC (Attribute-Based Access Control)?

RBAC gives you fixed roles, but it doesn’t adapt well when teams, projects, or environments keep changing. ABAC addresses that by checking attributes (tags on resources, tags on users, or request context) before allowing an action. Instead of saying “the devs role can write buckets,” you say “allow writes only if the bucket has env=dev and the caller’s team tag is engineering.”
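The example rule can be sketched in a few lines of Python. The attribute keys env and team come from the rule itself. The principal and bucket names are hypothetical, and a real system would read these attributes from tags rather than from in-memory objects.

```python
# Minimal ABAC sketch: the decision depends on attributes, not on a role name.
from dataclasses import dataclass, field


@dataclass
class Principal:
    name: str
    tags: dict[str, str] = field(default_factory=dict)


@dataclass
class Resource:
    name: str
    tags: dict[str, str] = field(default_factory=dict)


def can_write_bucket(principal: Principal, bucket: Resource) -> bool:
    """Allow writes only if the bucket has env=dev and the caller's team tag is engineering."""
    return bucket.tags.get("env") == "dev" and principal.tags.get("team") == "engineering"


if __name__ == "__main__":
    dana = Principal("dana", {"team": "engineering"})
    print(can_write_bucket(dana, Resource("app-logs-dev", {"env": "dev"})))    # True
    print(can_write_bucket(dana, Resource("app-logs-prod", {"env": "prod"})))  # False
```

A bucket created tomorrow with env=dev is covered automatically, and the policy itself never changes.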

ABAC in AWS

- How it works: You tag both IAM principals (users/roles) and AWS resources. A single IAM policy can then allow actions only when the tags match.

- Example: A Secrets Manager policy might allow full CRUD on secrets, but only if the secret’s tags match the principal’s access-project, access-team, and cost-center tags. Engineers can create or update their own project’s secrets, and only read across their team.

- Scaling benefit: You don’t create a new policy every time a new project starts. Just tag the resources and the roles correctly, and the same policy applies.
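In AWS, the tag-matching idea is expressed with the aws:ResourceTag and aws:PrincipalTag condition keys. The Python sketch below builds such a policy document, using the tag keys from the Secrets Manager example.

- The action list is a trimmed, illustrative subset, not the full set of actions needed for CRUD.
- Creating new secrets would also need aws:RequestTag conditions, which are left out here.
- The Sid is a hypothetical name.

```python
import json

# Tag keys from the Secrets Manager example; the action list is an illustrative subset.
MATCHED_TAG_KEYS = ["access-project", "access-team", "cost-center"]


def abac_secrets_policy() -> dict:
    """Build an IAM policy that applies only when resource tags equal the caller's tags."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ManageSecretsForOwnProject",  # hypothetical Sid
                "Effect": "Allow",
                "Action": [
                    "secretsmanager:GetSecretValue",
                    "secretsmanager:PutSecretValue",
                    "secretsmanager:UpdateSecret",
                ],
                "Resource": "*",
                "Condition": {
                    # Multiple keys in one StringEquals block must all match (logical AND).
                    # ${aws:PrincipalTag/...} is an IAM policy variable resolved per request.
                    "StringEquals": {
                        f"aws:ResourceTag/{key}": f"${{aws:PrincipalTag/{key}}}"
                        for key in MATCHED_TAG_KEYS
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(abac_secrets_policy(), indent=2))
```

Because the values are policy variables rather than literals, one policy covers every project. A new project only needs correctly tagged roles and secrets.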

ABAC in GCP

GCP doesn’t have a single “ABAC service,” but you get the same effect by combining features:

- IAM Conditions: Add conditions to a role binding, like “allow only if resource has tag env=dev” or “allow only between 9 am–6 pm”.

- Resource Tags: Tags can be attached at the org, folder, or project level and inherited down. Conditions can check these tags to gate access.

- Access Context Manager (ACM): Adds context checks, like “allow only from corporate IPs” or “only on managed devices.”

- Example: A role binding might let a group act as storage.admin, but only if the bucket has env=dev and the request comes from the corporate network (ACM). Another binding could deny risky actions if the resource is tagged env=prod.
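An IAM Condition is a CEL expression attached to a binding. The Python sketch below assembles a binding in the IAM policy JSON shape, gated on a resource tag and office hours.

- The organization ID, group, condition title, and time zone are placeholders.
- The tag key is referenced by its namespaced name (parent ID plus short name).
- The Access Context Manager part of the example is left out.

```python
import json

ORG_ID = "123456789012"  # hypothetical organization that owns the "env" tag key
TIME_ZONE = "Europe/Berlin"  # hypothetical office time zone


def dev_only_binding(group: str) -> dict:
    """Grant storage.admin to a group, but only on env=dev resources between 9:00 and 18:00."""
    expression = " && ".join(
        [
            f"resource.matchTag('{ORG_ID}/env', 'dev')",
            f'request.time.getHours("{TIME_ZONE}") >= 9',
            f'request.time.getHours("{TIME_ZONE}") < 18',
        ]
    )
    return {
        "role": "roles/storage.admin",
        "members": [f"group:{group}"],
        "condition": {"title": "dev-only-office-hours", "expression": expression},
    }


if __name__ == "__main__":
    print(json.dumps(dev_only_binding("storage-devs@example.com"), indent=2))
```

The role stays a plain predefined role. All of the attribute logic lives in the condition, so the same pattern can deny or narrow access for env=prod without a new custom role.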

Why ABAC Matters

ABAC cuts down policy sprawl by using attributes instead of duplicating roles. A single policy can cover multiple teams and projects, and new resources are included automatically when tagged correctly. It also adds context awareness, allowing access to depend on the environment, network, or device, something RBAC alone cannot handle.

To move from theory into practice, the next step is to see how RBAC looks when codified in Terraform, where roles and bindings are tracked, versioned, and enforced consistently across cloud providers.

Implementing RBAC with Terraform

Talking about RBAC in theory is one thing, but most of us enforce it in Terraform. Having roles and bindings in code means you can track who has access, roll back mistakes, and stop people from creating one-off assignments in the console. Let’s look at how this plays out in AWS, Azure, and GCP with real config examples.

AWS: IAM role for a read-only ops team

In AWS, the RBAC building block is an IAM role. You bind permissions (policies) to it and decide who can assume it. Instead of writing JSON inline everywhere, I usually use a module to keep the setup consistent across accounts.

Here’s a role that gives the ops team read-only access to EC2 and CloudWatch, plus the ability to list S3 buckets. The trust policy lets GitHub Actions (through its OIDC provider) assume it:

```hcl
module "ops_readonly_role" {
  source      = "tf-mod/rbac-role/aws"
  name        = "ops-readonly"
  description = "Read-only access for ops dashboards"

  assume_role_principals = [
    {
      type        = "Federated"
      identifiers = ["arn:aws:iam::123456789012:oidc-provider/token.actions.githubusercontent.com"]
    }
  ]

  policy_statements = [
    {
      sid    = "AllowReadOnly"
      effect = "Allow"
      actions = [
        "ec2:Describe*",
        "s3:ListAllMyBuckets",
        "cloudwatch:ListMetrics",
        "cloudwatch:GetMetricData"
      ]
      resources = ["*"]
    }
  ]
}
```

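This saves you from creating ad-hoc read-only users or copying JSON by hand. You check this into Git, review it like any other code, and reuse it across dev/test/prod accounts. If the ops team needs more actions, you add them here and push a PR. One thing to watch: a trust policy that names only the GitHub OIDC provider lets workflows from any GitHub repository request the role. In practice you also pin the token.actions.githubusercontent.com:sub claim to your own repository; how you pass that condition depends on the module’s inputs.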
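For reference, here is a Python sketch of the trust policy JSON a GitHub OIDC role typically needs.

- The audience condition and the sub pin are the two conditions such a role normally needs.
- The account ID and repository name are hypothetical.
- Whether the tf-mod/rbac-role/aws module renders exactly this document, or accepts these conditions as inputs, is an assumption to check against the module’s documentation.

```python
import json

# Hypothetical values: replace with your account ID and GitHub org/repo.
ACCOUNT_ID = "123456789012"
GITHUB_REPO = "my-org/ops-dashboards"


def github_oidc_trust_policy(account_id: str, repo: str) -> dict:
    """Trust policy that lets only workflows from one repository assume the role."""
    provider = "token.actions.githubusercontent.com"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    # Audience used by aws-actions/configure-aws-credentials by default
                    "StringEquals": {f"{provider}:aud": "sts.amazonaws.com"},
                    # Pin the subject claim to one repository (any branch or event)
                    "StringLike": {f"{provider}:sub": f"repo:{repo}:*"},
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(github_oidc_trust_policy(ACCOUNT_ID, GITHUB_REPO), indent=2))
```

Narrowing the sub pattern further (for example to a single branch) shrinks who can assume the role even more.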

Azure: role assignment with a condition

In Azure, RBAC works the same way at a high level: you assign a principal to a role on a scope. But Azure also lets you add conditions to fine-tune those permissions. That’s useful when you don’t want to create a whole new role just for “read only if env=dev.”

Here’s how you let a group read blob data, but only for blobs that carry the blob index tag env=dev:

```hcl
resource "azurerm_role_assignment" "storage_reader_dev_only" {
  scope                = azurerm_storage_account.sa.id
  role_definition_name = "Storage Blob Data Reader"
  principal_id         = azuread_group.dev_readers.object_id
  condition_version    = "2.0"

  # The condition is an expression string, not JSON.
  # Blob reads (other than listing) are allowed only when the blob's index tag env is 'dev'.
  # Any action the first clause does not match passes through unchanged.
  condition = <<-EOT
    (
      (
        !(ActionMatches{'Microsoft.Storage/storageAccounts/blobServices/containers/blobs/read'} AND NOT SubOperationMatches{'Blob.List'})
      )
      OR
      (
        @Resource[Microsoft.Storage/storageAccounts/blobServices/containers/blobs/tags:env<$key_case_sensitive$>] StringEquals 'dev'
      )
    )
  EOT
}
```

Without the condition, you’d either give this group read access to every blob in the account (too broad) or create a bunch of duplicate roles (ugly to maintain). With the condition, the assignment is self-filtering: reads succeed only for blobs whose env index tag is dev, while listing is left alone. The trick is keeping your tag taxonomy consistent, because the condition only works if the tag key and value are exactly right; the tag key is matched case-sensitively.
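Azure role-assignment conditions generally follow an “if this action, then this attribute check” shape. Actions the condition targets must pass the check, and everything else passes through untouched. The small Python model below assumes a condition that gates blob reads (excluding list operations) on an env=dev blob index tag. Azure performs the real evaluation, so this is only an illustration of the logic.

```python
# Illustrative model of "!(ActionMatches{read} AND NOT SubOperationMatches{Blob.List}) OR tags:env == 'dev'".
GATED_ACTION = "Microsoft.Storage/storageAccounts/blobServices/containers/blobs/read"


def condition_allows(action: str, blob_index_tags: dict[str, str], is_list: bool = False) -> bool:
    """Return True if the role assignment's condition lets this request through."""
    action_is_gated = action == GATED_ACTION and not is_list
    # Actions the condition does not target are not restricted by it.
    if not action_is_gated:
        return True
    # Tag keys are compared case-sensitively, so "Env" would not match "env".
    return blob_index_tags.get("env") == "dev"


if __name__ == "__main__":
    print(condition_allows(GATED_ACTION, {"env": "dev"}))   # True
    print(condition_allows(GATED_ACTION, {"env": "prod"}))  # False
```

The negated first clause is what makes the condition safe to attach to a broad role: it only narrows the actions it names.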

GCP: binding a group to a GKE Hub scope role

On GCP, RBAC shows up differently depending on what you’re working with. For Kubernetes fleets, you can manage fleet-wide RBAC through GKE Hub (fleet) scopes. This lets you define who can act across a set of clusters without touching each one manually.

Here’s a binding that gives a Google Group “apps-viewers@example.com” the view role across everything in the “apps” scope:

```hcl
resource "google_gke_hub_scope" "apps_scope" {
  scope_id = "apps"
  project  = var.project_id
}

resource "google_gke_hub_scope_rbac_role_binding" "apps_viewers" {
  project                    = var.project_id
  scope_rbac_role_binding_id = "apps-viewers"
  scope_id                   = google_gke_hub_scope.apps_scope.scope_id

  # The Google Group that receives the role
  group = "apps-viewers@example.com"

  # Predefined roles are ADMIN, EDIT, and VIEW; VIEW corresponds to Kubernetes "view"
  role {
    predefined_role = "VIEW"
  }
}
```

This is pure Kubernetes-style RBAC, not IAM Conditions. The idea is simple: instead of binding view on each cluster, you bind once at the scope. If a new cluster joins the scope, the group already has access. That’s good for consistency across many clusters.
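The inheritance behavior can be shown with a deliberately simplified Python model. It ignores fleet namespaces and membership bindings, and the cluster names are hypothetical.

```python
# Simplified model of scope-level RBAC: bindings attach to the scope, and every
# cluster in the scope inherits them. Namespaces and membership bindings are ignored.
from dataclasses import dataclass, field


@dataclass
class Scope:
    name: str
    clusters: set[str] = field(default_factory=set)
    bindings: dict[str, str] = field(default_factory=dict)  # group -> role

    def effective_access(self, group: str) -> dict[str, str]:
        """Role the group holds on each cluster in the scope (empty if unbound)."""
        role = self.bindings.get(group)
        return {cluster: role for cluster in sorted(self.clusters)} if role else {}


apps = Scope("apps", clusters={"gke-eu-1", "gke-us-1"})
apps.bindings["apps-viewers@example.com"] = "view"
apps.clusters.add("gke-asia-1")  # a cluster joins later; no new binding is created
print(apps.effective_access("apps-viewers@example.com"))
# {'gke-asia-1': 'view', 'gke-eu-1': 'view', 'gke-us-1': 'view'}
```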

Enforcing ABAC in GitHub Actions with OPA

This section provides a step-by-step walkthrough of implementing ABAC policies within GitHub Actions. Using OPA and Conftest, the workflow validates who is raising the pull request and allows only approved users to proceed with running terraform plan.

First, define the Rego policy, which specifies which GitHub actors are allowed to trigger Terraform. Save this as policy/abac.rego:

```rego
package terraform.abac

default allow_plan = false

# Approved GitHub actors
allowed_users = {"byteBardShivansh", "senior-dev"}

# Allow plan only if the actor is in the allowed set
allow_plan {
    allowed_users[input.github.actor]
}

# Conftest only evaluates deny/warn/violation rules, so expose the same decision as a deny message
deny[msg] {
    not allow_plan
    msg := sprintf("GitHub actor %q is not authorized to run terraform plan", [input.github.actor])
}
```

In this policy:

- default allow_plan = false ensures the plan is denied unless explicitly allowed.

- The allow_plan rule looks up the GitHub actor (input.github.actor) in the allowed_users set.

- Only actors defined in allowed_users can trigger terraform plan; all others are blocked.

- The deny rule restates the same decision in the form Conftest expects, so the Conftest step fails for unapproved actors too.

GitHub Actions workflow

Create .github/workflows/terraform-plan.yml to run this policy before any Terraform command:

```yaml
name: Terraform Plan with ABAC

on:
  pull_request:
    branches:
      - main

jobs:
  plan:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Install Conftest
        run: |
          wget https://github.com/open-policy-agent/conftest/releases/download/v0.34.0/conftest_0.34.0_Linux_x86_64.tar.gz
          tar xzf conftest_0.34.0_Linux_x86_64.tar.gz
          sudo mv conftest /usr/local/bin

      - name: Install OPA
        run: |
          wget https://openpolicyagent.org/downloads/v0.40.0/opa_linux_amd64_static
          chmod +x opa_linux_amd64_static
          sudo mv opa_linux_amd64_static /usr/local/bin/opa

      - name: Create Workflow Context File
        # jq builds the JSON so the actor name is always escaped correctly
        run: |
          jq -n --arg actor "$GITHUB_ACTOR" '{github: {actor: $actor}}' > workflow_context.json

      - name: Run OPA Policy Check
        id: opa_check
        run: |
          result=$(opa eval --format=json --input workflow_context.json --data policy/abac.rego "data.terraform.abac.allow_plan")
          allowed=$(echo "$result" | jq -r '.result[0].expressions[0].value')
          if [ "$allowed" != "true" ]; then
            echo "User is not authorized to run Terraform Plan."
            exit 1
          fi

      - name: Run Conftest Policy Check
        # The policy lives in package terraform.abac, not Conftest's default "main" namespace
        run: conftest test workflow_context.json --policy policy/abac.rego --namespace terraform.abac

      - name: Set up Terraform
        uses: hashicorp/setup-terraform@v3

      - name: Terraform Init and Plan
        if: success()
        env:
          GCP_PROJECT_ID: ${{ secrets.GCP_PROJECT_ID }}
          GCP_CREDENTIALS_JSON: ${{ secrets.GCP_CREDENTIALS_JSON }}
        run: |
          echo "$GCP_CREDENTIALS_JSON" > gcp-key.json
          terraform init
          terraform plan -var="gcp_project_id=$GCP_PROJECT_ID" -var="gcp_credentials_file=$(pwd)/gcp-key.json"
```

Workflow breakdown

- Checkout Code: Pulls repository content, including Terraform configs and Rego policies.

- Install Conftest and OPA: Adds the tools needed to evaluate Rego policies.

- Create Workflow Context File: Generates workflow_context.json containing the GitHub actor that triggered the run.

- Run OPA Policy Check: Evaluates abac.rego. If the actor isn’t approved, the workflow exits with an error before Terraform runs.

- Run Conftest Policy Check: Evaluates the deny rule in the terraform.abac namespace as a second check of the same policy.

- Set up Terraform: Installs the Terraform CLI on the runner.

- Terraform Init and Plan: Executes only if both policy checks pass, ensuring that only approved GitHub actors can run infrastructure plans.

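This setup ties authorization to a user attribute (the GitHub actor) instead of static roles. By enforcing ABAC in the pipeline, unauthorized contributors can still raise pull requests, but their changes never reach terraform plan or apply. Keep in mind that a pull_request workflow runs the workflow and policy files from the pull request itself, so a contributor who edits policy/abac.rego is evaluated against the edited policy. Protect the cloud credentials as well, for example by scoping the secrets to a GitHub environment that requires approval.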

Comparative Analysis: RBAC vs ABAC

RBAC and ABAC both control access, but the way they scale and what they look at under the hood is very different. The right fit depends on the type of workloads and how fast things change in the environment.

Flexibility

- RBAC: In AWS, managed policies like ReadOnlyAccess or AmazonEC2FullAccess, or your own custom policies, are attached to IAM roles to bundle permissions. Once a user or service assumes one of these roles, the permissions apply everywhere in the account. For example, if a developer’s role has AmazonEC2FullAccess, they can start or stop any EC2 instance in that account, regardless of environment.

- ABAC: In AWS or GCP, policies can reference attributes like tags (env=dev, team=qa) or request context (ip=corp-vpn). A policy might say “allow s3:GetObject only if the bucket has tag env=dev and the principal’s team tag is qa.” The same policy automatically applies to any new dev bucket with that tag; there’s no need to create a new role.

Granularity

- RBAC: Permissions are coarse. A role like roles/storage.admin in GCP or Storage Blob Data Contributor in Azure grants access to every matching resource within the scope where it’s assigned, whether production or development.

- ABAC: Access is fine-grained. In Azure, a role assignment can include a condition such as “allow blob reads only if the blob index tag environment=dev is present.” This means a QA engineer can read dev blobs but not prod data, even though they hold the same broad role definition.

Implementation Complexity

- RBAC: Easy to start with. Assign viewer, editor, and admin roles in GCP or IAM roles in AWS, and the model works. But over time, teams need special cases such as “read-only on prod buckets but write access in staging.” This leads to role sprawl: dozens of custom roles with overlapping permissions.

- ABAC: Harder to implement cleanly. Requires a disciplined tagging strategy (env, cost-center, owner) and careful policy design. Debugging access issues is trickier; a denied request might be caused by a missing tag on the resource or a mismatch between user attributes and resource tags.
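Because a denial can come from either side of a tag comparison, it helps to check both sides explicitly when debugging. The Python helper below is hypothetical and not a provider API. It reports, per tag key, why a match-based ABAC policy would deny; cloud providers’ own denial messages are usually less specific, so this comparison often ends up being done by hand.

```python
# Hypothetical debugging helper for match-based ABAC policies (not a provider API).

def explain_denial(principal_tags: dict[str, str], resource_tags: dict[str, str],
                   keys: list[str]) -> list[str]:
    """Return one reason per tag key that would make a tag-matching policy deny."""
    reasons = []
    for key in keys:
        if key not in resource_tags:
            reasons.append(f"resource is missing tag '{key}'")
        elif key not in principal_tags:
            reasons.append(f"principal is missing tag '{key}'")
        elif principal_tags[key] != resource_tags[key]:
            reasons.append(
                f"tag '{key}' mismatch: principal={principal_tags[key]!r}, "
                f"resource={resource_tags[key]!r}"
            )
    return reasons


if __name__ == "__main__":
    for reason in explain_denial({"env": "dev", "owner": "qa"}, {"env": "prod"}, ["env", "owner"]):
        print(reason)
```

An empty list means every compared tag matched. A denial at that point has to come from something else, such as the role itself or an explicit deny.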

Use Cases

- RBAC:

  - AWS accounts with well-separated environments (one account per stage), where each team can just assume dev-admin or prod-readonly.

  - GCP projects with small, stable teams where predefined roles like roles/viewer or roles/editor are enough.

  - Environments where auditors want a clear mapping: “this user has this role with these permissions.”

- ABAC:

  - Multi-project or multi-tenant GCP orgs where the same engineers jump between dev, test, and prod projects. Policies use IAM Conditions with resource.tag.env to allow or deny access dynamically.

  - AWS environments where S3 buckets and EC2 instances are spun up often. ABAC policies can enforce “developers can only modify resources tagged with their own project name” without writing a new role for each project.

  - Azure subscriptions where the same app team runs dev and prod resources under one account. A single role assignment with conditions can gate prod changes more tightly than dev.

Putting It Together

RBAC is best at providing a baseline set of permissions through fixed roles like admin, viewer, or dev-ops-role. It works well when responsibilities are stable and environments are separated.

ABAC complements that by applying context-driven restrictions based on attributes such as resource tags (env=dev, team=finance), user identity attributes, or request context (source IP, device). This makes ABAC effective in fast-moving cloud setups where resources, projects, and teams are created frequently.

Most real-world cloud environments don’t choose one over the other. They layer the two:

- RBAC establishes the broad capability: who can be an editor, viewer, or admin.

- ABAC adds the precision: which environments, which resources, and under what conditions those permissions can actually be used.

This layered approach balances manageability with flexibility and is the model used in AWS IAM (roles + tag conditions), GCP (IAM roles + IAM Conditions/Tags), and Azure (role assignments + conditional expressions).
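The layering can be sketched as two sequential checks in Python. The role names, actions, and the “prod writes require team ownership” rule are all hypothetical, chosen only to show how an attribute check narrows what a role already grants.

```python
# Layered check: RBAC decides what a role may do at all; ABAC narrows where it applies.
# Roles, actions, and the prod ownership rule are hypothetical examples.

ROLE_ACTIONS: dict[str, set[str]] = {
    "viewer": {"read"},
    "editor": {"read", "write"},
}


def layered_allow(role: str, action: str, principal: dict[str, str], resource: dict[str, str]) -> bool:
    # Layer 1 (RBAC): the role must include the action.
    if action not in ROLE_ACTIONS.get(role, set()):
        return False
    # Layer 2 (ABAC): writes to prod additionally require the caller's team to own the resource.
    if action == "write" and resource.get("env") == "prod":
        owner = resource.get("team")
        return owner is not None and principal.get("team") == owner
    return True


if __name__ == "__main__":
    print(layered_allow("editor", "write", {"team": "payments"}, {"env": "prod", "team": "payments"}))  # True
    print(layered_allow("editor", "write", {"team": "search"}, {"env": "prod", "team": "payments"}))    # False
```

The owner-is-not-None guard matters: without it, an untagged prod resource and an untagged caller would “match” and slip through.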

Simplifying RBAC and ABAC Management with Firefly

RBAC

Firefly enforces Role-Based Access Control (RBAC) at the Project level using the firefly_project_membership resource. Projects are organizational units that act as boundaries for managing Workspaces, Variable Sets, Guardrails, and user access.

In this example, we’ll define a CRM Project in Firefly and assign specific users with roles (admin or member), ensuring that only authorized users can view or trigger workspace runs inside that project.

Start by creating a Terraform configuration that provisions:

- A Firefly Project (CRM).

- An Admin user (full access).

- A Member user (limited access).

This policy-driven configuration is stored in main.tf:

```hcl
terraform {
  required_providers {
    firefly = {
      source  = "gofireflyio/firefly"
      version = "~> 0.0.2"
    }
  }
  required_version = ">= 1.5.0"
}

provider "firefly" {
  access_key = var.firefly_access_key
  secret_key = var.firefly_secret_key
  api_url    = "https://api.firefly.ai"
}

# Create a Firefly project
resource "firefly_workflows_project" "crm_project" {
  name        = "CRM"
  description = "Test project"
  labels      = ["demo", "testing"]
}

# Admin membership
resource "firefly_project_membership" "admin" {
  project_id = firefly_workflows_project.crm_project.id
  user_id    = "Shivansh"
  email      = "shivansh@infrasity.com"
  role       = "admin"
}

# Member membership
resource "firefly_project_membership" "member" {
  project_id = firefly_workflows_project.crm_project.id
  user_id    = "Shan"
  email      = "shan@infrasity.com"
  role       = "member"
}
```

Configure Access Keys Securely

To authenticate Terraform with Firefly, define the credentials in variables.tf:

```hcl
variable "firefly_access_key" {
  type        = string
  description = "Firefly access key"
  sensitive   = true
}

variable "firefly_secret_key" {
  type        = string
  description = "Firefly secret key"
  sensitive   = true
}
```

Tip: Store these values in your CI/CD secrets or a secure backend like Vault.

Deploy the Configuration

Run the following commands to deploy:

```shell
terraform init
terraform plan
terraform apply -auto-approve
```

Once applied, Firefly provisions the CRM project and enforces the defined RBAC rules.

How RBAC Works After Deployment

Here’s what happened after the successful apply:

1. Project Creation

- A CRM project is created in Firefly.

- It becomes the RBAC boundary for:

  - Workspaces

  - Variable Sets

  - Runner Pools

  - Guardrails

- Any resource created under this project is only visible and accessible to assigned users.

2. Role Assignments

Admin: Shivansh

- Role: admin

- Permissions:

  - Full control of the CRM project.

  - Can create/edit/delete workspaces.

  - Can add/remove members.

  - Can attach variable sets and configure guardrails.

  - Can trigger and approve runs.

Member: Shan

- Role: member

- Permissions:

  - Can view workspaces, logs, and plans.

  - Can trigger workspace runs.

  - Cannot modify project configurations or add/remove users.

3. RBAC Enforcement

- If you create a workspace inside the CRM project:

  - Shivansh (admin) → can configure, run, and manage it.

  - Shan (member) → can trigger runs and view logs, but not change configuration.

  - Any other user not assigned → cannot see or access this workspace.

- Variable Sets attached to the CRM project are only visible to Shivansh and Shan.

- Guardrails applied at the project level enforce policy checks automatically on all workspaces.

Firefly’s Role in ABAC

Firefly extends its governance capabilities beyond RBAC by supporting Attribute-Based Access Control (ABAC). With ABAC, access decisions are made based on resource attributes, user attributes, or tags, enabling fine-grained security policies.

Firefly provides two options for implementing ABAC:

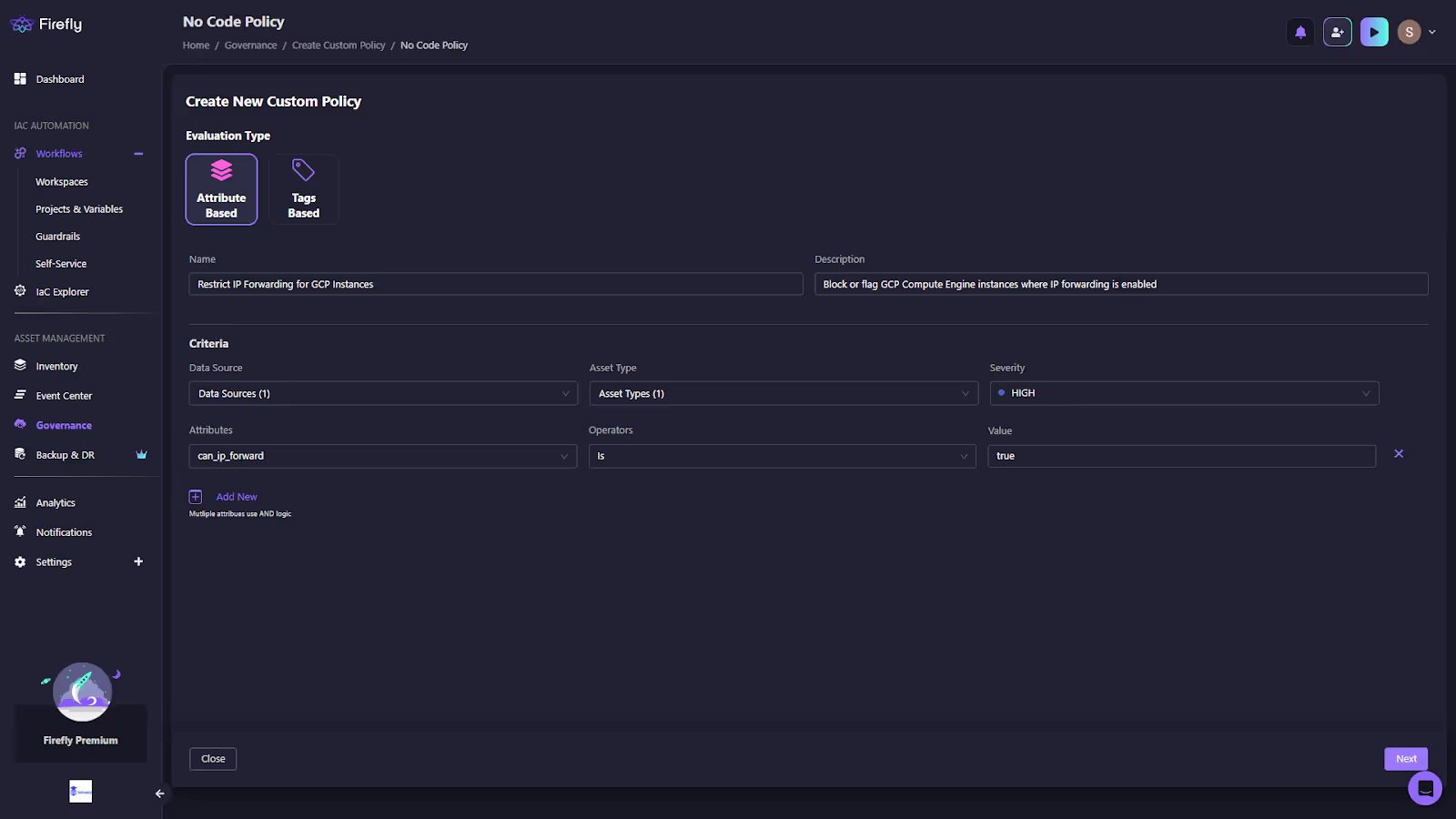

1. No-Code Policy Builder (Recommended for Security & Compliance Teams)

The No-Code Policy Builder in Firefly allows teams to create and enforce governance rules without writing code. It supports two policy flows:

- Attribute-Based Policies: Define rules based on resource properties (e.g., encryption status, IP forwarding, public access).

- Tags-Based Policies: Enforce required tags or validate specific tag values (e.g., owner, environment, cost-center).

Restricting IP Forwarding for GCP Instances

We want to ensure that no GCP Compute Engine instances are created or managed with IP forwarding enabled (can_ip_forward = true) unless they’ve been explicitly approved. This helps prevent unauthorized network routing and enforces a security baseline.

Start by navigating to Governance → + Custom Policy in the Firefly dashboard and selecting No-Code Policy Builder with the Attribute-Based option.

Fill in the policy details to clearly define its purpose and scope as shown in the snapshot below:

After defining the basic details, configure the evaluation criteria that drive the policy logic. Here, the attribute can_ip_forward is selected, the operator is set to Is, and the value is true. This ensures the policy applies specifically to GCP VM instances where IP forwarding is enabled.

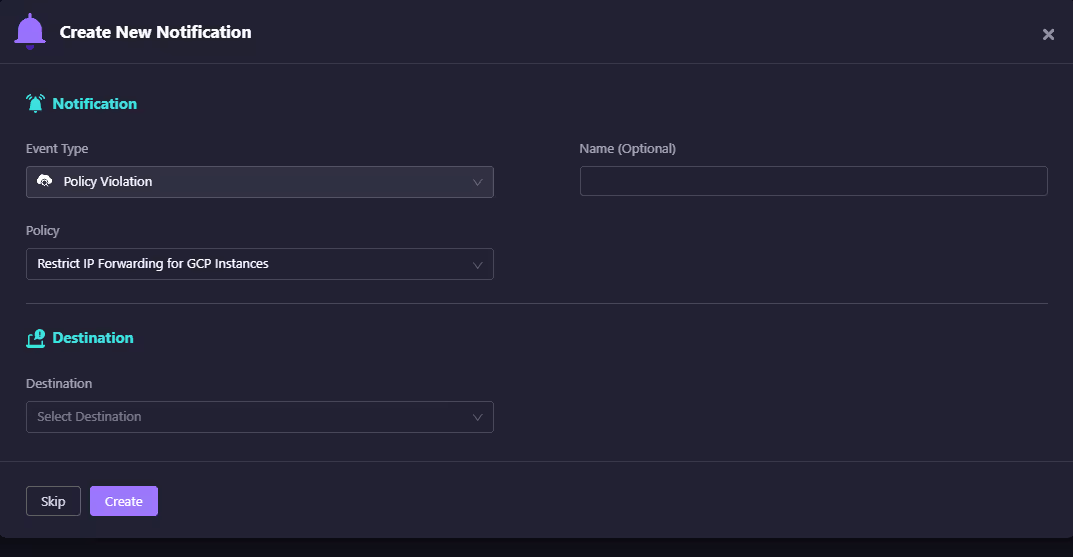

Notification setup:

Firefly also supports real-time notifications whenever a violation occurs, as shown in the snapshot below:

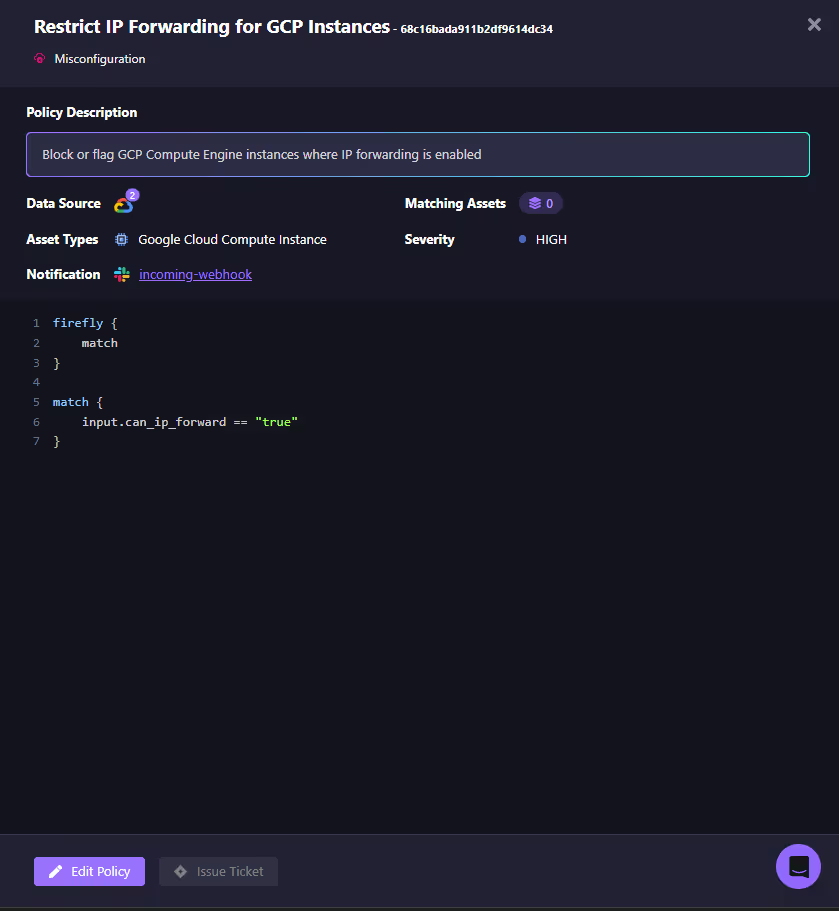

You can route alerts to collaboration and ticketing tools such as Slack, or send them by email, so your teams are informed immediately when a misconfigured resource is detected.

Once the policy is created, Firefly automatically generates the Rego-based rule for you. This provides transparency into the enforcement logic:

- If the attribute can_ip_forward is true, the resource matches the violation criteria.

- The policy will flag or block the asset depending on the enforcement mode.

How the Policy Works

In this policy, any GCP Compute Engine instance where can_ip_forward is set to false or where the attribute is not configured at all will be allowed without any restrictions. However, if the instance has can_ip_forward explicitly set to true, it will be blocked or flagged based on the chosen enforcement mode, ensuring that only compliant resources are deployed or managed within the environment.
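The decision logic described above can be sketched in Python. This is an illustrative model only, not the Rego that Firefly generates. The asset dictionary shape and the type string google_compute_instance are hypothetical simplifications.

```python
# Illustrative model of the IP-forwarding rule; not Firefly's generated Rego.
# The asset shape and type name are hypothetical simplifications.

def ip_forward_violation(asset: dict) -> bool:
    """Flag GCP instances only when can_ip_forward is explicitly true."""
    if asset.get("type") != "google_compute_instance":
        return False
    # False or a missing attribute are both compliant.
    return asset.get("attributes", {}).get("can_ip_forward") is True


if __name__ == "__main__":
    vm = {"type": "google_compute_instance", "attributes": {"can_ip_forward": True}}
    print(ip_forward_violation(vm))  # True
```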

FAQs

1. What is the difference between RBAC and ABAC?

RBAC (Role-Based Access Control) grants permissions based on a user’s role (e.g., Admin, Developer, Viewer). ABAC (Attribute-Based Access Control) uses attributes like resource tags, user departments, or environments to decide access. RBAC is static and role-driven, while ABAC is dynamic and context-driven.

2. What is the difference between Databricks RBAC and ABAC?

In Databricks, RBAC (Role-Based Access Control) assigns permissions based on predefined roles, making it ideal for environments where responsibilities are clearly defined and relatively static. ABAC (Attribute-Based Access Control), on the other hand, offers greater flexibility by granting or restricting access dynamically based on attributes such as region, project, data sensitivity, or user properties. While RBAC is simpler to manage, ABAC provides fine-grained and scalable access control, making it better suited for dynamic and complex Databricks environments.

3. What is the difference between RBAC and ABAC security models for private cloud?

In a private cloud, RBAC defines access based on fixed roles like Admin or Developer at the cluster or namespace level. ABAC goes further by evaluating attributes such as labels, tags, or user properties for fine-grained control. Best practice: use RBAC for baseline permissions and ABAC for conditional access.

4. What is RBAC and ABAC in IAM?

Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC) are two widely used methods for implementing access control in organizations. RBAC grants or denies access based on a user's role within the company, such as Admin, Developer, or Viewer, making it straightforward and easy to manage. In contrast, ABAC determines access dynamically by evaluating various attributes, such as user department, resource tags, environment, or request context. While RBAC offers a simpler, role-driven model, ABAC provides fine-grained, conditional control, making it more suitable for complex and dynamic environments.
