AI workloads are driving one of the fastest infrastructure expansions we have seen in years. Gartner projects global IT spend to reach $5.43 trillion in 2025, with data center systems growing by over 40%, fueled mainly by demand for GPU-optimized capacity. This growth is translating directly into larger, more complex estates on AWS, Azure, and GCP, and most of these deployments are now being standardized through Terraform.

All three hyperscalers are pushing official Terraform support for their AI platforms. Google documents full Vertex AI provisioning through the Google provider, covering datasets, endpoints, Feature Store, Vector Search, and Workbench. Microsoft publishes examples using AzureRM and AzAPI to create Azure AI Foundry projects, resource groups, and GPT-based model deployments. AWS provides a Bedrock reference stack written entirely in Terraform, covering agent lifecycle automation, knowledge bases, OpenSearch Serverless, Lambda orchestration, and S3 integrations.

HashiCorp’s partner ecosystem reinforces this direction. Terraform integrates directly with AWS SageMaker, Bedrock, and Lex, Azure Machine Learning and AI Foundry, and GCP Vertex AI. On the technology side, partnerships with Databricks, Aisera, and CloudFabrix extend Terraform into data platforms and AIOps.

The pattern is clear: as AI spending accelerates, the scale and frequency of Terraform deployments grow with it. Teams are not experimenting; they are standing up production-grade GPU clusters, storage pipelines, and AI endpoints across clouds, all codified and managed through Terraform with the same plan-review-apply workflow used for core infrastructure.

Terraform for GenAI Infrastructure

As AI workloads scale, using Terraform to manage infrastructure has become crucial for consistency and control. Terraform helps automate the creation and management of resources across AWS, GCP, and Azure, making it easier to provision and maintain infrastructure for AI workloads.

Here are some of the key platforms where Terraform is commonly used for provisioning and managing AI infrastructure:

AWS

1. SageMaker: Amazon SageMaker is a fully managed service for building, training, and deploying AI models. Terraform helps automate the setup of SageMaker environments. With Terraform, you can define and create resources like SageMaker domains, models, endpoint configurations, and endpoints. This removes manual steps and ensures environments are reproducible and consistent.

For example, the following Terraform code provisions a SageMaker AI app (like a Jupyter notebook server) where users can interact with the model:

```hcl
resource "aws_sagemaker_app" "example" {
  domain_id         = aws_sagemaker_domain.example.id                      # Reference to the SageMaker domain
  user_profile_name = aws_sagemaker_user_profile.example.user_profile_name # User profile linked to the app
  app_name          = "example"                                            # Name of the app
  app_type          = "JupyterServer"                                      # Type of app, here a Jupyter notebook server
}
```

- domain_id links the app to a specific SageMaker domain, which is a shared environment.

- user_profile_name assigns a profile for a specific user.

- app_name defines the name of the app.

- app_type specifies the app type (in this case, a JupyterServer for data science work).

With this, SageMaker environments are easily set up and repeatable.

2. Bedrock: Amazon Bedrock gives you access to pre-built foundation models for generative AI tasks. Terraform lets you reference these models in your configuration so that other resources can consume them.

Here's an example of how you would look up a Bedrock foundation model in Terraform:

```hcl
# List the foundation models available in the current region
data "aws_bedrock_foundation_models" "test" {}

data "aws_bedrock_foundation_model" "test" {
  model_id = data.aws_bedrock_foundation_models.test.model_summaries[0].model_id # Model ID for the foundation model
}
```

Explanation:

- model_id refers to a specific model from Amazon Bedrock that you want to use. Here it is taken from the first entry returned by the aws_bedrock_foundation_models data source.

- This data source only reads model metadata; it does not deploy anything. It makes it easy to pass a pre-trained model's ID and ARN to the other resources in your Terraform setup.

GCP

1. Vertex AI: Google's Vertex AI is a platform that helps you build, train, and deploy AI models at scale. Terraform simplifies the setup of Vertex AI endpoints, making it easy to deploy and scale models automatically.

For instance, this example shows how to set up a Vertex AI index for efficient AI data searches:

```hcl
resource "google_vertex_ai_index" "index" {
  display_name = "test-index"     # Name of the index
  region       = "us-central1"    # Region where the index will be deployed
  description  = "index for test" # Description of the index

  metadata {
    contents_delta_uri = "gs://${google_storage_bucket.bucket.name}/contents" # Location of the data in a Cloud Storage bucket

    config {
      dimensions                  = 2                      # Dimensions of the vectors used in the index
      approximate_neighbors_count = 150                    # How many neighbors to search for in the index
      shard_size                  = "SHARD_SIZE_SMALL"     # Size of the shards (parts of the index)
      distance_measure_type       = "DOT_PRODUCT_DISTANCE" # Type of distance used in search
    }
  }

  index_update_method = "BATCH_UPDATE" # Method for updating the index (batch updates)
}
```

Explanation:

- display_name and description define basic properties of the index.

- contents_delta_uri points to the Cloud Storage location where the data for the index is stored.

- dimensions specifies the number of dimensions in each vector (used for searches).

- approximate_neighbors_count and distance_measure_type define how the model finds similar data points.

This setup automates the creation of an index to quickly search for similar data points in large datasets, which is essential for AI tasks like recommendation systems.

Azure

1. Azure AI Foundry: Azure AI Foundry is a platform for building and deploying AI models. With Terraform, you can automate the creation of AI Foundry resources like projects and deployments. The following Terraform code provisions an AI Foundry resource for building and deploying models:

```hcl
resource "azapi_resource" "ai_foundry" {
  type                      = "Microsoft.CognitiveServices/accounts@2025-06-01"
  name                      = "aifoundry${random_string.unique.result}" # Unique name for the resource
  parent_id                 = azurerm_resource_group.rg.id              # The resource group where the Foundry resource will reside
  location                  = var.location                              # The region for deployment
  schema_validation_enabled = false

  body = {
    kind = "AIServices" # Specifies this as an AI services resource

    sku = {
      name = "S0" # SKU for the resource (service tier)
    }

    identity = {
      type = "SystemAssigned" # Uses Azure's system-assigned identity for authentication
    }

    properties = {
      allowProjectManagement = true                                      # Enables project management in the AI Foundry
      customSubDomainName    = "aifoundry${random_string.unique.result}" # Custom subdomain for the Foundry resource
    }
  }
}
```

Explanation:

- name sets a unique name for the AI Foundry resource using a random string.

- parent_id links this resource to a specific Azure resource group.

- sku defines the pricing tier for the AI Foundry.

- allowProjectManagement enables management of AI projects in the Foundry environment.

This Terraform configuration automates the creation of an AI Foundry instance, ready to build and deploy models such as GPT for large-scale AI applications.

2. OpenAI Models on Azure: Terraform can also provision and manage OpenAI model deployments on Azure. The example below uses the AzureRM provider's azurerm_cognitive_deployment resource to deploy the OpenAI GPT-4o model into the AI Foundry resource:

```hcl
resource "azurerm_cognitive_deployment" "aifoundry_deployment_gpt_4o" {
  depends_on = [
    azapi_resource.ai_foundry # Ensures the AI Foundry resource is created first
  ]

  name                 = "gpt-4o"                     # Name of the deployment
  cognitive_account_id = azapi_resource.ai_foundry.id # Links to the AI Foundry resource

  sku {
    name     = "GlobalStandard" # Pricing tier
    capacity = 1                # Number of units to deploy
  }

  model {
    format  = "OpenAI"     # Specifies the use of OpenAI models
    name    = "gpt-4o"     # Model name (GPT-4o)
    version = "2024-11-20" # Model version
  }
}
```

Explanation:

- cognitive_account_id links the deployment to an AI Foundry instance.

- model specifies the details of the model being deployed (GPT-4o in this case), including the version.

This ensures that OpenAI models are easily deployed and managed across your Azure environment using Terraform.

Automating Google Cloud Storage Bucket Creation with Terraform

Following the discussion on how Terraform helps automate the deployment of infrastructure resources for GenAI workloads, it’s time to take a practical approach and see how it works in action. In this hands-on section, Terraform will be used to provision a Google Cloud Storage (GCS) bucket. The example will use a Python script (main.py) to generate Terraform HCL based on user input. If OpenAI or LangChain is unavailable, the script will switch to interactive mode, allowing users to manually input the necessary details for the bucket configuration. This script not only automates the generation of the Terraform configuration but also deploys it, eliminating the need for engineers to manually write or apply the configuration.

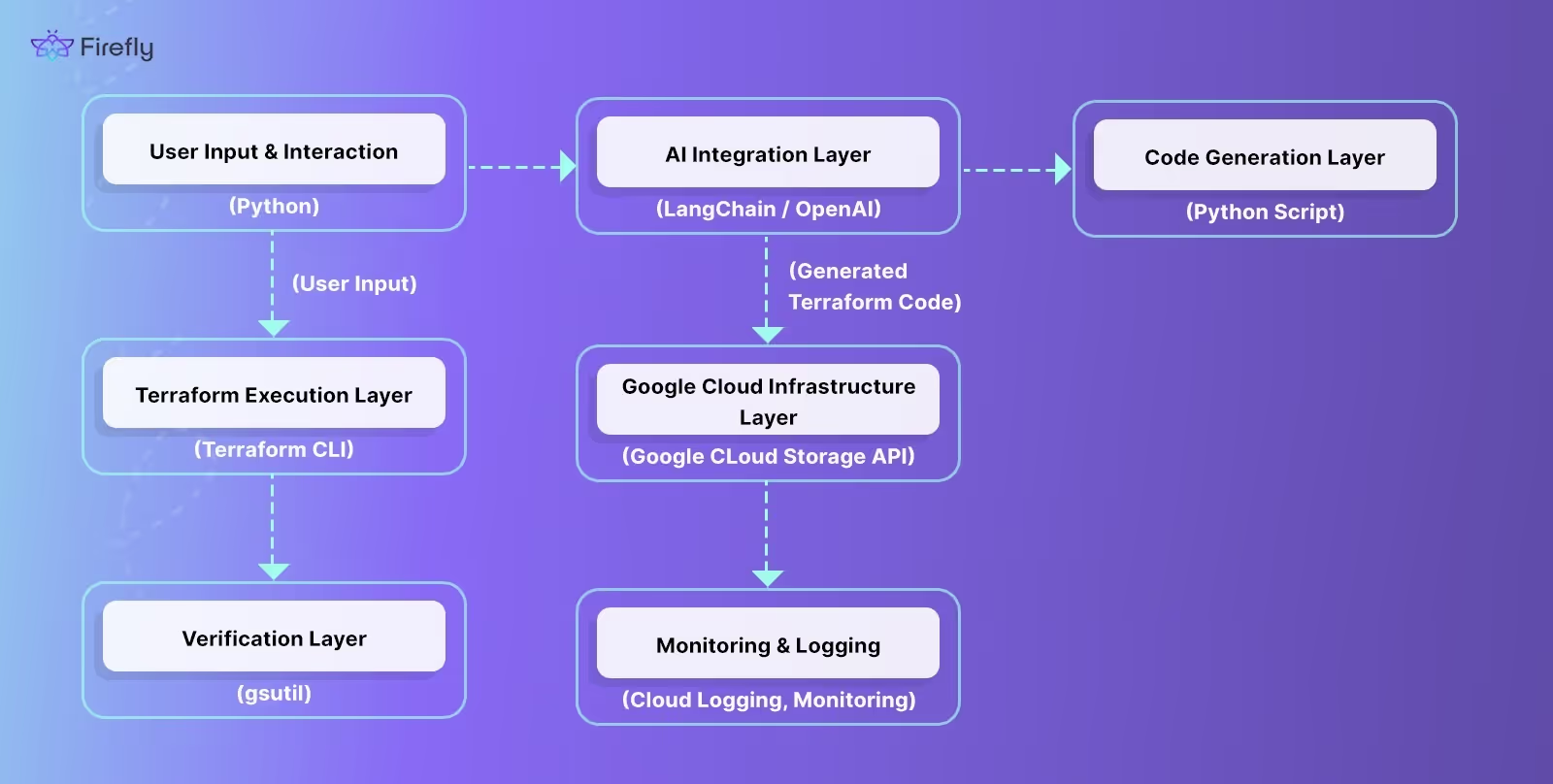

System Architecture Overview

To better understand the automation process, refer to the diagram below, which illustrates the high-level architecture of the system:

The diagram visualizes how different layers interact to automate the provisioning of a GCS bucket, from user input to actual deployment using Terraform. Here's how each layer functions:

- User Input & Interaction Layer (Python): This layer collects the user input, either through a prompt or an interactive mode. The user describes the desired GCS bucket configuration (e.g., enable versioning, set location, etc.). If OpenAI or LangChain is available, it will help auto-generate the Terraform configuration; otherwise, the script switches to manual input.

- AI Integration Layer (LangChain/OpenAI): In case OpenAI or LangChain is available, this layer generates the Terraform code based on the user's description of the GCS bucket. It uses AI models to interpret the input and create the necessary Terraform configuration.

- Code Generation Layer (Python Script): Once the configuration is generated (either by AI or manually through the interactive mode), this Python script generates the Terraform HCL (HashiCorp Configuration Language) code required to provision the GCS bucket.

- Terraform Execution Layer (Terraform CLI): After generating the Terraform configuration, the Terraform CLI is invoked to initialize, format, plan, and apply the infrastructure changes. This is where the actual provisioning happens.

- Google Cloud Infrastructure Layer (Google Cloud Storage API): Terraform communicates with the Google Cloud API to create the actual GCS bucket based on the configuration. The Terraform provider for Google Cloud ensures that the configuration matches the desired state.

- Verification Layer (gsutil): After the bucket has been created, the verification step uses the gsutil tool to list all GCS buckets and confirm that the new bucket exists and is correctly configured.

- Monitoring & Logging Layer (Cloud Logging/Monitoring): Continuous monitoring ensures the infrastructure is healthy. Google Cloud’s native logging and monitoring tools are used to track events, errors, and resource usage.
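The choice between the AI path and the interactive path described in the first two layers can be sketched as a small check at startup. This is a minimal illustration, not the article's actual main.py: the function names are hypothetical, and it assumes the script treats the AI layer as usable only when an `OPENAI_API_KEY` environment variable is set and the `langchain` and `openai` packages can be imported.

```python
import importlib.util
import os


def ai_mode_available(env=None, required_modules=("langchain", "openai")):
    """Return True only if an API key is set and every AI library is importable."""
    env = os.environ if env is None else env
    has_key = bool(env.get("OPENAI_API_KEY", "").strip())
    # find_spec returns None for a top-level module that is not installed
    has_libs = all(
        importlib.util.find_spec(name) is not None for name in required_modules
    )
    return has_key and has_libs


def choose_mode(env=None, required_modules=("langchain", "openai")):
    """Pick 'ai' when the AI layer is usable, otherwise fall back to 'interactive'."""
    return "ai" if ai_mode_available(env, required_modules) else "interactive"


if __name__ == "__main__":
    print(f"Running in {choose_mode()} mode")
```

Keeping the decision in one function means the rest of the script never needs to know why the AI layer was skipped; it only branches on the returned mode.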

Execution:

Before running the script, ensure that you have the necessary environment set up. First, verify that Terraform is installed by running:

```bash
terraform --version
```

If Terraform is not installed, follow the instructions on HashiCorp’s website to install it.

To run the Python script, you'll need to set up the necessary Python libraries. Install them using the command:

```bash
pip install langchain openai python-dotenv
```

These libraries are needed for interacting with LangChain and OpenAI (if available), and for loading environment variables from a .env file (the PyPI package that provides this is python-dotenv) so that credentials stay out of the script itself.

Google Cloud Credentials

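Ensure that you have the Google Cloud SDK installed and authenticated to manage GCS buckets:

```bash
gcloud auth login
```

Terraform's Google provider reads Application Default Credentials, so you may also need to run `gcloud auth application-default login`. Make sure your account has the Storage Admin role to create and manage storage resources.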

Run the Python Script

Once your environment is set up, execute the Python script (main.py) by running the following command in your terminal:

```bash
python main.py
```

The script will prompt you for a description of the GCS bucket you wish to provision. For example:

```text
Describe the GCS bucket to provision: deploy a GCS bucket with versioning enabled
```

Based on the description provided, the script will proceed to the next steps, generating Terraform code for the resource.
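When the AI layer answers in chat-style text, the script has to separate the HCL from any surrounding commentary before writing it to disk. The sketch below shows one way to do that, assuming the model wraps its code in a fenced block; `extract_hcl` and `looks_like_bucket_config` are hypothetical helpers and are not taken from the original main.py.

```python
import re

FENCE = "`" * 3  # three backticks, built this way to keep the example readable

# Match an opening fence with an optional language tag, then capture up to the closing fence
_FENCED_BLOCK = re.compile(
    re.escape(FENCE) + r"[\w-]*[ \t]*\n(.*?)" + re.escape(FENCE), re.DOTALL
)


def extract_hcl(response_text):
    """Return the first fenced block in a model reply, or the whole reply if unfenced."""
    match = _FENCED_BLOCK.search(response_text)
    body = match.group(1) if match else response_text
    return body.strip() + "\n"


def looks_like_bucket_config(hcl):
    """Cheap sanity check only; `terraform validate` remains the real test."""
    has_resource = 'resource "google_storage_bucket"' in hcl
    balanced = hcl.count("{") == hcl.count("}")
    return has_resource and balanced
```

A check like `looks_like_bucket_config` catches truncated replies early, but it is no substitute for reviewing the plan before anything is applied.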

Interactive Mode (If OpenAI is Unavailable)

If OpenAI or LangChain is unavailable or not configured, the script switches to interactive mode and requests input for each necessary parameter:

- Bucket Name: `Bucket name (3-63 chars; lowercase, digits, -, _, .): error-logs-2`

- Location/Region: `Location/region (e.g., US, EU, us-central1). Leave blank to use provider default: us-central1`

- Storage Class: `Storage class [STANDARD, NEARLINE, COLDLINE, ARCHIVE] (default STANDARD):`

- Uniform Bucket-Level Access: `Enable uniform bucket-level access? [y/N]: y`

- Object Versioning: `Enable object versioning? [y/N]: y`

Once the necessary details are entered, the script automatically generates the Terraform HCL (HashiCorp Configuration Language) code for the GCS bucket. Here’s an example of the generated Terraform configuration:

```hcl
resource "google_storage_bucket" "bucket" {
  name                        = "error-logs-2"
  location                    = "US-CENTRAL1"
  storage_class               = "STANDARD"
  uniform_bucket_level_access = true

  versioning {
    enabled = true
  }
}
```

This Terraform configuration:

- Creates a GCS bucket named error-logs-2 in the US-CENTRAL1 region.

- Sets the storage class to STANDARD.

- Enables uniform bucket-level access and object versioning.
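The step from interactive answers to HCL can be sketched as a small rendering function. This is an illustration under stated assumptions rather than the script's real code: `BucketSpec` and `render_bucket_hcl` are hypothetical names, the name check is a simplified form of the rule quoted in the prompt, and alignment is left to `terraform fmt`.

```python
import re
from dataclasses import dataclass

# Simplified naming rule: 3-63 chars, lowercase letters, digits, '-', '_', '.',
# starting and ending with a letter or digit. Google applies further restrictions.
_NAME_RE = re.compile(r"^[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]$")
_STORAGE_CLASSES = {"STANDARD", "NEARLINE", "COLDLINE", "ARCHIVE"}


@dataclass
class BucketSpec:
    name: str
    location: str = ""
    storage_class: str = ""
    uniform_access: bool = False
    versioning: bool = False


def render_bucket_hcl(spec):
    """Validate the answers and return HCL for a google_storage_bucket resource."""
    if not _NAME_RE.match(spec.name):
        raise ValueError(f"invalid bucket name: {spec.name!r}")
    storage_class = spec.storage_class.upper() or "STANDARD"  # blank means default
    if storage_class not in _STORAGE_CLASSES:
        raise ValueError(f"unknown storage class: {spec.storage_class!r}")

    lines = ['resource "google_storage_bucket" "bucket" {', f'  name = "{spec.name}"']
    if spec.location:
        # A blank location is omitted, mirroring the prompt text. Check your
        # provider version, since some releases may require this argument.
        lines.append(f'  location = "{spec.location.upper()}"')
    lines.append(f'  storage_class = "{storage_class}"')
    lines.append(f"  uniform_bucket_level_access = {str(spec.uniform_access).lower()}")
    if spec.versioning:
        lines += ["", "  versioning {", "    enabled = true", "  }"]
    lines.append("}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_bucket_hcl(BucketSpec("error-logs-2", "us-central1", "", True, True)))
```

Validating before rendering keeps obviously bad input from ever reaching `terraform plan`, where the error message would be further from its cause.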


Running the Generated Terraform Configuration

Once the Terraform configuration is generated, the script runs the following commands:

- `terraform init` initializes the working directory and downloads the required provider plugins.

- `terraform fmt -recursive` formats the code for readability.

- `terraform plan -out main.tfplan` previews the changes and saves the plan, confirming the creation of the GCS bucket.

Afterward, `terraform apply -auto-approve main.tfplan` applies the saved plan and provisions the GCS bucket in Google Cloud based on the generated configuration.
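The command sequence above can be driven from Python with the standard `subprocess` module. The sketch below is one possible shape, not the article's actual script: `terraform_commands` and `run_pipeline` are hypothetical names, and the `runner` parameter exists only so the sequence can be exercised without invoking Terraform.

```python
import subprocess


def terraform_commands(plan_file="main.tfplan"):
    """Return the four Terraform invocations in the order the script runs them."""
    return [
        ["terraform", "init"],
        ["terraform", "fmt", "-recursive"],
        ["terraform", "plan", "-out", plan_file],
        ["terraform", "apply", "-auto-approve", plan_file],
    ]


def run_pipeline(workdir, plan_file="main.tfplan", runner=subprocess.run):
    """Run each step inside workdir; check=True stops at the first failing command."""
    for cmd in terraform_commands(plan_file):
        runner(cmd, cwd=workdir, check=True)


if __name__ == "__main__":
    run_pipeline(".")
```

Because `check=True` raises `subprocess.CalledProcessError` on a non-zero exit code, a failed `plan` prevents `apply` from ever running.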

Verifying the Bucket Creation

After running the terraform apply command, you can verify the creation of the GCS bucket by listing all buckets in your project:

```bash
gsutil ls
```

The output lists all available GCS buckets in the project, including the newly created error-logs-2 bucket.

By automating infrastructure provisioning with Terraform, you ensure that your GenAI infrastructure is scalable, reproducible, and consistent.
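The verification step described above can also be scripted rather than checked by eye. A minimal sketch, assuming `gsutil ls` prints one `gs://<bucket>/` URL per line; `bucket_listed` and `verify_bucket` are hypothetical helper names.

```python
import subprocess


def bucket_listed(gsutil_output, bucket_name):
    """Return True if the listing contains exactly gs://<bucket_name>/."""
    wanted = f"gs://{bucket_name}"
    return any(
        line.strip().rstrip("/") == wanted for line in gsutil_output.splitlines()
    )


def verify_bucket(bucket_name, runner=subprocess.run):
    """Run `gsutil ls` and report whether the bucket appears in its output."""
    result = runner(["gsutil", "ls"], capture_output=True, text=True, check=True)
    return bucket_listed(result.stdout, bucket_name)
```

Comparing whole lines rather than substrings avoids a false positive when one bucket name is a prefix of another, such as error-logs and error-logs-2. Note that this confirms existence only; checking settings such as versioning would need a separate query.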

Best Practices for Managing GenAI Infrastructure with Terraform

As AI workloads scale and evolve, maintaining efficient and secure infrastructure is critical. Here are some best practices to follow when managing GenAI infrastructure with Terraform:

1. Modularize Terraform Code

One of the key best practices for managing large-scale infrastructure is to modularize your Terraform code. Breaking your configurations into reusable modules makes it easier to scale, maintain, and update your infrastructure. For example, instead of having a single, monolithic Terraform file, you can create modules for:

- GPU cluster provisioning

- AI model endpoints (e.g., SageMaker or Vertex AI)

- Storage resources (e.g., S3, GCS)

This modular approach not only ensures that your Terraform code is scalable and maintainable, but it also makes it easier to share common infrastructure code across different projects or teams.

2. Ensure Security

Security is paramount, especially when managing AI infrastructures that may contain sensitive data and complex workloads. To enhance security:

- Use Role-Based Access Control (RBAC) to manage who can access and modify cloud resources. This ensures that only authorized users or services can make changes to AI resources like Vertex AI or SageMaker endpoints.

- Set up private endpoints for sensitive data processing or machine learning models. For instance, deploying AI models using private endpoints ensures that traffic does not traverse the public internet, reducing exposure to security vulnerabilities.

3. Optimize Costs

AI workloads, especially those involving GPU instances and high-performance computing, can be expensive. Cost optimization is essential for keeping your cloud expenses manageable:

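- Use cost estimation tooling to preview how much the infrastructure will cost before applying the plan. The terraform plan command has no built-in cost flags; estimates come from features such as HCP Terraform's cost estimation or from tools like Infracost that analyze the plan.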

- Automate the shutdown or termination of idle resources like GPU instances or unused AI models. This helps ensure you’re only paying for what you actually use, avoiding unnecessary charges.
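The idle-shutdown idea reduces to a simple rule: compare each resource's last activity against a threshold. The sketch below shows only that selection logic. The inventory format (`name` and `last_active` keys) and the two-hour default are assumptions made for illustration; in practice the timestamps would come from your cloud provider's monitoring data, and the actual stop call would go through its SDK or a Terraform change.

```python
from datetime import datetime, timedelta, timezone


def find_idle(resources, now, max_idle=timedelta(hours=2)):
    """Return the names of resources whose last activity is older than max_idle."""
    return [r["name"] for r in resources if now - r["last_active"] > max_idle]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Hypothetical inventory of GPU instances with their last recorded activity
    inventory = [
        {"name": "gpu-train-1", "last_active": now - timedelta(hours=6)},
        {"name": "gpu-infer-1", "last_active": now - timedelta(minutes=5)},
    ]
    for name in find_idle(inventory, now):
        print(f"candidate for shutdown: {name}")
```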

4. Leverage LangChain with Native AI Services

When managing GenAI infrastructure, it’s beneficial to use LangChain in conjunction with the native AI services of the cloud provider you're using. For instance:

- If you're working with Google Cloud (GCP), leverage Vertex AI, which hosts the Gemini models and can be integrated with LangChain to convert natural language prompts into Terraform modules for provisioning resources.

- Similarly, if you're using AWS, Bedrock can be integrated with LangChain to transform natural language requests into Terraform code. This integration simplifies the process of generating and managing infrastructure by converting natural language into the required configurations.

Using LangChain with cloud-native AI services ensures that you can automate the generation of Terraform code based on high-level descriptions, making it easier to deploy and manage GenAI infrastructure at scale.

Automating Terraform GenAI Workflows with Firefly's Self-Service

Firefly simplifies the process of generating and deploying Terraform code through its Self-Service feature, allowing users to create infrastructure configurations from scratch with minimal effort. Without Firefly, to generate Terraform configurations using natural language, you would typically need to use LangChain-based applications or custom setups to translate plain text prompts into Terraform code. This process involves manually integrating AI models and custom scripts to generate the configurations.

With Firefly, this process becomes seamless. Instead of manually setting up AI models or writing complex scripts, you can use Thinkerbell AI within Firefly to automatically convert your natural language descriptions into fully functional Terraform configurations. This integration makes it easy to describe your infrastructure needs, such as creating a GCS bucket, and have Firefly generate the necessary Terraform code without additional setup or external tools.

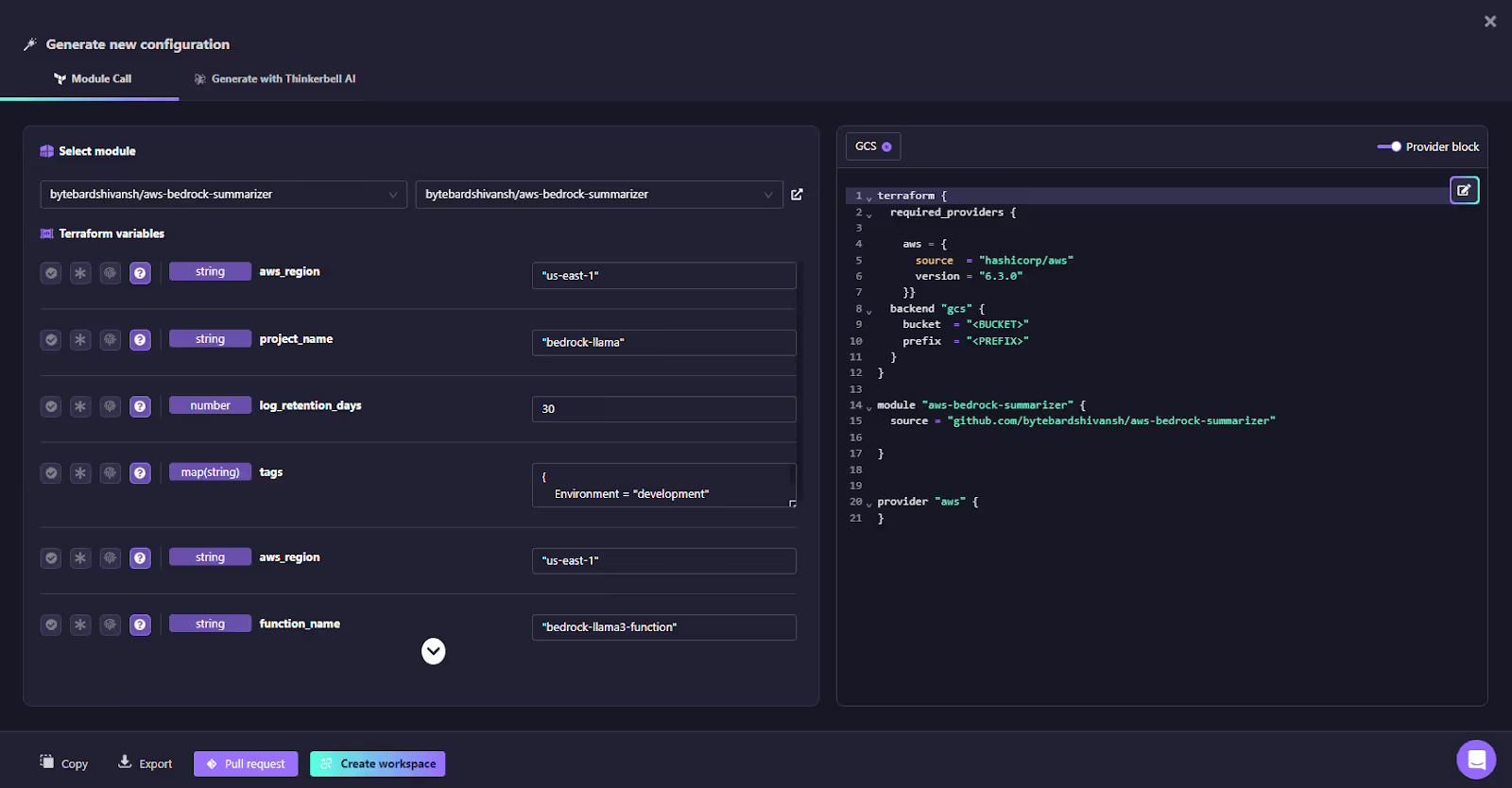

1. Firefly Self-Service Flows: Module Call and Thinkerbell AI

Firefly offers two primary methods for generating Terraform configurations:

- Module Call: This option allows users to select and configure existing Terraform modules from public or private repositories, which can then be tailored to their specific needs.

- Generate with Thinkerbell AI: This feature enables users to generate custom Terraform code by simply describing the desired infrastructure in plain English.

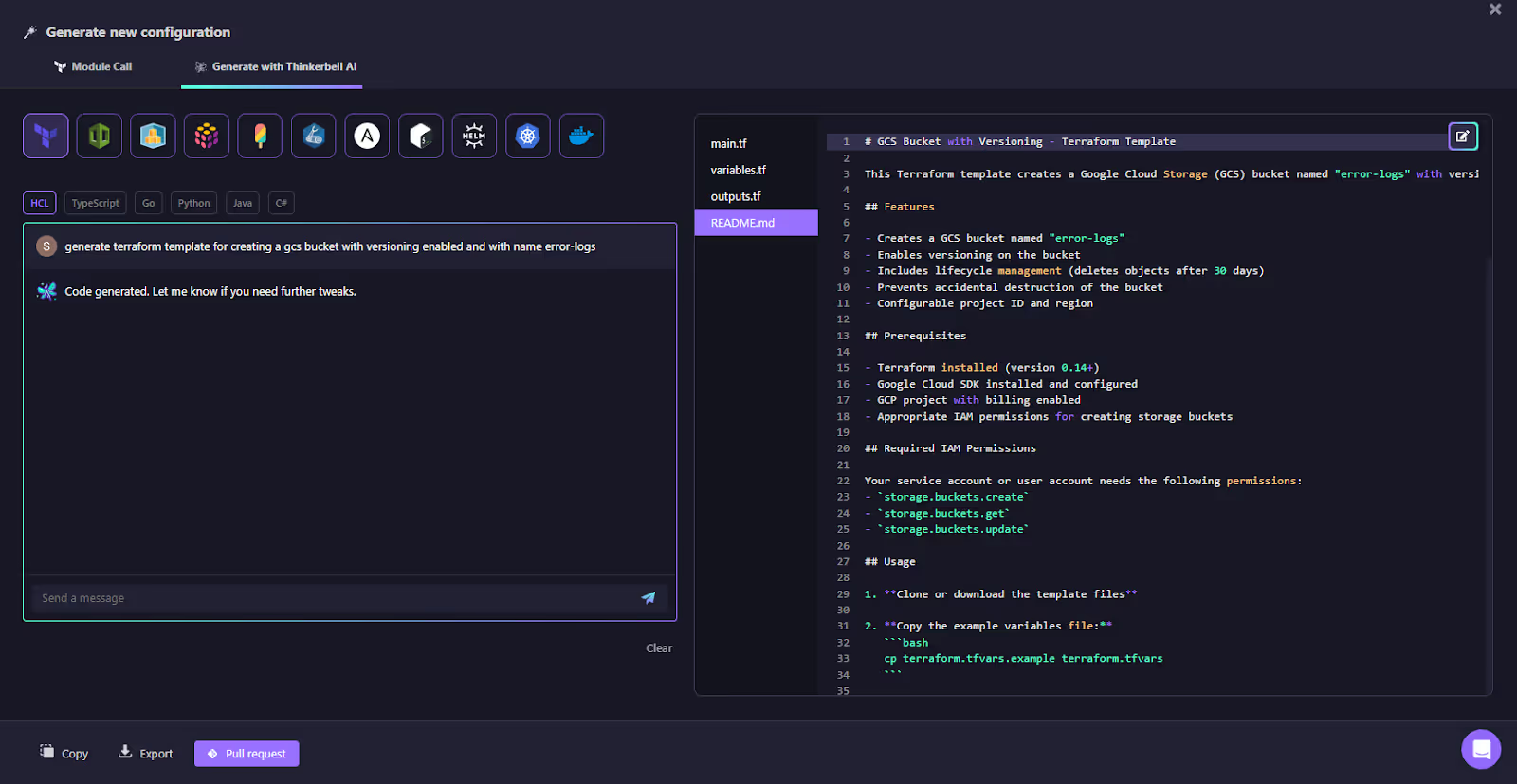

Using Thinkerbell AI to Generate Terraform Code

In this example, Thinkerbell AI is used to generate a Terraform configuration for a Google Cloud Storage (GCS) bucket with versioning enabled, named error-logs, through Firefly’s Self-Service.

To create a GCS bucket configuration using Thinkerbell AI, follow these steps:

1. Access the Self-Service Interface

Go to the Self-Service section within the Firefly interface:

- Navigate to Workflows > Self-Service > Generate with Thinkerbell AI.

2. Select Your IaC Tool

Choose Terraform from the list of supported Infrastructure-as-Code (IaC) tools.

3. Describe Your Desired Configuration

In the prompt field, as shown in the snapshot above, enter a detailed description of the resource you want to create. For example:

"Generate a terraform template for creating a GCS bucket with versioning enabled and with the name error-logs."

4. Generate the Configuration

Click Generate. Thinkerbell AI will process your input and generate the corresponding Terraform HCL code.

5. Review the Generated Code

The generated Terraform configuration will appear in the Firefly interface. Here's an example of the generated code for the GCS bucket:

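```hcl
resource "google_storage_bucket" "bucket" {
  name                        = "error-logs"
  location                    = "US-CENTRAL1"
  storage_class               = "STANDARD"
  uniform_bucket_level_access = true

  versioning {
    enabled = true
  }

  # Bucket lifecycle rule: delete objects older than 30 days
  lifecycle_rule {
    action {
      type = "Delete"
    }

    condition {
      age = 30
    }
  }

  # Terraform meta-argument: block accidental destruction of the bucket
  lifecycle {
    prevent_destroy = true
  }
}
```

6. Export or Create a Pull Request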

Once the Terraform code is generated:

- Export: You can either download the code or copy it to your clipboard.

- Pull Request: If integrated with a Version Control System (VCS), you can create a pull request directly to your repository, making collaboration seamless.

7. Modify or Iterate as Needed

If you need to tweak the configuration, you can modify the generated code in the Firefly interface. This flexibility allows you to adjust the infrastructure details to match your exact requirements.

Firefly Runners: Deploying the Codified Resources

Once the Terraform code is ready, Firefly Runners handle the deployment process by automating the Terraform plan and apply workflows. These secure, managed execution environments are designed to streamline the deployment of cloud resources.

Automated Plan and Apply Workflows

When a pull request is submitted with the generated Terraform code:

- Firefly Runners automatically detect the changes, trigger a terraform plan to preview the deployment, and validate the changes against your infrastructure.

- After the pull request is approved and merged, Firefly Runners will automatically execute terraform apply, provisioning the GCS bucket with versioning enabled as per the configuration.

Intelligent Plan Automation

Firefly ensures that the plan is executed using the correct configuration and variable values, accurately reflecting the live cloud environment. This minimizes human error and ensures that the deployment process is consistent.

Guardrails for Policy Enforcement

Before applying the configuration, Firefly Guardrails automatically checks for any security, cost optimization, or compliance issues. If any violations are detected, Firefly will prevent the apply phase and notify the appropriate team members for review in communication channels such as Slack.
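To make the guardrail idea concrete, the sketch below checks a Terraform plan for two sample bucket rules. It is a generic illustration and not Firefly's implementation: the rules and function names are hypothetical, and it assumes the plan was exported with `terraform show -json main.tfplan`, whose output lists planned changes under `resource_changes`.

```python
import json


def find_violations(plan):
    """Scan a plan (parsed JSON) for storage buckets that break two sample rules."""
    violations = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "google_storage_bucket":
            continue
        # "after" holds the planned attribute values; it is null for deletions
        after = (rc.get("change") or {}).get("after") or {}
        if not after.get("uniform_bucket_level_access"):
            violations.append(f"{rc['address']}: uniform bucket-level access is disabled")
        # Nested blocks such as versioning appear as a list of objects
        versioning = after.get("versioning") or []
        if not any(v.get("enabled") for v in versioning):
            violations.append(f"{rc['address']}: object versioning is disabled")
    return violations


def check_plan_file(path):
    """Load a JSON plan from disk and return its violations."""
    with open(path) as fh:
        return find_violations(json.load(fh))
```

A pipeline would typically fail the run when the returned list is non-empty, so that the apply step is never reached.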

In short: Firefly for Terraform Deployment

Integrating Firefly into your Terraform workflows simplifies the process of generating and deploying infrastructure. Firefly’s Self-Service feature, powered by Thinkerbell AI, allows you to create Terraform code using natural language descriptions, making it easy to provision cloud resources like GCS buckets and other infrastructure components. With Firefly Runners, your IaC deployments are automated, streamlined, and seamlessly integrated with your VCS, ensuring consistent, secure, and efficient cloud infrastructure management.


FAQs

1. Does Terraform use AI?

Terraform itself doesn’t directly use AI, but it can integrate with AI tools to enhance workflows. AI coding assistants like Amazon Q Developer (formerly Amazon CodeWhisperer) and GitHub Copilot assist in generating Terraform code, while the Terraform MCP server gives AI models access to current Terraform Registry data for more accurate configurations. Additionally, AI agents can interact with HCP Terraform (formerly Terraform Cloud) to manage infrastructure.

2. What are Terraform agents?

HCP Terraform agents (formerly Terraform Cloud Agents) are lightweight binaries deployed in private, isolated, or on-prem environments. They execute Terraform operations on behalf of HCP Terraform or Terraform Enterprise by polling for work over outbound connections, which lets you manage infrastructure in private networks without opening inbound access.

3. Is Terraform agent or agentless?

Terraform is primarily an agentless tool: it communicates directly with cloud provider APIs and needs nothing installed on the resources it manages. SSH comes into play only with optional provisioners such as remote-exec. However, with HCP Terraform and Terraform Enterprise, optional self-hosted agents can be deployed to execute runs from private networks or integrate with internal tools, enabling more control over the execution within specific environments.

4. Does Cursor AI work with Terraform?

Yes, Cursor AI works with Terraform. Cursor, an AI-powered code editor built on Visual Studio Code, integrates AI functionalities that significantly enhance writing and managing Infrastructure as Code (IaC) with Terraform. It provides code suggestions, error detection, and automates Terraform code generation, streamlining the process of creating and managing Terraform configurations.
