On October 20, 2025, the AWS region in North Virginia, experienced a severe outage for nearly 16 hours: disrupting 113 services and taking major platforms like Zoom, DoorDash, Capital One, Coinbase, and Reddit offline. These companies (which, collectively, were spending eight to nine figures annually on backup and disaster recovery solutions) were completely powerless.

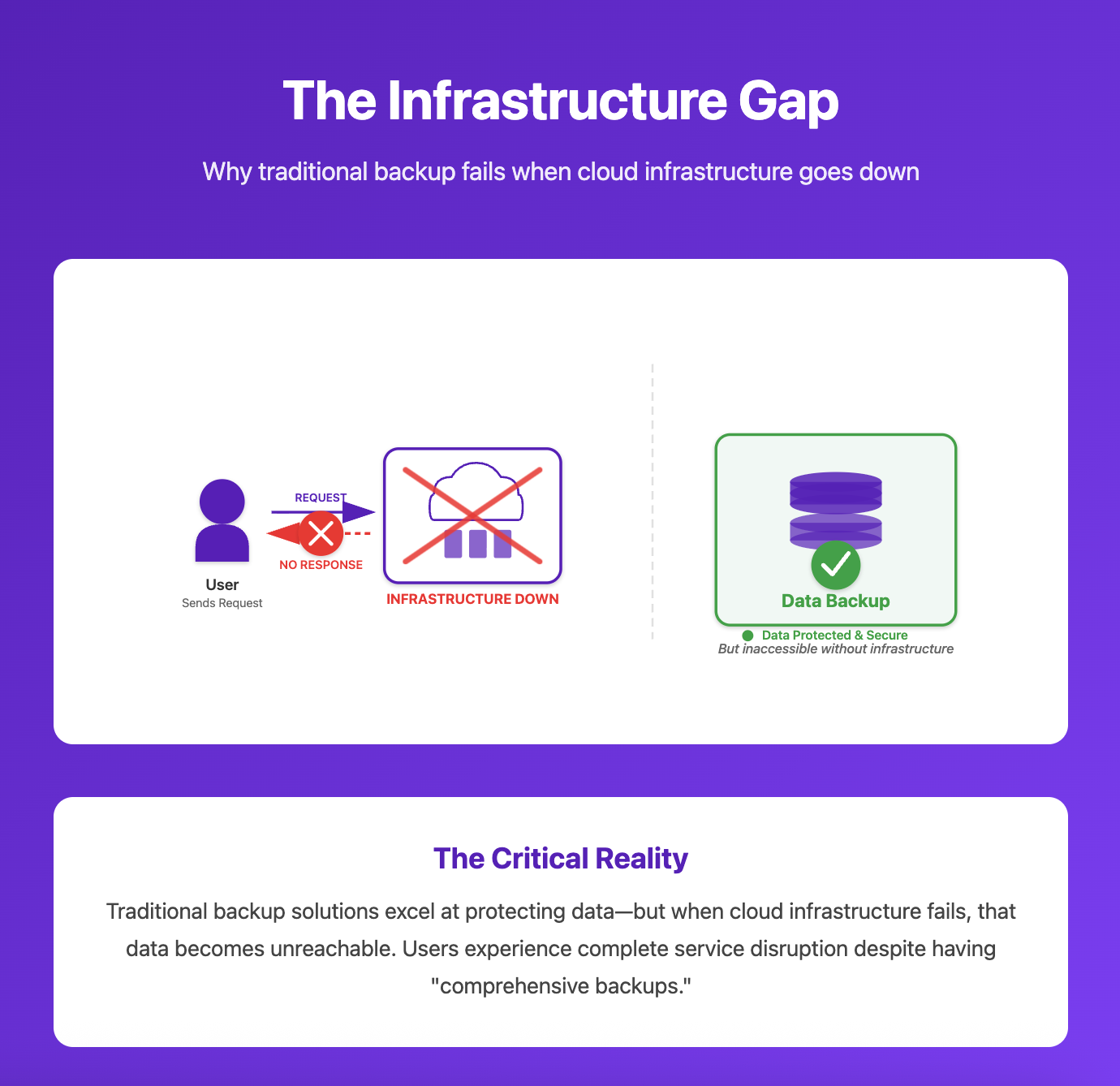

The incident exposed an underacknowledged truth: backups protect data, not business continuity and availability. And without available infrastructure, your data isn’t worth much.

The $100 Million Question Nobody Wants to Ask

Our dependency on cloud is complete.

Zoom: a company so critical to modern work. DoorDash, serving millions of meals daily. Capital One and Coinbase, handling billions in financial transactions.

- All of them have disaster recovery plans, teams, and audits.

- All of them invest heavily in backup “and DR” tools.

- All of them went down through AWS's US-EAST-1 region outage (and some companies were down even longer).

The hard truth is: backing up your data is critical, but it does almost nothing for business continuity. And if some of the most seemingly well-prepared brands are paying for solutions and still not truly disaster-ready, what’s in store for the rest?

A Pattern of Failure: All Clouds, All Regions Are at Risk

This wasn't an AWS problem. It's a cloud infrastructure problem.

Just one week after the AWS outage, Azure experienced its own major disruption. Over the past five years, the major cloud providers have collectively suffered dozens of significant outages. Beneath the headlines are even more small-scale events that never reach the news cycle, but the companies affected suffer catastrophic losses. The cumulative damage runs into the billions.

The uncomfortable truth is that no cloud provider is immune. No region is guaranteed uptime. AWS's US-EAST-1 may be notorious, but every cloud and every region carries risk.

Which means every cloud-dependent company needs robust infrastructure disaster recovery: not as a nice-to-have, but as a fundamental requirement for business continuity.

Enter CAIRS: The Category That Changes Cloud Resiliency

This kind of widespread trouble with disaster recovery is precisely why Gartner created a new category in 2025: Cloud Application Infrastructure Recovery Solutions (CAIRS).

According to Gartner, CAIRS solutions automate discovery, protection, and restoration of full-stack cloud applications: not just data, but infrastructure and configurations, too. For decades, backup and disaster recovery practices focused almost exclusively on safeguarding data, leaving a critical gap: if infrastructure and configurations are compromised, protected data remains inaccessible.

Cloud resiliency is two things: it tells you which parts of your cloud are backup-ready, and then it couples it with the ability to automate the deployment of the backup and infrastructure. But not all solutions make true cloud resiliency possible.

CAIRS adoption is trending up rapidly, and in light of the major outages of October 2025 (AWS, Azure, and even Claude), it's becoming a standard for cloud-dependent companies who can't afford extended downtime

The False Promise of Traditional DR

Here's the shameful little secret about traditional disaster recovery no one talks about: it was designed for on-prem IT in the 90’s.

Legacy DR solutions assume your infrastructure is static, predictable, and owned by you. They focus obsessively on data backup: creating snapshots, replicating databases, archiving files. But in cloud-native environments, data is only half the equation. Without the infrastructure layer, like the compute instances, networking configurations, security groups, and IAM policies, your backed-up data might as well not exist.

When AWS's US-EAST-1 region failed, companies discovered they couldn't simply "restore from backup." They needed to:

- Spin up infrastructure in a different region

- Understand which resources ran in US-EAST-1

- Replicate and reconfigure networking and security

- Update DNS and load balancers

- Restore application state and reconnect integrations

All while their SLAs were bleeding, and their competitors were gaining ground.

Why ClickOps Is Your Single Point of Failure

The AWS outage exposed another uncomfortable truth: large portion of cloud infrastructure exists as undocumented, manually configured resources that can't be easily replicated or recovered. This is the ClickOps problem.

Engineers log into the AWS console, click through wizards, and deploy resources directly. It's fast. It's intuitive. And it's a disaster waiting to happen.

When those manually configured resources fail (or when an entire region goes dark), how do you recreate them? From memory? From scattered documentation? From screenshots?

But even if you have Infrastructure-as-Code, how do you manage it across multiple clouds, accounts, and regions? Do you have a system of record that shows your dependency on a single region or service? The organizations recovering fastest are the ones with IaC coverage everywhere, centralized visibility into multi-cloud dependencies, and automated deployment pipelines that actually work across their entire footprint.

What Should You Do Right Now?

The answer isn't spending more on traditional backup solutions. It's fundamentally rethinking your approach to cloud resilience:

Step 1: Audit Your Infrastructure Resilience

Can you answer these questions right now? (Hint: If you can't answer confidently, you're vulnerable.)

- Which parts of your cloud infrastructure are backed up?

- Can you recreate your entire stack in a different region or account?

- How long would recovery actually take?

- What percentage of your infrastructure exists as undocumented ClickOps resources?

- Do you have a solution to automate recovery quickly and effectively?

Step 2: Adopt Infrastructure-as-Code Everywhere

Every resource in your cloud should be defined as code. Not some. Not most. All of it. This isn't optional anymore. When (not if) the next outage hits, you need to be able to redeploy your entire infrastructure with a single command. This approach has many additional benefits, apart from enabling cloud infrastructure disaster recovery.

Step 3: Implement CAIRS

Don't wait for the next outage to discover you're unprepared. Business Continuity and Cloud Resiliency demands effective CAIRS strategy. Gartner's recognition of CAIRS underscores the industry's shift toward solutions that restore the entire cloud stack, so organizations can resume operations quickly after an incident.

Step 4: Test Your Actual Recovery Capabilities

Most disaster recovery plans look great on paper and fail spectacularly in practice. When was the last time you actually tested failing over to a different region? Not a tabletop exercise but an actual test with your production workloads.

Step 5: Invest in Purpose-Built Cloud Resilience Tools

Cloud infrastructure is being deployed faster than ever, and unfortunately for many, quality and reliability are taking the hit. Cloud teams need to shift budget from traditional backup solutions to actual resilience infrastructure.

Those following Gartner's guidance will adopt solutions that enable fast, automated recovery through IaC, reducing recovery times from days to minutes. And purpose-built tools like Firefly's Disaster Recovery and Cloud Resiliency Posture Management (CRPM) are designed to help cloud-native infrastructure withstand disasters and deliver true business continuity: not just data protection.

The Moment of Truth Is Coming

It took learning the hard way for some, and watching a cautionary tale unfold for others. But finally, cloud leaders are starting to understand: they must pay more attention to resiliency, not just data backup. The threat landscape is evolving faster than traditional DR strategies can adapt.

October 2025, showed us how small errors at AWS and Azure can cascade into global outages. The difference between those who stay afloat and those who go down is a comprehensive approach to preparedness: one that factors in your data as well as your infra and all its configurations.

🔗 Dive into an overview of CAIRS solutions and why they matter

🔗 Explore Firefly’s disaster recovery and cloud backup capabilities

🔗 Get the scoop direct from Gartner