TL;DR

- Terraform Cloud solved remote state + remote runs, but its Terraform-only model creates visibility and governance blind spots in multi-IaC, multi-cloud orgs.

- Pick replacements by operating model, not features: decide who owns runners, where policies run, and where state lives before choosing tooling.

- Prioritise predictable pricing, multi-IaC support, OPA/Sentinel policy placement, CI/CD integration, and state flexibility when evaluating platforms.

- Use CI (GitHub/GitLab/Azure) for execution control and rapid iteration; use PR-driven tools (such as Atlantis) for strict GitOps Terraform workflows.

- Add a visibility/control plane (e.g., Firefly or a similar solution) when you need to track cross-tool inventory, monitor real-time drift, and codify unmanaged resources.

- For enterprise-scale adoption, consider layered, platform-centric patterns: a stable foundation layer versus fast-moving app layers, module registries, identity-first pipelines, and isolated runner pools.

Terraform Cloud became popular mainly because it solved a few pains that every infra team hits early on: where to keep remote state, how to run plans without exposing local credentials, and how to enforce guardrails through Sentinel or OPA. Add workspaces, variable sets, and remote execution, and it gives teams a structured place to manage Terraform workloads at scale.

But enterprise infrastructure has moved far beyond a single IaC tool. Most large setups today run a mix of Terraform for core infra, Pulumi for app-centric stacks, CloudFormation or CDK for AWS-first teams, and ARM or Bicep on the Azure side. Even Kubernetes manifests and Helm charts end up living in the same ecosystem of configuration that needs visibility and governance.

Because of this spread, the conversation across DevOps teams has shifted. The real challenge is no longer just managing Terraform state. It’s about getting a unified understanding of everything deployed across clouds, spotting drift no matter which IaC tool created the resource, and enforcing consistent policies across AWS, Azure, and GCP even when different teams pick different stacks.

This blog looks at practical alternatives to Terraform Cloud that align better with enterprise multi-cloud patterns. Instead of focusing on theoretical features, the goal is to break down how these platforms handle IaC diversity, governance, drift detection, and large-scale automation in real environments.

Evolving Terraform Cloud Usage in Enterprise Multi-Cloud Environments

Terraform Cloud works well when the infrastructure is fully Terraform-based. It gives teams a managed state backend, locks state correctly, handles concurrent runs, and keeps execution on workers instead of relying on local machines. The permissions model around workspaces and variable sets also makes environment separation predictable.

The limits show up once the environment grows beyond Terraform. Most enterprises don’t stick to one IaC tool. You’ll see Terraform for foundational infra, Pulumi for application stacks, AWS CDK or CloudFormation for teams that want tighter AWS integration, and ARM or Bicep for Azure-specific builds. Terraform Cloud isn’t designed to coordinate these different execution models.

A few problems consistently appear:

- Multiple IaC frameworks running in parallel: Terraform Cloud can’t track resource state, drift, or lifecycle events for Pulumi, CDK, or CloudFormation. Each tool maintains its own state backend and workflow.

- Centralised governance: Enterprises want OPA-style policies applied across all IaC types. Terraform Cloud’s policy checks only work on Terraform plans, so anything deployed through another tool bypasses governance.

- Unmanaged resources: Cloud accounts always have resources created outside IaC, either manually, via console, or through other automation. Terraform Cloud only knows what exists in Terraform state, so it can’t surface gaps in coverage.

As organisations scale, these blind spots create operational risks and fragmented visibility. That’s what pushes teams to look beyond Terraform Cloud for platforms that give unified governance, drift detection, and inventory across all IaC frameworks and clouds.

Evaluation Framework for Multi-IaC and Multi-Cloud Automation Platforms

When comparing alternatives, the evaluation goes beyond “can it run Terraform.” The real value comes from how well the platform fits enterprise workflows, budget models, and the mix of IaC tools already in use. These are the criteria that matter when the environment is large, multi-cloud, and built around CI/CD.

1. Pricing model and cost predictability

Terraform Cloud’s RUM (Resources Under Management) pricing introduces unpredictable billing because the cost scales with the number of managed cloud resources, not actual usage. Most enterprises prefer platforms with run-based or concurrency-based pricing since they map cleanly to pipeline activity and are easier to forecast. The evaluation should include what each tier actually unlocks, such as concurrency limits, policy features, and team management.
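To make the forecasting point concrete, here is a small arithmetic sketch. The two rates are hypothetical placeholders, not any vendor's actual prices; the only thing the example is meant to show is that a resource-based bill grows with the size of the estate even when pipeline activity stays flat.

```python
def monthly_cost_rum(resources: int, rate_per_resource: float) -> float:
    """Cost when billing scales with the number of managed resources."""
    return resources * rate_per_resource


def monthly_cost_runs(runs: int, rate_per_run: float) -> float:
    """Cost when billing scales with the number of pipeline runs."""
    return runs * rate_per_run


# Hypothetical rates, chosen only to illustrate the scaling behaviour.
RATE_PER_RESOURCE = 0.25  # assumed currency units per resource per month
RATE_PER_RUN = 0.5        # assumed currency units per run

for resources in (5_000, 20_000):
    rum = monthly_cost_rum(resources, RATE_PER_RESOURCE)
    run_based = monthly_cost_runs(1_000, RATE_PER_RUN)  # same pipeline activity
    print(f"{resources} resources: RUM={rum:.2f}, run-based={run_based:.2f}")
```

With the same 1,000 runs per month, the run-based figure stays constant while the resource-based figure quadruples as the estate grows from 5,000 to 20,000 resources.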

2. Multi-IaC support

If the environment includes only Terraform or OpenTofu, a Terraform-centric alternative is fine. But if teams also use Pulumi, CloudFormation, CDK, Kubernetes manifests, or Ansible, the tool needs to ingest these workflows natively. Otherwise, you end up with fragmented policy and drift detection across stacks.

3. Governance and policy-as-code

Many alternatives adopt OPA because it’s the standard most security teams already use. The details matter: some platforms allow policies to run before the plan, after the plan, during approvals, or as part of periodic scans. Terraform Cloud’s Sentinel applies only within Terraform runs, so it never sees Pulumi or native cloud activity.
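As a rough illustration of what a post-plan policy check does, the sketch below walks the `resource_changes` list from a Terraform plan rendered with `terraform show -json` and flags resources that lack required tags. It is written in Python rather than Rego purely for illustration, and the tag names and sample plan are made up.

```python
REQUIRED_TAGS = {"owner", "environment"}  # hypothetical policy input


def missing_tag_violations(plan: dict) -> list[str]:
    """Return one message per created/updated resource lacking required tags."""
    violations = []
    for rc in plan.get("resource_changes", []):
        change = rc.get("change", {})
        actions = set(change.get("actions", []))
        if not actions & {"create", "update"}:
            continue  # ignore no-op, read, and pure delete changes
        after = change.get("after") or {}
        tags = after.get("tags") or {}
        missing = REQUIRED_TAGS - set(tags)
        if missing:
            violations.append(f"{rc['address']}: missing tags {sorted(missing)}")
    return violations


# Trimmed-down, invented plan data in the shape of the JSON plan output.
sample_plan = {
    "resource_changes": [
        {"address": "aws_s3_bucket.logs", "type": "aws_s3_bucket",
         "change": {"actions": ["create"],
                    "after": {"tags": {"owner": "platform"}}}},
        {"address": "aws_s3_bucket.assets", "type": "aws_s3_bucket",
         "change": {"actions": ["update"],
                    "after": {"tags": {"owner": "web", "environment": "prod"}}}},
        {"address": "aws_s3_bucket.old", "type": "aws_s3_bucket",
         "change": {"actions": ["delete"], "after": None}},
    ]
}

for message in missing_tag_violations(sample_plan):
    print(message)
```

A check like this only ever sees what is in a Terraform plan, which is exactly the limitation described above: resources created by Pulumi or by hand never pass through it.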

4. Workflow and CI/CD integration

For most enterprises, IaC runs are driven by GitHub Actions, GitLab CI, or Azure DevOps. An alternative tool must integrate cleanly with these pipelines, not force a parallel workflow. Features like custom lifecycle hooks, PR-driven plan/apply flows, and support for self-hosted runners inside private networks matter a lot when the infra estate spans multiple VPCs or restricted environments.

5. Scalability and performance

Large organisations routinely push multiple runs across dozens of repositories at the same time. The platform should handle high concurrency without queue bottlenecks. It should also keep performance stable when dealing with large or deeply nested state files. Permissions and policy inheritance must scale across many teams and projects without turning into a manual maintenance problem.

6. State management flexibility

Some teams want a fully managed backend like Terraform Cloud provides. Others need state in their own S3 buckets, Azure Blob accounts, or GCS buckets for compliance, audit, or residency reasons. A good alternative should offer both models, not lock you into one.

7. Ease of adoption and migration effort

“Drop-in replacements” reduce migration time because they mirror Terraform Cloud’s workflow. More customizable platforms might introduce new concepts, richer hooks, multi-IaC orchestration, or deeper policy controls, which provide more power but require additional ramp-up.

Overall, the decision usually comes down to three factors:

- whether the pricing model is predictable,

- whether the tool can support mixed IaC stacks, and

- whether the governance model remains consistent across all deployments.

A strong alternative should improve visibility and control without forcing the organisation to redesign its entire delivery pipeline.

Terraform Cloud Alternatives for Enterprise Environments [2026]

1. Firefly

Firefly is a multi-cloud IaC control plane that covers both visibility and orchestration. It connects directly to AWS, Azure, GCP, Kubernetes, and several SaaS providers to build a live resource inventory, correlates each asset with its IaC source, and highlights anything unmanaged or drifting. On top of that, Firefly includes Firefly Runners, a full execution engine for Terraform, OpenTofu, and Terragrunt, with managed and self-hosted runner options. This makes it suitable for teams that want unified drift detection, policy enforcement, IaC generation, and pipeline automation across their cloud footprint.

Key Features

1. IaC Execution (Terraform / OpenTofu / Terragrunt)

- Managed and self-hosted runners

- Plan/apply with full run history

- PR-driven plans and merge-driven applies

- Manual or auto-apply gates

- Periodic plans for scheduled drift checks

- Version pinning for Terraform/OpenTofu/Terragrunt

2. Unified Cloud Inventory

- Reads live cloud state directly from provider APIs

- Cross-cloud inventory for AWS, Azure, GCP, Kubernetes, and SaaS integrations

- Resource-level metadata, configuration, and dependency graph

- Identifies unmanaged resources (no associated IaC)

3. Continuous Drift Detection

- Real-time drift based on provider state

- Attribute-level diffs with exact changed values

- CloudTrail / audit-log correlation to show who made the change

- Alerting to Slack, Teams, Email, PagerDuty

4. Codification (Auto-Generate IaC)

- Generates Terraform, OpenTofu, Pulumi, CloudFormation, CDK, Bicep/ARM, Crossplane, Helm, and Kubernetes manifests

- Captures related dependencies (e.g., SGs, NICs, volumes)

- Useful for onboarding legacy accounts or enforcing IaC coverage

5. Policy & Guardrails

- No-code policy builder and full OPA/Rego support

- Policies run on PR plans, merges, periodic scans, and live inventory

- Blocks deployments or flags misconfigurations

- Produces compliance insights across clouds and IaC types

6. Workflow & CI/CD Integration

- Integrates with GitHub, GitLab, and Azure DevOps

- Can run as the primary IaC runner or plug into existing CI pipelines

- Supports approval workflows, notifications, and audit trails

7. Governance & Access Control

- Projects for workspace grouping and delegation

- Variable sets with inheritance across project/org levels

- Workspace-level RBAC and audit logs

Hands-On with Firefly: IaC Runs, Guardrails, and Real-Time Inventory

Firefly becomes most useful once your cloud accounts and IaC repositories are connected. After onboarding, Firefly automatically builds a real-time inventory from AWS, Azure, GCP, Kubernetes, and any SaaS integrations you’ve enabled. From there, workspaces, variable sets, guardrails, drift detection, and run history all live in one place.

Below is a walkthrough of what the actual workflow looks like inside the platform.

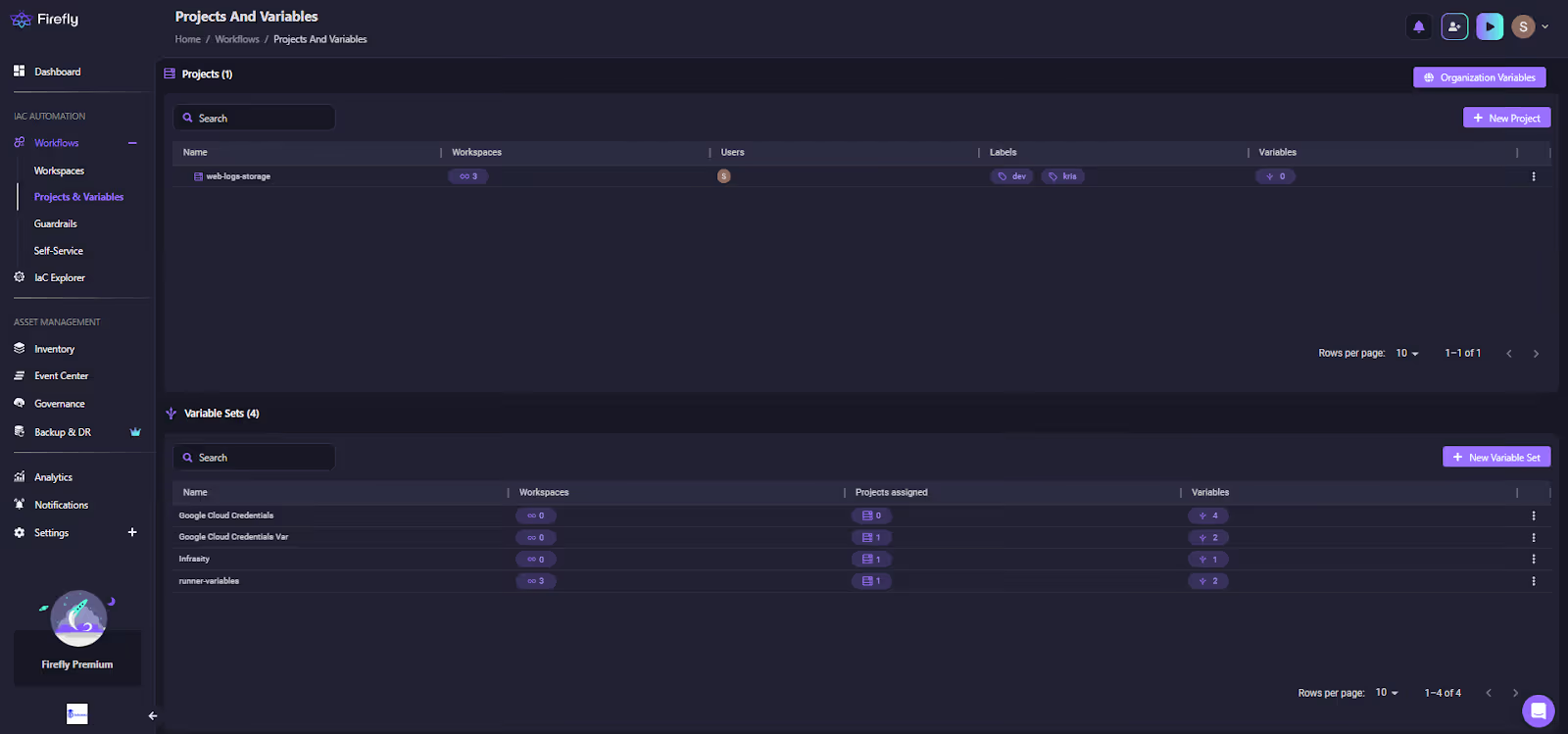

Projects and Variable Sets

Firefly organises IaC execution using Projects (logical groupings) and Variable Sets (shared environment variables, credentials, and configuration inputs). Variable inheritance makes it easy to distribute provider credentials, runner inputs, and shared parameters across multiple workspaces. Here’s how the Projects & Variables view shows a project with multiple workspaces and variable sets mapped to different IaC contexts:

In the above visual:

- The project hosts three active workspaces.

- Variable sets are neatly separated (GCP creds, runner variables, infra vars).

- Each variable set shows where it's attached and how many workspaces consume it.

This structure keeps Terraform/OpenTofu inputs aligned without scattering secrets inside repos or pipelines.
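Variable inheritance is easiest to reason about as layered lookups. The sketch below assumes that the more specific level wins (workspace over project over organisation); that precedence and the variable names are assumptions for illustration, not documented Firefly behaviour.

```python
from collections import ChainMap


def resolve_variables(org: dict, project: dict, workspace: dict) -> dict:
    """Merge variable sets so that the most specific level wins."""
    # ChainMap searches its maps left to right, so earlier maps take priority.
    return dict(ChainMap(workspace, project, org))


org_vars = {"region": "us-east1", "log_level": "info"}   # hypothetical values
project_vars = {"region": "europe-west1"}
workspace_vars = {"log_level": "debug"}

print(resolve_variables(org_vars, project_vars, workspace_vars))
```

Keeping the layers separate until resolution time is what lets one shared credential set feed many workspaces without being copied into each of them.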

Workspaces and Run History

Workspaces are where Terraform/OpenTofu/Terragrunt execution actually happens. Each workspace maps to a repo, branch, and IaC directory. Firefly pulls the code, detects the IaC version, and runs the workflow (init, plan, guardrails and apply).

Here you can clearly see:

- Workspaces tied to different repos and branches

- IaC versions like Terraform 1.5.7 / 1.12.2 automatically detected

- Last run status (plan failed, blocked, applied, completed)

- Number of historical runs for auditability

This becomes the control plane for all IaC execution, with runs centralised in one place and behaviour predictable across repositories.

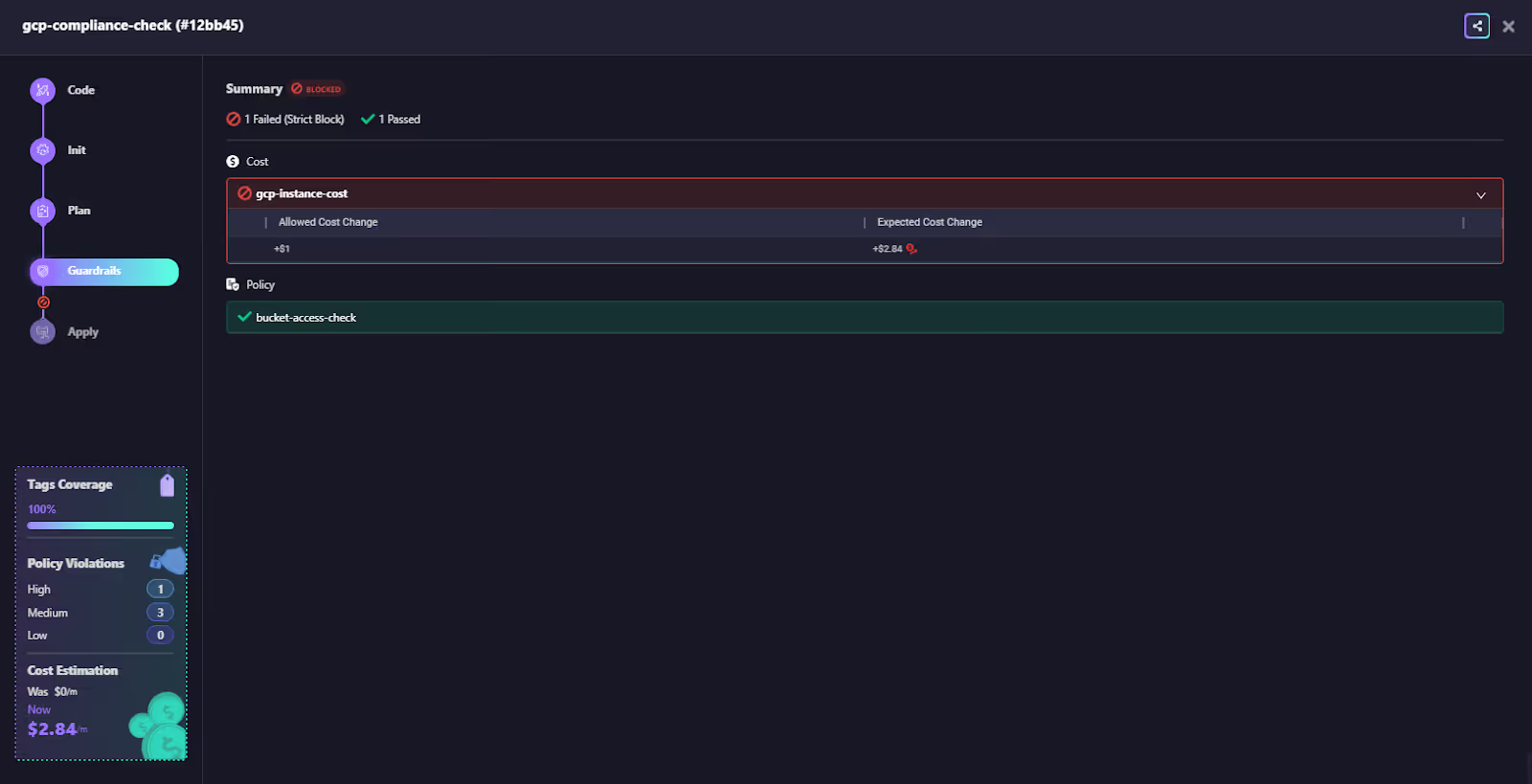

Guardrails (Policy + Cost + Security Checks)

When a workspace run hits the Guardrails phase, Firefly runs policy evaluation using OPA/Rego or no-code rules you’ve configured. This includes cost checks, security rules, and resource-level validations.

In this run:

- Firefly blocked the deployment because a GCP VM exceeded the allowed cost delta.

- The bucket IAM check passed successfully.

- Tag coverage, cost estimation, and violation counts are visible on the left panel.

This gives you deterministic, enforceable policy behaviour without building your own admission logic in CI.
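A cost guardrail of the kind described above boils down to comparing an estimated cost change against a threshold. The following is a minimal sketch under assumed inputs: the estimate fields, the resource addresses, and the threshold are all hypothetical, and it is not how Firefly computes or evaluates cost.

```python
from dataclasses import dataclass


@dataclass
class CostEstimate:
    address: str           # resource address from the plan
    monthly_before: float  # estimated monthly cost before the change
    monthly_after: float   # estimated monthly cost after the change


def cost_guardrail(estimates: list[CostEstimate], max_delta: float) -> dict:
    """Block the run if any single resource's cost increase exceeds max_delta."""
    blocked = [
        e.address
        for e in estimates
        if e.monthly_after - e.monthly_before > max_delta
    ]
    return {"allowed": not blocked, "blocked_by": blocked}


estimates = [
    CostEstimate("google_compute_instance.api", 30.0, 140.0),
    CostEstimate("google_storage_bucket.assets", 2.0, 2.5),
]
print(cost_guardrail(estimates, max_delta=50.0))
```

The useful property is that the verdict names the offending resource, so the person reading a blocked run knows what to change.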

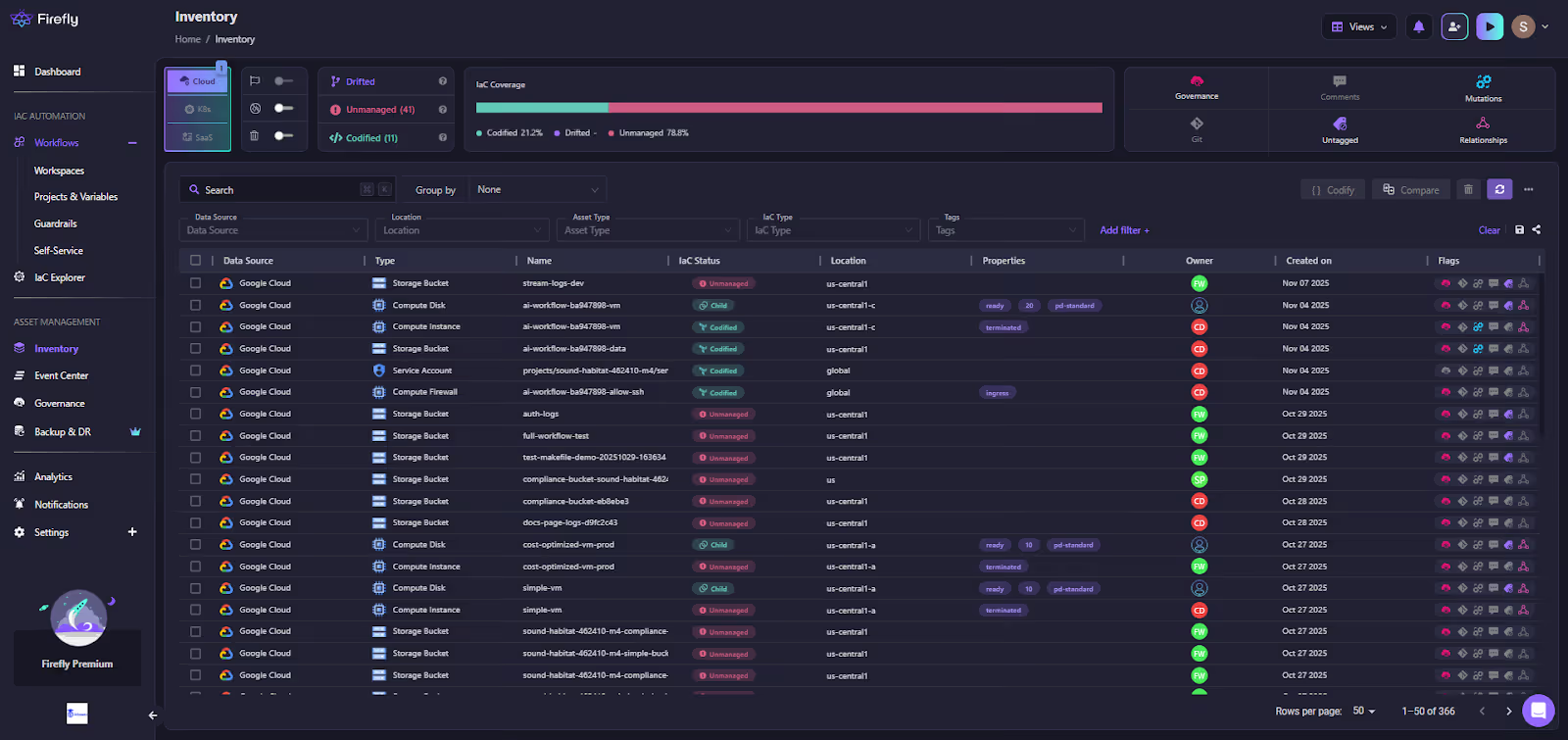

Inventory and IaC Coverage

The Inventory view aggregates every asset across all cloud accounts, grouped by data source, asset type, or IaC coverage status. Firefly highlights what’s managed by IaC, what’s unmanaged, and what’s drifting.

Here, the system shows:

- Full GCP inventory (buckets, disks, instances, firewall rules)

- Per-resource IaC status (Codified / Drifted / Unmanaged)

- Asset owners, creation timestamps, and flags

- High-level IaC coverage metrics (e.g., 21.2% codified)

This makes it straightforward to identify gaps in IaC adoption, enforce standards, and onboard legacy infrastructure.
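Conceptually, the three statuses come from comparing what exists in the cloud with what IaC declares. Here is a simplified sketch of that classification and of a coverage percentage; the asset IDs and attributes are invented, and a real control plane would match resources far more carefully than by a single ID.

```python
def classify_assets(live: dict, declared: dict) -> dict:
    """Label each live asset as Codified, Drifted, or Unmanaged."""
    status = {}
    for asset_id, attrs in live.items():
        if asset_id not in declared:
            status[asset_id] = "Unmanaged"   # exists in the cloud, not in IaC
        elif declared[asset_id] != attrs:
            status[asset_id] = "Drifted"     # in IaC, but attributes differ
        else:
            status[asset_id] = "Codified"
    return status


def coverage_percent(status: dict) -> float:
    """Share of assets that are Codified, as a percentage with one decimal."""
    if not status:
        return 0.0
    codified = sum(1 for s in status.values() if s == "Codified")
    return round(100 * codified / len(status), 1)


live_assets = {  # hypothetical inventory pulled from provider APIs
    "bucket-logs": {"location": "EU"},
    "vm-api": {"machine_type": "e2-medium"},
    "disk-tmp": {"size_gb": 50},
    "fw-legacy": {"ports": [22]},
}
declared_assets = {  # hypothetical view derived from IaC state
    "bucket-logs": {"location": "EU"},
    "vm-api": {"machine_type": "e2-small"},
}

statuses = classify_assets(live_assets, declared_assets)
print(statuses, coverage_percent(statuses))
```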

2. Pulumi Service

Pulumi Service (Pulumi Cloud) is the managed control plane for Pulumi’s multi-language IaC framework. It handles state storage, secrets management, policy enforcement, and deployment orchestration for Pulumi stacks written in TypeScript, Python, Go, C#, Java, and YAML. Unlike Terraform-style declarative workflows, Pulumi uses general-purpose languages, which makes it appealing to application teams and platform engineers who want tighter integration between infra code and application logic. Pulumi Service focuses on enabling fast iteration, rich SDK-driven abstractions, and direct integration with modern CI/CD pipelines.

Key Features

1. Multi-Language IaC Execution

- Native execution for Pulumi programs in TypeScript, Python, Go, C#, Java, and YAML

- Handles preview (plan) and update (apply) operations

- Tracks stack history, diffs, and outputs

- No need for local state files or backend configuration

2. Managed State & Secrets

- State stored and versioned in Pulumi Service

- Encrypted secrets with Pulumi’s built-in KMS or customer-managed KMS

- Automatic checkpointing for previews and updates

- Supports team-based access to individual stacks

3. Cross-Cloud Support Through Pulumi Providers

- Providers for AWS, Azure, GCP, Kubernetes, VMware, and hundreds of SaaS platforms

- Rich SDKs for building higher-level abstractions

- Enables “component” patterns for reusable infra modules

4. Policy-as-Code (CrossGuard)

- Supports policy packs written in TypeScript or Python, filling a role similar to OPA policies

- Policies run during preview/update

- Blocks non-compliant deployments (e.g., untagged resources, open security groups)

- Enforces rules across all stacks in an organization

5. VCS & CI/CD Integration

- GitHub, GitLab, Bitbucket, and Azure DevOps integrations

- Stack updates are triggered automatically on PR or merge

- Works cleanly with GitHub Actions, GitLab CI, and Azure Pipelines

- Supports self-hosted runners and ephemeral CI agents

6. Deployment Pipelines (Pulumi Deployments)

- Fully managed execution environment similar to Terraform Cloud runs

- Supports webhook triggers, scheduled deployments, and manual approval steps

- Ideal for teams that want cloud-hosted runs without maintaining CI runners

7. Stack Management & Access Control

- Per-stack RBAC (admin, writer, reader)

- Environments for grouping config values across stacks

- Team-based visibility and audit logs

- Fine-grained control for large orgs with multiple projects

8. Native Kubernetes Support

- Provider integrates with kubeconfig or cluster credentials

- Can model, deploy, and update workloads as code

- Works well with GitOps or hybrid approaches

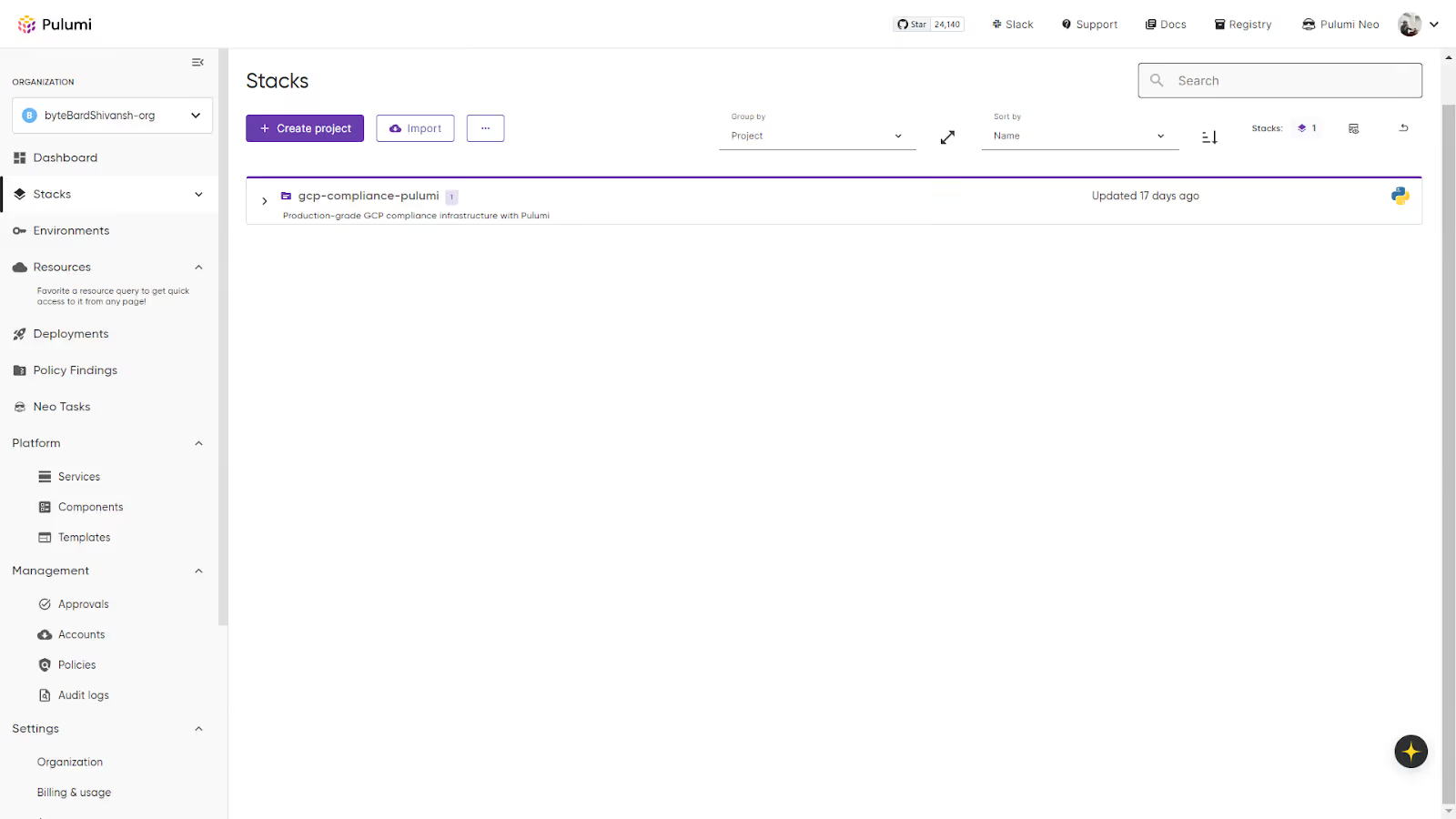

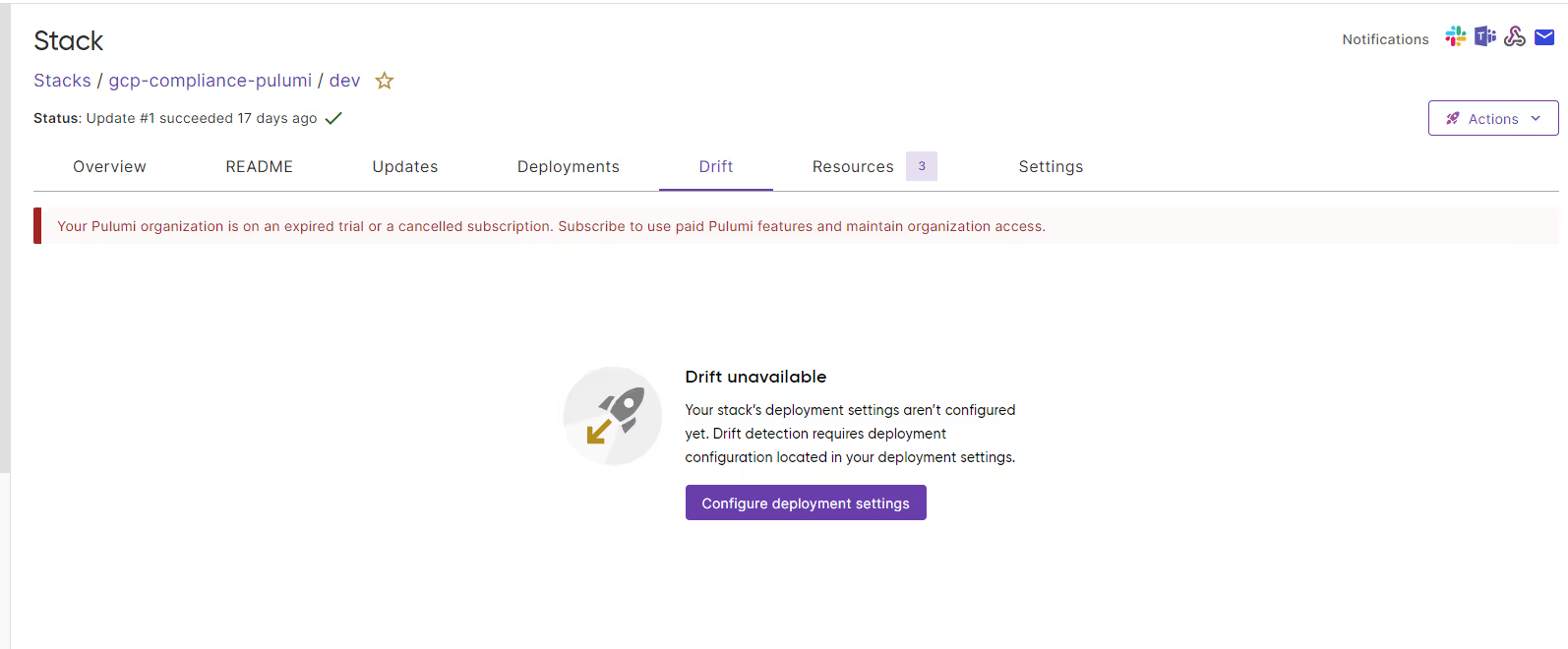

Pulumi Service: Hands-On View

When you open Pulumi Service, you start at the organisation level. The sidebar on the left is the main navigation for stacks, environments, resources, deployments, and admin settings. The dashboard below displays the organisation layout, including the navigation sidebar and available stacks:

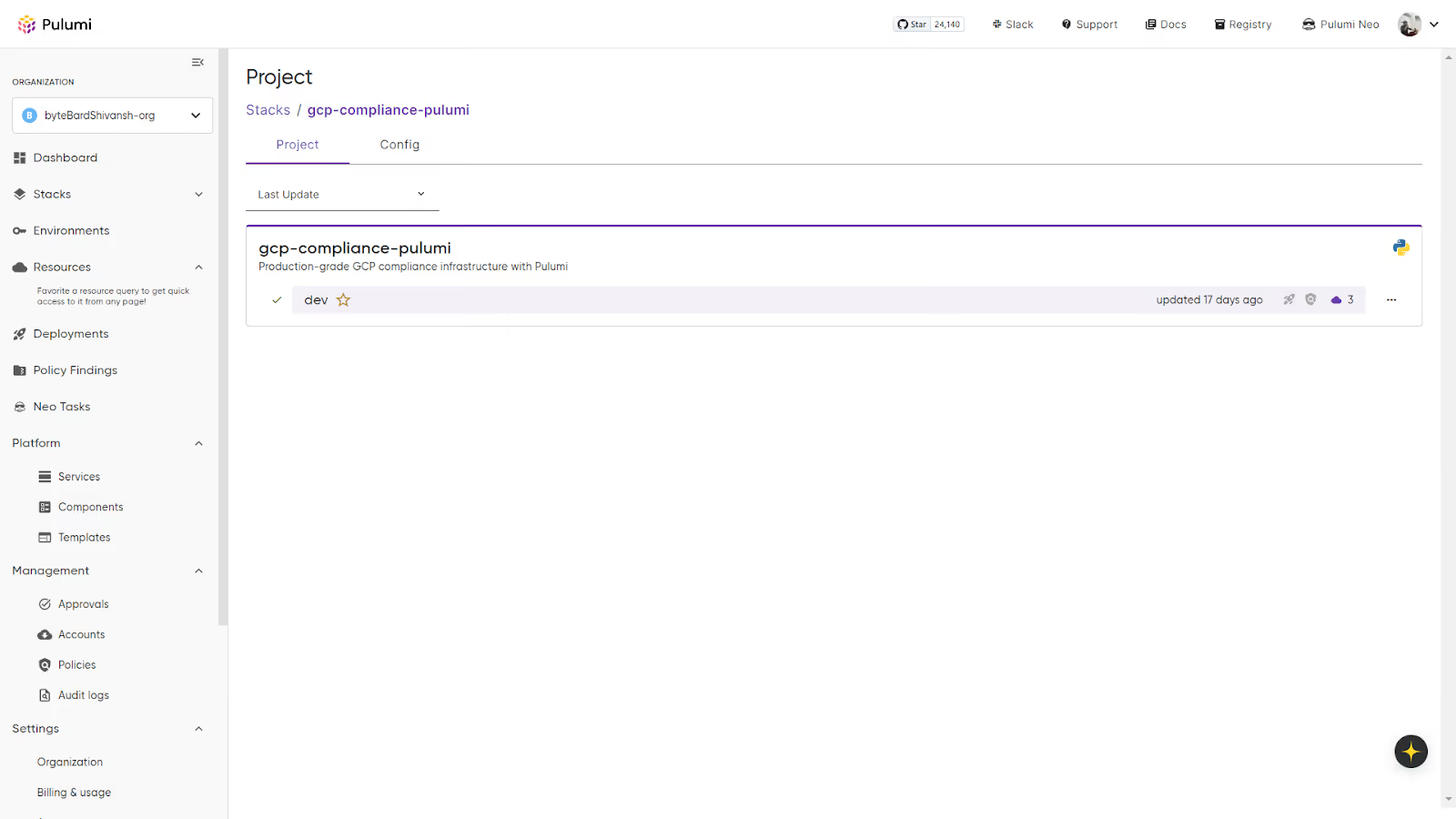

Inside the organisation, a project such as gcp-compliance-pulumi might contain one or more stacks. In this example, the project has a single dev stack. Pulumi treats each stack as its own environment with independent configuration, state, and history.

Working Inside a Stack

Opening the dev stack takes you to the operational panel that you’ll use most often. The panel below displays the project, including its stacks and the latest update information.

A few areas are worth calling out:

Overview

- Quick status of the last deployment

- Who triggered it

- How long it took

Updates

This becomes the main place you inspect changes.

It shows:

- All previews and updates

- Full execution logs

- Diffs showing exactly what changed

- A complete audit trail of the environment

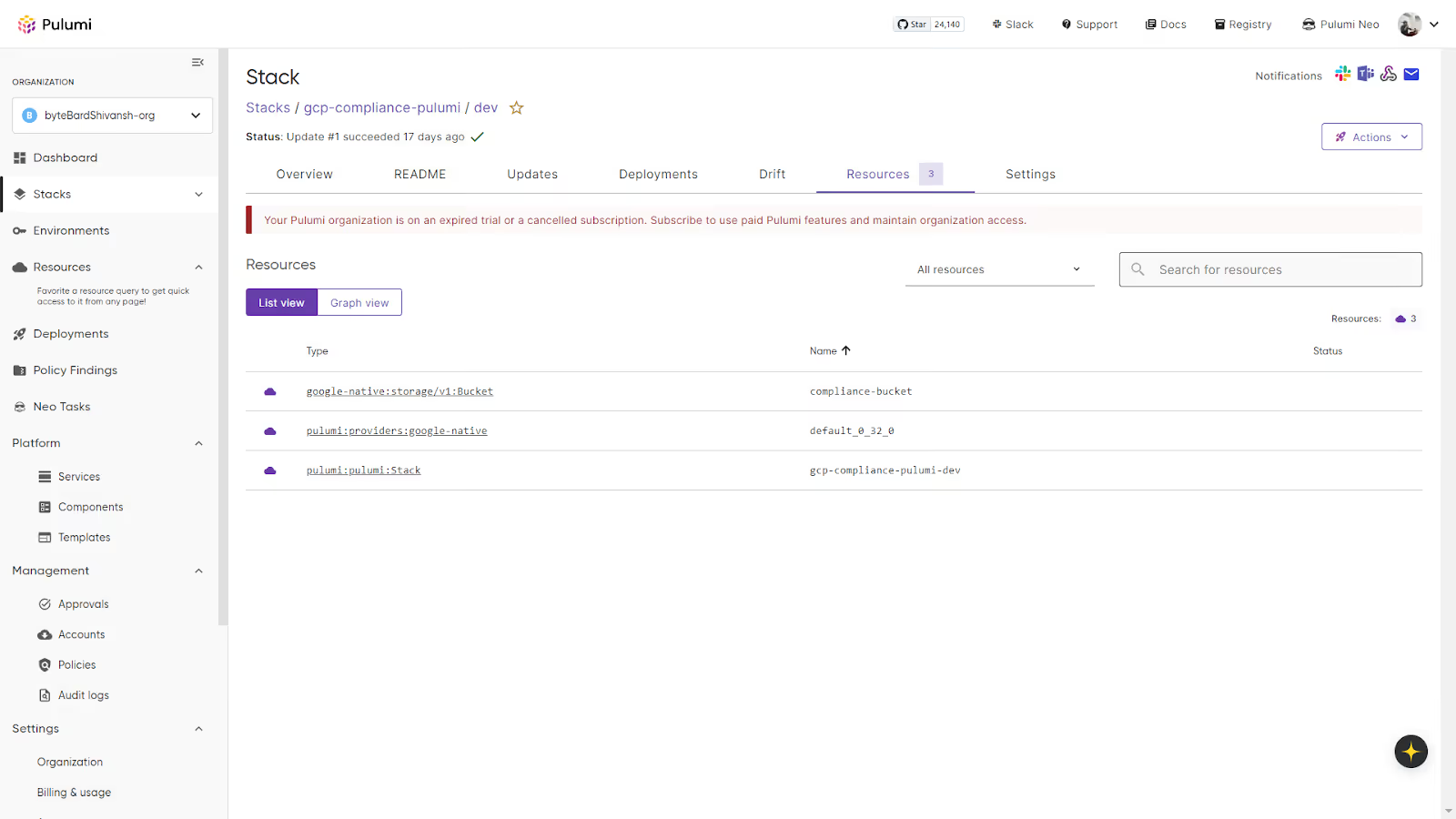

Resource Inventory

The Resources tab shows what Pulumi is managing in the cloud for this stack.

For example, the resources listed in the above snapshot:

- A cloud storage bucket

- The cloud provider instance

- The Pulumi stack root

Pulumi allows switching between list and graph views to make relationships easier to understand.

Drift Detection

Pulumi identifies differences between IaC state and live cloud state. If someone changes a resource directly through the cloud console or CLI, it appears here.
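The underlying idea is an attribute-by-attribute comparison of what the code declares against what the provider reports. The sketch below shows that comparison on plain dictionaries; it is a generic illustration with invented attribute names, not Pulumi's implementation.

```python
def attribute_drift(declared: dict, live: dict, prefix: str = "") -> dict:
    """Return {attribute_path: (declared_value, live_value)} for each difference."""
    drift = {}
    for key in sorted(set(declared) | set(live)):
        path = f"{prefix}{key}"
        want, have = declared.get(key), live.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            # Recurse so nested settings are reported by their full path.
            drift.update(attribute_drift(want, have, prefix=f"{path}."))
        elif want != have:
            drift[path] = (want, have)
    return drift


declared = {"machine_type": "e2-small", "labels": {"env": "prod"},
            "deletion_protection": True}
live = {"machine_type": "e2-medium", "labels": {"env": "prod", "temp": "1"},
        "deletion_protection": True}

for path, (want, have) in attribute_drift(declared, live).items():
    print(f"{path}: declared={want!r} live={have!r}")
```

Reporting the exact path and both values is what turns "something drifted" into an actionable diff.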

The workflow with Pulumi Service stays simple: you push code, Pulumi runs a preview, and you review the diff in the Updates panel before applying the change. Once the update completes, the Resource view reflects the new state, and any out-of-band edits are picked up in the Drift section. The result is a clean and transparent environment with a clear state, a full history of updates, and an accurate mapping between your code and the cloud resources it manages.

Now, let's move on to the next one on the list, which is GitHub Actions.

3. GitHub Actions

GitHub Actions works well when you want full control over how Terraform, OpenTofu, or Pulumi runs without relying on a dedicated IaC platform. Everything happens inside your repository, and the workflows become part of your version-controlled automation. This is a good fit for teams already using GitHub Enterprise, where application and infrastructure delivery share the same CI/CD system.

Key Features

1. CI/CD as the IaC Engine

- Executes Terraform, OpenTofu, or Pulumi using GitHub-hosted or self-hosted runners

- Simple YAML workflow definitions

- Ability to pin versions of toolchains and providers

- Credentials supplied through GitHub Actions secrets, or through OIDC for short-lived cloud auth

2. Flexible Workflow Design

- Plans can run automatically on pull requests

- Applies can run on merges, approvals, or manual triggers

- Supports matrix runs for multi-environment deployments

- Easy to insert linting, security scans, OPA checks, and backend configuration

3. Policy Enforcement

- OPA/Conftest integration for guardrails

- Policy checks can run before plan, after plan, or as a separate job

- Works well with organization-wide reusable workflows

4. State Management

- No built-in state backend (unlike Terraform Cloud or Pulumi Service)

- You choose where to store state:

  - S3 + DynamoDB (state locking)

  - Azure Blob Storage (locking via blob leases)

  - GCS (built-in locking)

  - Terraform Cloud remote backend if needed

5. Security and Access Control

- OIDC integration with AWS, Azure, and GCP for short-lived credentials

- Self-hosted runners for private network deployments

- Repository- and org-level permissions create clear access boundaries

6. Event-Driven Automation

- Trigger runs on PRs, merges, tags, schedules, or manual dispatch

- Works well for GitOps-style workflows where git is the single source of truth

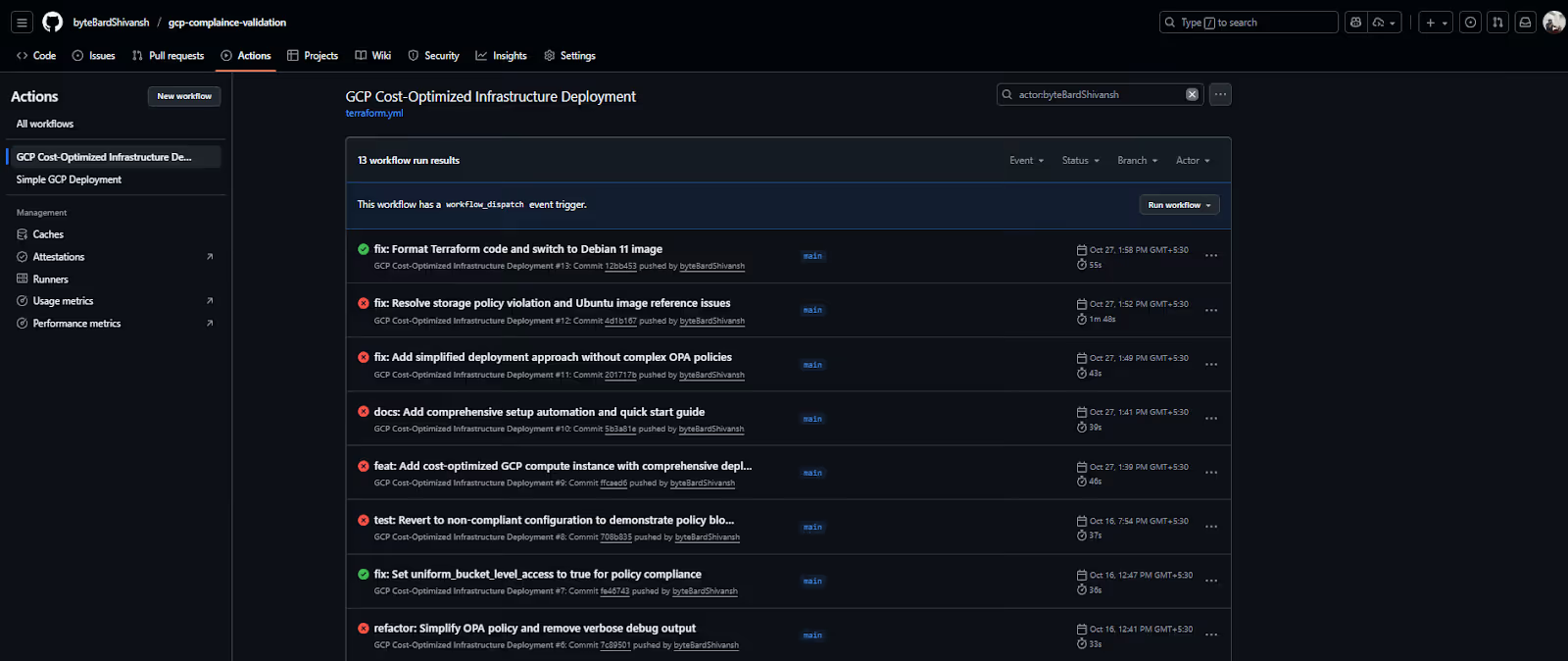

GitHub Actions: Hands-On Workflow Walkthrough

GitHub Actions works well when you want Terraform and OPA validation to run entirely inside the repository. The workflow acts as the orchestration layer, generating the Terraform plan, evaluating it against OPA policies, and determining whether a deployment is allowed to proceed. Every commit or pull request triggers a new run, so you get a clean history of which changes passed compliance and which ones were blocked.

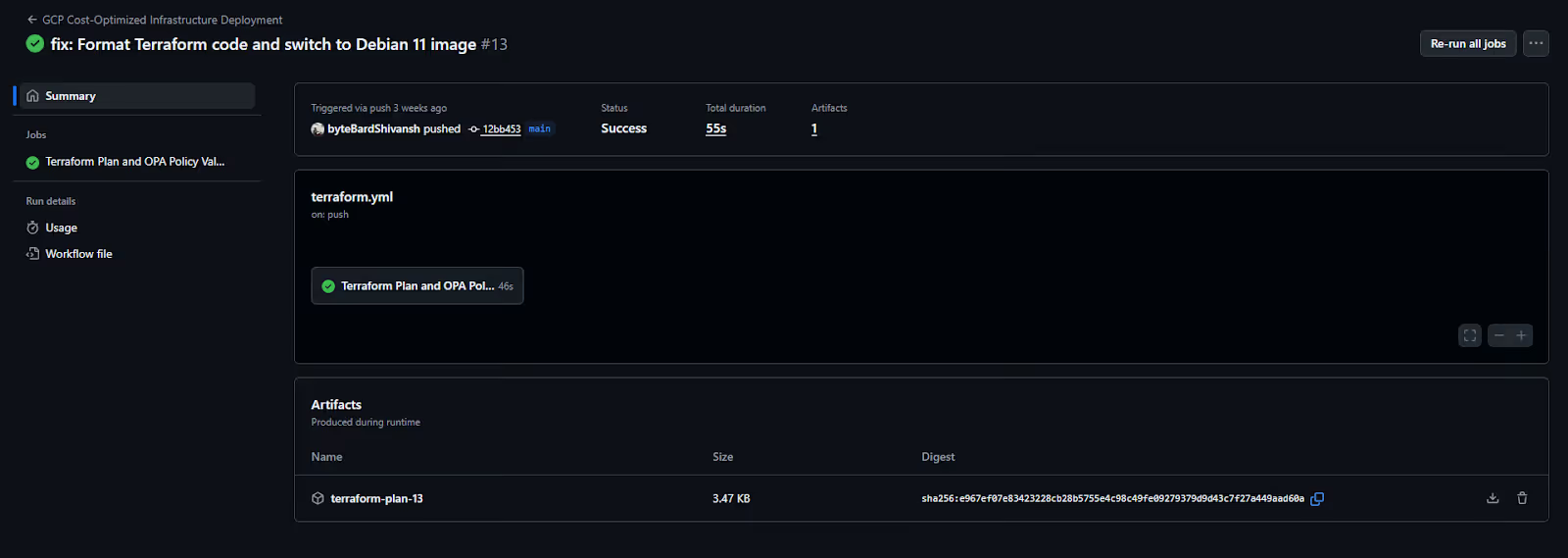

Here’s the workflow run list for the Terraform + OPA validation setup, showing commit messages, status, duration, and the branch that triggered each run:

This view gives you a quick sense of the pipeline’s health. Each row indicates whether the plan passed the OPA checks, the duration of the run, and the commit that triggered it. When you’re scanning for failures or checking if a change cleared compliance, this is usually the first place you look.

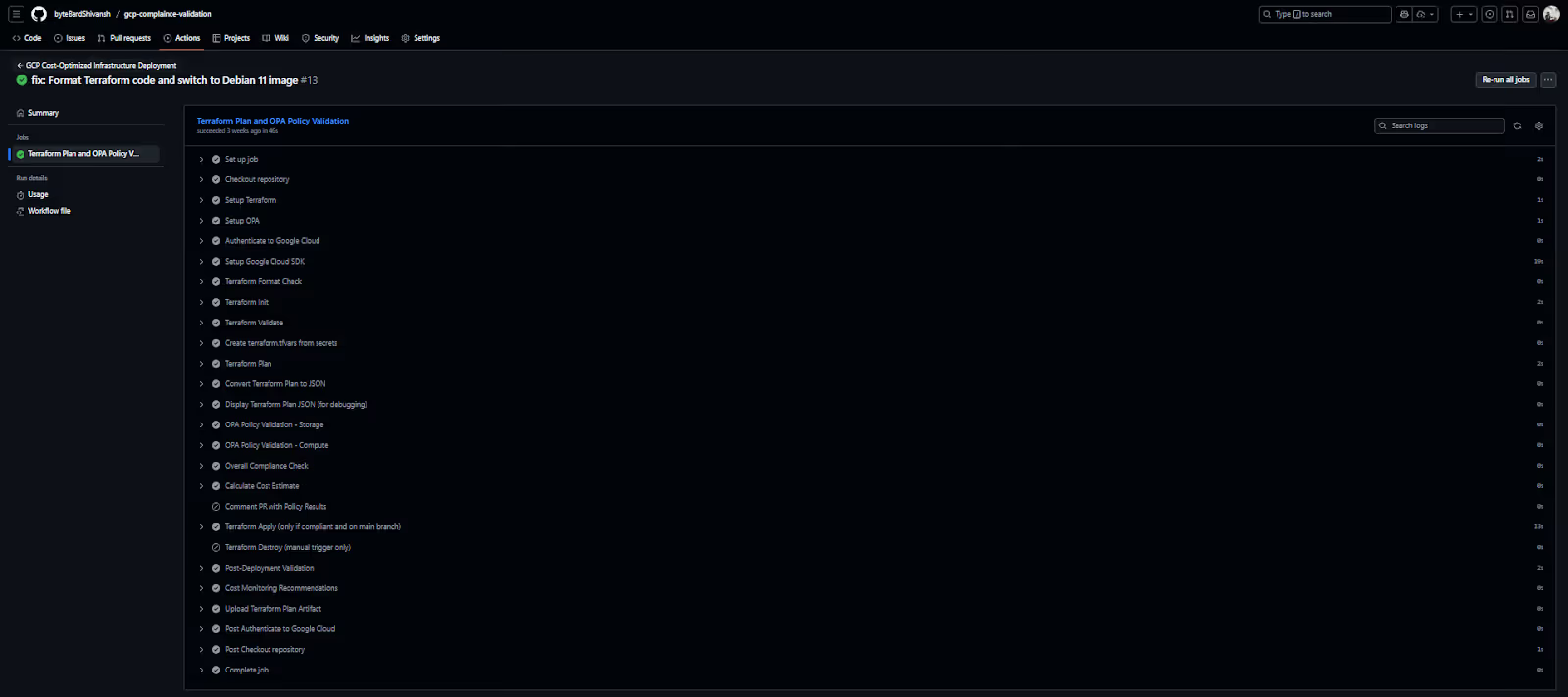

When you open a specific run, you get the detailed job execution. The workflow starts by checking out the repo, authenticating to GCP through GitHub’s OIDC integration, and setting up Terraform and OPA. After authentication, Terraform runs its normal sequence: format check, validate, init, and plan. The plan is then converted to JSON so OPA can evaluate the actual resource definitions.

This part shows each step in order, along with logs for Terraform and OPA. It’s easy to trace exactly what happened during the run, whether the GCP auth succeeded, whether the plan generated cleanly, and which policy rule caused a failure if the run stopped early. This is where you debug compliance issues or Terraform configuration mistakes.
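To give a feel for the policy step, here is a sketch of a gate that reads the JSON plan, summarises the planned actions, and fails the job if a protected resource type would be deleted. It is written in Python as a stand-in for the Rego rules an OPA step would evaluate; the protected types and the sample plan are assumptions.

```python
from collections import Counter

# Hypothetical list of resource types that must never be destroyed by CI.
PROTECTED_TYPES = {"google_storage_bucket", "google_sql_database_instance"}


def summarise_actions(plan: dict) -> dict:
    """Count planned actions (create, update, delete, ...) across the plan."""
    counts = Counter()
    for rc in plan.get("resource_changes", []):
        for action in rc["change"]["actions"]:
            if action != "no-op":
                counts[action] += 1
    return dict(counts)


def protected_deletes(plan: dict) -> list[str]:
    """Addresses of protected resources the plan would delete or replace."""
    return [
        rc["address"]
        for rc in plan.get("resource_changes", [])
        if rc["type"] in PROTECTED_TYPES and "delete" in rc["change"]["actions"]
    ]


def gate(plan: dict) -> int:
    """Exit code for the CI step: 0 lets the job continue, 1 fails it."""
    return 1 if protected_deletes(plan) else 0


sample_plan = {
    "resource_changes": [
        {"address": "google_storage_bucket.audit", "type": "google_storage_bucket",
         "change": {"actions": ["delete", "create"]}},  # a replacement
        {"address": "google_compute_instance.api", "type": "google_compute_instance",
         "change": {"actions": ["create"]}},
        {"address": "google_compute_network.main", "type": "google_compute_network",
         "change": {"actions": ["no-op"]}},
    ]
}
print(summarise_actions(sample_plan), protected_deletes(sample_plan), gate(sample_plan))
```

In a workflow, a step would pass the result of `gate` to `sys.exit`, so a non-zero code stops the job before any apply stage runs.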

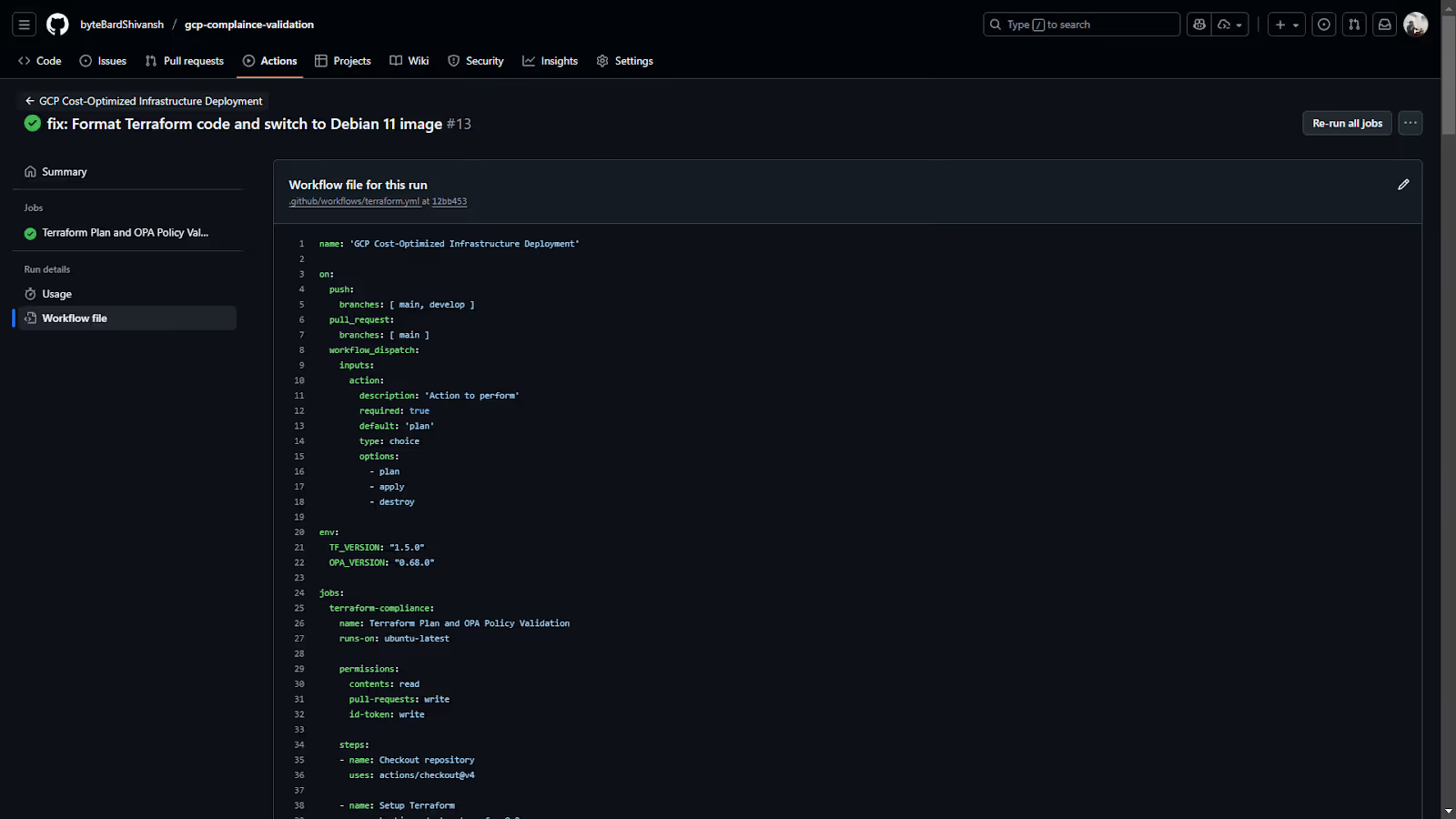

All of this behavior is defined in the workflow file inside the repo. It contains the triggers, environment variables, tool versions, OIDC permissions, and the exact Terraform + OPA execution logic. Because the workflow is versioned alongside the infrastructure code, any changes to how deployments are validated also go through normal review.

This file drives the entire process. You can see the job definition, the steps for Terraform formatting and planning, and the commands that run OPA against the rendered JSON plan. It’s straightforward to update when you want to tighten policies, add extra stages, or fine-tune how the plan is generated.

At the end of a run, GitHub Actions uploads the Terraform plan file as an artifact. This gives you a local copy of the plan without needing to re-run Terraform. If you’re tuning OPA policies, this makes it easier to inspect the exact JSON the policy engine evaluated. The summary page shows the artifact, the commit reference, and the overall status.

This is the final checkpoint for the run. If the apply stage is enabled for the main branch, this is where you confirm that the plan passed all policy checks before being deployed. If the workflow blocked a deployment, the summary tells you exactly why.

4. GitLab

GitLab fits well when infrastructure automation needs to live directly alongside code review, approvals, and security checks. Its CI/CD system is built around a simple pipeline model defined in .gitlab-ci.yml, which makes it easy to embed Terraform, OpenTofu, or Pulumi flows into existing DevSecOps practices. GitLab’s strength is that everything — merge requests, runners, permissions, security scans, and compliance rules — lives in a single platform. For organisations running self-hosted GitLab or operating in air-gapped networks, this becomes a natural place to centralise IaC automation.

Key Features

- Pipeline-Driven IaC Execution: GitLab runners (hosted or self-hosted) execute Terraform and policy checks directly from the repo. Pipelines can enforce plan-on-MR and apply-on-merge patterns, making IaC changes follow the same workflow as application code.

- Built-In Approval and Compliance Controls: Merge request approvals, required reviewers, protected branches, and security policies fit neatly around IaC changes. This allows infrastructure deployments to adopt the same gated workflows that production applications already use.

- Strong Self-Hosted Support: GitLab is widely used in environments that can’t rely on SaaS platforms. Self-hosted runners allow Terraform to execute inside private networks, and the entire system can operate without exposing state or credentials externally.

- Flexible Integration with Policy Engines: OPA, Conftest, and custom scripts run natively inside the pipeline. Policy failures can block merges or stop pipelines long before apply, giving teams full control over enforcement.

- Native Secrets and Environment Scoping: GitLab’s CI variables and environment scoping allow per-environment cloud credentials, region values, or sensitive settings to flow into Terraform without leaking across projects.

GitLab: Hands-On Workflow Walkthrough

GitLab CI/CD fits well when you want Terraform to run through the same review and approval path as application code. The CI pipeline becomes the execution layer — it initializes Terraform, validates the configuration, generates the plan, and optionally applies changes after approval. Every run is tied to a commit or merge request, so you get a clean and traceable history of infrastructure changes.

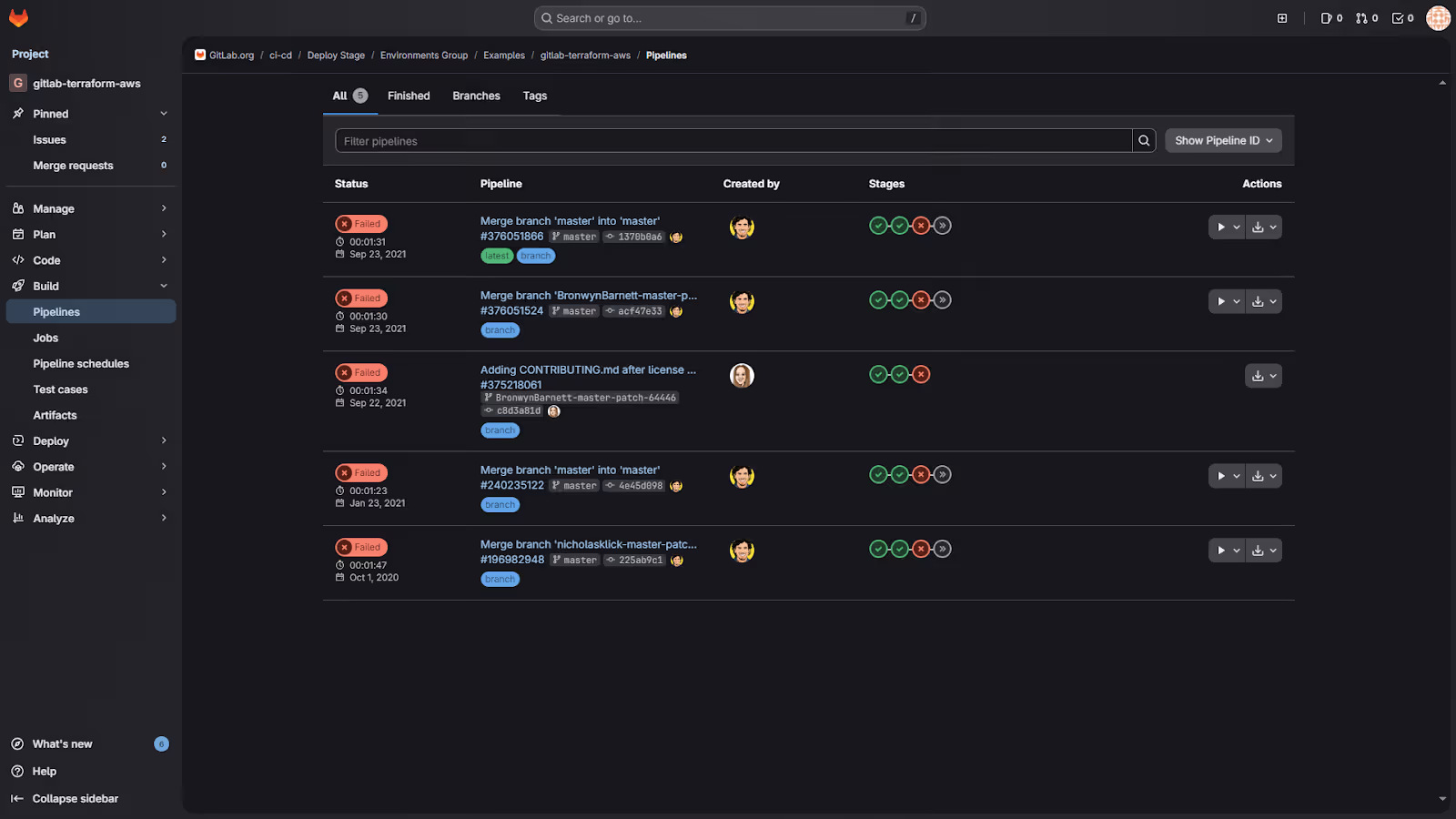

Here’s the pipeline run list for a Terraform project, showing status, run time, and the commit that triggered each workflow:

This view gives a quick sense of how healthy the Terraform workflow is. You can immediately see which runs failed during validation or plan, which ones passed, and how long each stage took. When a change breaks a module or provider configuration, this is the first place you notice it.

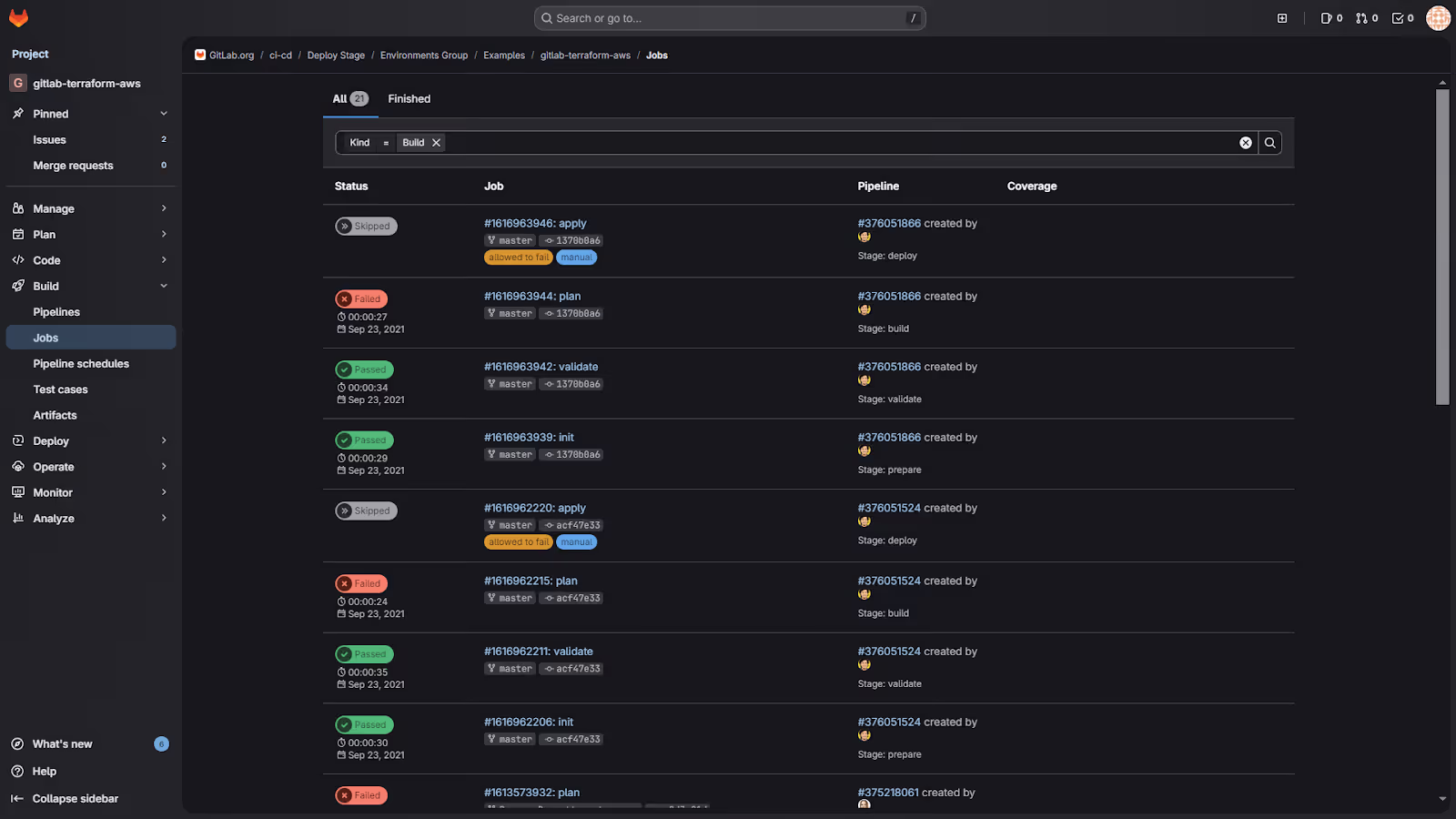

Opening a specific pipeline takes you to the job-level breakdown. GitLab splits Terraform into discrete stages: init, validate, plan, and apply, and each stage logs its own output. This makes troubleshooting easier because you can see exactly where the run stopped and why.

The job list shows which stages passed, which ones were skipped, and which ones failed. For plan jobs, GitLab attaches the generated plan as an artifact, so reviewers can inspect the diff directly inside the interface. The apply stage is intentionally manual, which prevents unintended changes from being pushed into production.
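The passed/failed/skipped/manual behaviour can be modelled in a few lines. This is a toy simulation of stage gating, assuming that a failure skips everything after it and that apply waits for an explicit approval; it does not call GitLab or Terraform.

```python
def run_pipeline(stages: list[str], results: dict, apply_approved: bool = False) -> dict:
    """Return a status per stage: passed, failed, skipped, or manual."""
    status = {}
    failed = False
    for stage in stages:
        if failed:
            status[stage] = "skipped"      # nothing runs after a failure
        elif stage == "apply" and not apply_approved:
            status[stage] = "manual"       # waits for a human to trigger it
        elif results.get(stage, True):     # stages default to succeeding
            status[stage] = "passed"
        else:
            status[stage] = "failed"
            failed = True
    return status


STAGES = ["init", "validate", "plan", "apply"]
print(run_pipeline(STAGES, {"validate": False}))
print(run_pipeline(STAGES, {}))
print(run_pipeline(STAGES, {}, apply_approved=True))
```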

The workflow behind this setup is defined in a small .gitlab-ci.yml file. It uses GitLab’s Terraform image and the built-in gitlab-terraform wrapper. The stages follow a simple pattern: initialize the backend, validate the structure, create the plan, export it for reviewers, and allow a manual apply on the main branch. This keeps the pipeline short while still enforcing safe workflows around production changes.

End-to-end, the flow is straightforward: push a change, let GitLab handle init/validate/plan, review the plan in the MR, and trigger apply only when you're confident the change should go out. Everything (the state backend, logs, plans, approvals, and audit trail) stays tied to the project, which keeps the Terraform lifecycle clean and easy to manage.

5. Azure DevOps

Azure DevOps works well when you want your infrastructure workflows to live in the same place as your source control, CI/CD, access control, and approvals. Everything runs under Azure AD, and pipelines authenticate through Service Connections or managed identity, so Terraform or Pulumi can access Azure without storing long-lived credentials. For teams already invested in Azure, this keeps the IaC lifecycle tightly integrated with the rest of the platform.

Key Capabilities

- YAML-Based Terraform Execution: Azure Pipelines runs Terraform, Pulumi, or OpenTofu on Microsoft-hosted or self-hosted agents. The flow stays consistent: pull the repo, authenticate, run init/validate/plan, and push changes through an approval gate before apply.

- Strong Azure Integration: Service Connections and Key Vault references keep secrets out of repos. Azure Policy and Resource Graph can run alongside the pipeline to enforce baseline configuration and detect drift.

- Controlled Environments and Approvals: Environments like dev, stage, and prod can enforce manual approval, checks, or business gates before Terraform is allowed to modify infrastructure.

- Full Traceability: Pipelines, logs, variable groups, identity, and permissions stay under one system, making it easy to trace any infrastructure change back to the commit and approval that triggered it.

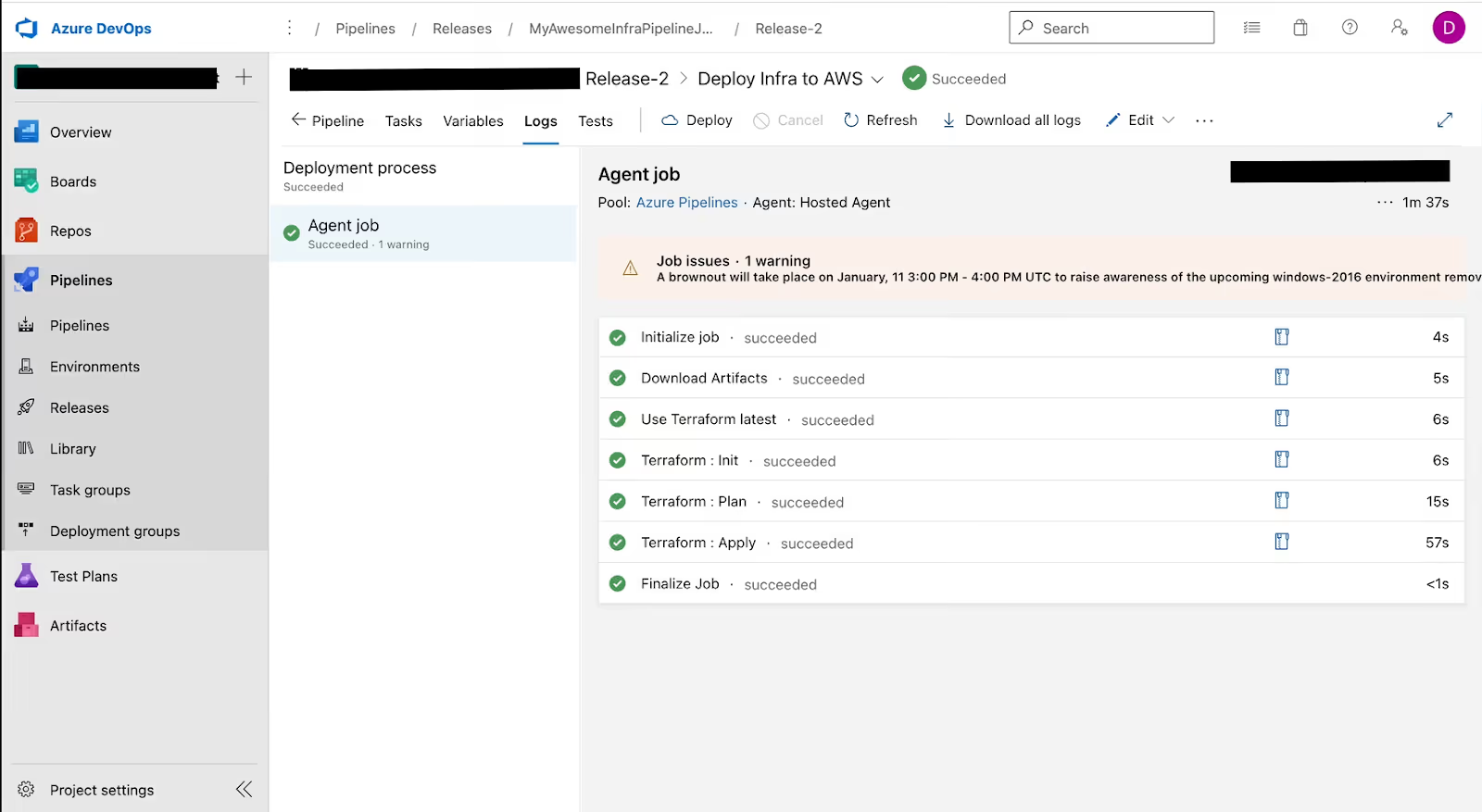

Terraform Orchestration via Azure DevOps Pipelines

Azure DevOps fits well when you want Terraform to run inside a fully managed pipeline tied to Azure AD identity. The release pipeline becomes the control plane: it pulls artifacts, installs Terraform, authenticates using a Service Connection, and runs the usual init/plan/apply sequence. Approvals, RBAC, and audit logs all sit in the same place, which keeps the flow predictable and tightly governed.

Below is how a typical Terraform deployment looks during execution — each task running on a hosted agent, with clear timing and status:

In this run, the pipeline goes through a clean Terraform lifecycle. The agent starts up, downloads the build artifacts, installs the pinned Terraform version, and then runs the three core stages: init, plan, and apply. Each step has its own log stream, so if something fails (a provider lock, backend auth, or a plan diff), you know exactly which task broke. The apply step runs last and is usually protected by an environment approval, which means no changes hit the cloud unless someone signs off.

This setup gives you a stable IaC workflow inside Azure DevOps: controlled identities, no long-lived secrets, deterministic runs, and a complete run history that ties every resource change back to a release and approver.

6. Atlantis

Atlantis is a self-hosted system that runs Terraform from pull requests. It listens to webhooks from GitHub, GitLab, or Bitbucket, detects which directories were changed, and runs terraform plan automatically. The plan output is posted back into the PR, and when the change is approved, a maintainer triggers terraform apply using a PR comment. Everything runs inside your own network, using your own credentials, which makes it a solid fit for teams that want GitOps-style workflows without depending on a cloud runner.
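The "detects which directories were changed" step can be pictured as mapping the files touched by a pull request onto known project directories. The sketch below is a simplified illustration of that idea, not Atlantis's actual detection logic; the file suffixes and directory names are assumptions.

```python
from pathlib import PurePosixPath

TERRAFORM_SUFFIXES = {".tf", ".tfvars"}  # assumed set of files that trigger a plan


def changed_project_dirs(changed_files: list[str], project_dirs: list[str]) -> list[str]:
    """Return the project directories affected by the changed files."""
    projects = {PurePosixPath(p) for p in project_dirs}
    hits = set()
    for name in changed_files:
        path = PurePosixPath(name)
        if path.suffix not in TERRAFORM_SUFFIXES:
            continue  # ignore docs, scripts, and other non-Terraform files
        # parents is ordered closest-first, so the most specific project wins.
        for parent in path.parents:
            if parent in projects:
                hits.add(str(parent))
                break
    return sorted(hits)


changed = [
    "envs/prod/network/main.tf",
    "envs/prod/network/README.md",
    "modules/vpc/variables.tf",
    "envs/dev/terraform.tfvars",
]
projects = ["envs/prod/network", "envs/dev", "envs/prod"]
print(changed_project_dirs(changed, projects))
```

Note that the change under `modules/vpc` matches no project here; deciding which projects to re-plan when a shared module changes is something you would have to configure explicitly.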

Key Features

- PR-Driven Terraform Runs: Atlantis turns every pull request into a controlled Terraform workflow. plan happens automatically or on command, and apply only runs when a maintainer asks for it.

- Workspace and Directory Locking: When Atlantis is running a plan or apply, it locks that Terraform workspace so no other PR can update the same state at the same time. This prevents conflicting updates and makes state behaviour predictable.

- Configurable Workflows: You define behaviour in an atlantis.yaml or through server-side config. You can control which directories are valid Terraform projects, which commands are allowed, and how plans and applies run.

- Self-Hosted Execution: Because Atlantis runs on your infra (VM, container, or Kubernetes), Terraform executes inside the environment where your credentials and network access already exist. No secrets leave your boundary.

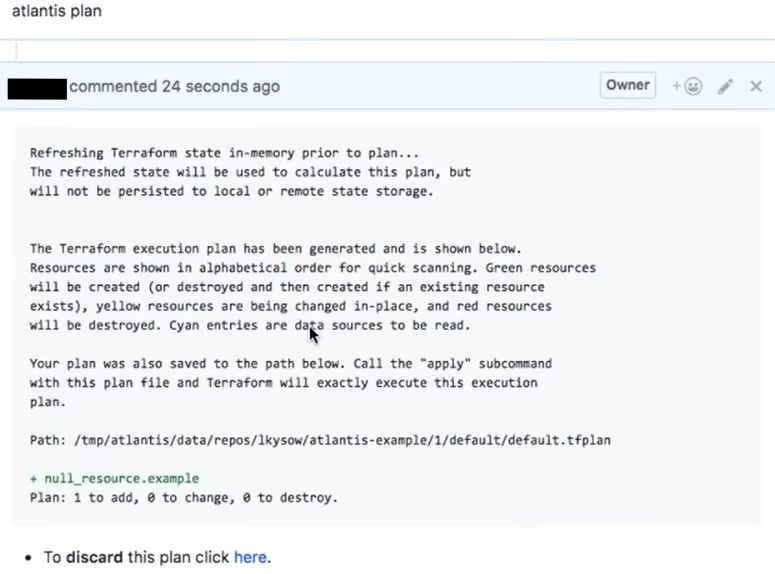

Atlantis: Hands-On Workflow

Atlantis makes Terraform fully PR-driven. Every change starts with a pull request, and Atlantis handles the plan and apply cycle directly inside the PR thread. This keeps the workflow predictable: open a PR, get a plan, review, approve, and apply. No one runs Terraform locally, and no state operations happen outside the controlled flow.

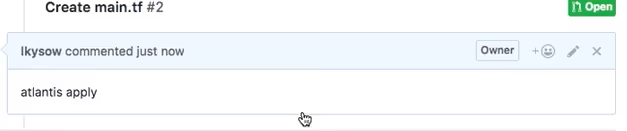

When a pull request is opened, Atlantis automatically runs a plan for the directories it detects as Terraform projects. The plan output is posted straight into the PR so the team can review it before merging. Here’s what that looks like:

This is the full Terraform diff generated by Atlantis. It includes the usual breakdown of resources to add, change, or destroy, along with the saved plan file path. Atlantis locks the project while generating this, so no other PR can apply changes to the same workspace at the same time. This avoids state conflicts and keeps the workflow deterministic.

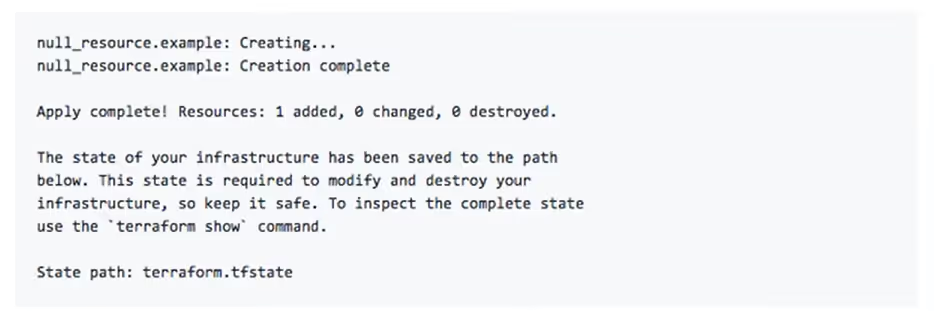

Once the plan looks good, a maintainer triggers the deployment directly from the PR by commenting atlantis apply.

Atlantis listens for this command, validates that the user has permission to run the apply, and then executes the apply step in the same environment where the plan was generated. After the run completes, Atlantis posts the result back into the PR.

You get the final Terraform output, including resource creation details and the updated state path. The PR can then be merged safely, knowing that the infrastructure now matches the code. By default, Atlantis holds the project lock until the pull request is merged or closed, or until the lock is removed manually.

This gives you a clean GitOps flow without any local Terraform runs: every change is reviewed, every plan is visible, and every apply is traceable back to a specific comment in a specific pull request.
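To make the comment-driven part of this flow concrete, here is a simplified sketch of how a PR comment could be turned into a plan or apply request. The parser is hypothetical and much stricter than the real one; it assumes only the plan and apply commands with the -d (directory) and -w (workspace) flags.

```python
def parse_comment(comment):
    """Parse 'atlantis plan|apply [-d DIR] [-w WORKSPACE]' into a dict, or None."""
    tokens = comment.split()
    if len(tokens) < 2 or tokens[0] != "atlantis" or tokens[1] not in ("plan", "apply"):
        return None  # ordinary review comment, or a command this sketch ignores

    parsed = {"command": tokens[1], "dir": None, "workspace": None}
    flags = {"-d": "dir", "-w": "workspace"}
    rest = tokens[2:]
    i = 0
    while i < len(rest):
        if rest[i] in flags and i + 1 < len(rest):
            parsed[flags[rest[i]]] = rest[i + 1]
            i += 2
        else:
            return None  # unknown or incomplete flag: refuse rather than guess
    return parsed
```

Ordinary review comments return None and trigger nothing, which is what keeps every apply traceable to an explicit command in the thread.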

Quick Comparison of Terraform Cloud Alternatives [2026]

The comparison table illustrates how the platforms differ in execution model, governance depth, IaC coverage, and cloud visibility. Choosing one, though, comes down to how well it fits the way your organisation builds and operates infrastructure. To make this evaluation useful in real-world environments, it helps to examine the common IaC architecture patterns used across enterprises and map each tool to the operating model it suits.

Enterprise Architecture Patterns That Shape IaC Platform Choices

In enterprise environments, the way teams structure their infrastructure matters as much as the IaC tool they pick. The platforms reviewed earlier, including Terraform Cloud, Firefly, Pulumi Service, GitHub/GitLab CI, Azure DevOps, and Atlantis, all align differently with the operating models that enterprises ultimately adopt. Below are the patterns that actually influence platform selection in real multi-cloud setups (AWS, Azure, GCP, Kubernetes), written from a practitioner perspective.

1. Layered Infrastructure Model (Foundational, Shared, Application)

Enterprises avoid monolithic IaC by separating layers with different change velocities:

- Foundational: identity, org policies, networking, security baselines. Usually Terraform/OpenTofu, executed through controlled runners like GitLab, Azure DevOps, or Firefly-managed runners.

- Shared Services: databases, messaging, monitoring, registries. Often managed by central teams with stricter promotion workflows.

- Application: compute, serverless, ephemeral infra. Executed through faster CI pipelines (GitHub Actions, GitLab, Pulumi Deployments).

This model matters because different layers require different execution guarantees (locking, approvals, drift control). Tools like Firefly, GitLab, and Azure DevOps align well with stable foundational layers, while app teams often prefer lighter CI workflows or Pulumi.

2. Internal Platform / IaC as a Product

Instead of each team running its own Terraform/Pulumi pipelines, a platform engineering group provides:

- shared runners

- controlled state backends

- policy enforcement (OPA/Rego/Sentinel)

- vetted module catalogs

- service connections and secrets governance

GitHub Actions, GitLab, Azure DevOps, Pulumi Service, and Firefly all integrate into this pattern differently:

- CI systems handle execution and approvals.

- Pulumi Service manages state and CrossGuard policies.

- Firefly adds organization-wide visibility, drift detection, and unmanaged resource identification, acting as a governance platform from console to code.

3. Composable, Versioned Module Architecture

Enterprise IaC moves toward small, independently testable modules rather than giant root modules. Key characteristics:

- versioned module registries

- automated module tests (fmt/validate/plan)

- dependency graphs that avoid hidden coupling

- consistent consumption through pipelines or platforms

This matters for platform selection: Terraform Cloud alternatives must support module-level pipelines, variable inheritance, and cross-repo promotion flows. GitHub/GitLab CI, Firefly, and Azure DevOps all fit differently depending on how opinionated the org wants the workflow to be.
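A module-level pipeline usually starts with the static checks mentioned above. The sketch below builds the fmt and validate steps for one module directory and runs them in order; the function names and the module path are hypothetical. The plan step is left out because it needs real credentials and input variables.

```python
import subprocess


def module_check_commands(module_dir):
    """Build the static checks for one module directory (no credentials needed)."""
    base = ["terraform", f"-chdir={module_dir}"]
    return [
        base + ["fmt", "-check", "-recursive"],            # fail on unformatted files
        base + ["init", "-backend=false", "-input=false"],  # fetch providers, skip state
        base + ["validate"],                                # check the configuration
    ]


def run_module_checks(module_dir, run=subprocess.run):
    """Run each check in order; return the first failing command, or None."""
    for cmd in module_check_commands(module_dir):
        if run(cmd).returncode != 0:
            return cmd
    return None
```

Calling run_module_checks("modules/vpc") in a CI job would require the terraform binary on the runner; the run parameter exists so the logic can be exercised without it.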

4. PR-Driven Change Control (GitOps for Infra)

For regulated environments, every infrastructure change must be:

- reviewed

- planned on PR

- approved

- applied only after merge or a manual gate

- fully auditable and visible

Atlantis, GitHub Actions, GitLab CI, Azure DevOps, and Firefly runners all support this model, but with different strengths (locking, approvals, inventory awareness, policy hooks). This pattern is often the deciding factor between a CI-only approach and a platform with a state-aware execution engine.
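Whatever tool enforces it, the gate itself reduces to a handful of boolean checks. This sketch uses hypothetical names (ChangeRequest, apply_blockers) to show how a pipeline might decide whether an apply is allowed and report why not.

```python
from dataclasses import dataclass


@dataclass
class ChangeRequest:
    reviewed: bool = False
    plan_posted: bool = False
    approved: bool = False
    merged: bool = False
    manual_gate_passed: bool = False


def apply_blockers(change):
    """Return the unmet requirements; an empty list means apply may proceed."""
    checks = [
        (change.reviewed, "not reviewed"),
        (change.plan_posted, "no plan on PR"),
        (change.approved, "not approved"),
        (change.merged or change.manual_gate_passed, "neither merged nor manually released"),
    ]
    return [reason for ok, reason in checks if not ok]
```

Returning the list of reasons rather than a single boolean also gives the audit trail something concrete to record.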

5. Multi-IaC, Multi-Cloud Reality

Few enterprises stay fully Terraform-only. You see combinations like:

- Terraform/OpenTofu for foundational infra

- Pulumi for app-centric infra

- CDK/Bicep for cloud-native teams

- Helm/Kustomize for Kubernetes

- Console-created or third-party-managed resources

This is where tools diverge sharply:

- CI/CD tools: can execute anything, but have no visibility into the cloud state

- Pulumi Service: great for Pulumi, limited for non-Pulumi workflows

- Firefly: optional layer that gives cross-IaC visibility (drift, coverage, inventory), not execution-only

This pattern matters because many “Terraform Cloud replacements” fail once teams adopt multiple IaC frameworks.
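The visibility gap can be expressed as a simple set comparison between what exists in the cloud and what any IaC tool claims to manage. The sketch below is a toy model with made-up resource IDs and a hypothetical iac_coverage function; real inventory tools work from cloud APIs and state files rather than hand-written sets.

```python
def iac_coverage(inventory, managed_by):
    """Compare a cloud inventory with the resources each IaC tool manages.

    inventory: iterable of resource IDs found in the cloud accounts.
    managed_by: dict mapping tool name -> set of resource IDs in its state.
    """
    inventory = set(inventory)
    managed = set().union(*managed_by.values()) & inventory
    unmanaged = sorted(inventory - managed)
    coverage = len(managed) / len(inventory) if inventory else 1.0
    return {"coverage": coverage, "unmanaged": unmanaged}


# Hypothetical account: one VM was created from the console.
report = iac_coverage(
    ["vpc-1", "db-1", "bucket-1", "vm-9"],
    {"terraform": {"vpc-1", "db-1"}, "pulumi": {"bucket-1"}},
)
print(report)
```

A CI system that only executes plans never sees vm-9, which is why execution tooling alone cannot report coverage.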

FAQs

Is Terraform a cloud-native tool?

Terraform isn’t tied to any single cloud. It’s a cloud-agnostic IaC engine that works across AWS, Azure, GCP, Kubernetes, and on-prem providers. You can use it to build cloud-native environments, but it’s not a “cloud-native only” tool.

How much does Terraform Cloud cost?

Terraform Cloud (HCP Terraform) uses a Resources-Under-Management pricing model. The free tier covers 500 resources; beyond that, you’re billed per resource per hour. Costs scale with the number of resources tracked in your state, not the number of runs, which can get expensive in large accounts.
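For a back-of-the-envelope estimate, the arithmetic looks roughly like this. The sketch assumes the 500-resource allowance is subtracted before billing and takes the hourly rate as an input, since the actual rate and allowance rules depend on your plan; treat both as assumptions to check against current pricing.

```python
def estimate_rum_cost(managed_resources, rate_per_resource_hour, hours, free_resources=500):
    """Estimate spend as billable resources x hourly rate x hours (all inputs assumed)."""
    billable = max(0, managed_resources - free_resources)
    return billable * rate_per_resource_hour * hours
```

The useful takeaway is the shape of the formula: spend grows linearly with managed resources and with time, regardless of how often you run plans.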

Is HCP Terraform the same as Terraform Cloud?

Yes. Terraform Cloud has been rebranded under the HashiCorp Cloud Platform as HCP Terraform. The core workflow (remote state, remote runs, workspaces, and policies) remains the same.

What’s the difference between Terraform CLI and HCP Terraform?

Terraform CLI is the local engine you run on your machine or CI runner. HCP Terraform is the managed control plane that handles state, remote execution, policy checks, workspace permissions, and collaboration. Think of CLI as the execution engine and HCP Terraform as the managed orchestration layer on top.
