In a production EKS cluster, worker nodes are often provisioned through an EC2 Auto Scaling Group (ASG) managed with Terraform, CloudFormation, or Pulumi. Suppose the ASG is configured to maintain three [.code]t3.large[.code] instances and runs stateless services such as APIs, background workers, and event consumers.

During a high-traffic event, like a product launch or a spike in background jobs, CPU usage goes up, and new pods can't be scheduled because the nodes are fully allocated.

To unblock workloads, the on-call engineer logs into the AWS Console and manually scales the ASG from 3 to 6 instances. More nodes come online, pods are scheduled, and the service stabilizes.

But this manual change isn’t reflected in Terraform. It's not tracked in code and not part of the desired state.

A few days later, a scheduled CI/CD job runs [.code]terraform apply[.code]. Terraform sees that the ASG's desired capacity should be 3 and scales it back down. Three instances are terminated. Pods get evicted. Services start failing.

No one gets alerted. From Terraform’s perspective, the infrastructure is back to the expected state. But operationally, it just caused an outage.

CloudTrail logs the manual scale-up, but only as a raw API call. There’s no context, no visibility in the pipeline, and no indication that this drift ever happened until it breaks something.

This is the kind of issue that slips through most IaC workflows: a manual fix in production that gets silently undone by automation days later.

What Is ClickOps, and Why Is It a Problem?

ClickOps refers to the practice of making manual infrastructure changes through the AWS Management Console instead of through your infrastructure-as-code (IaC) tooling, usually Terraform or CloudFormation.

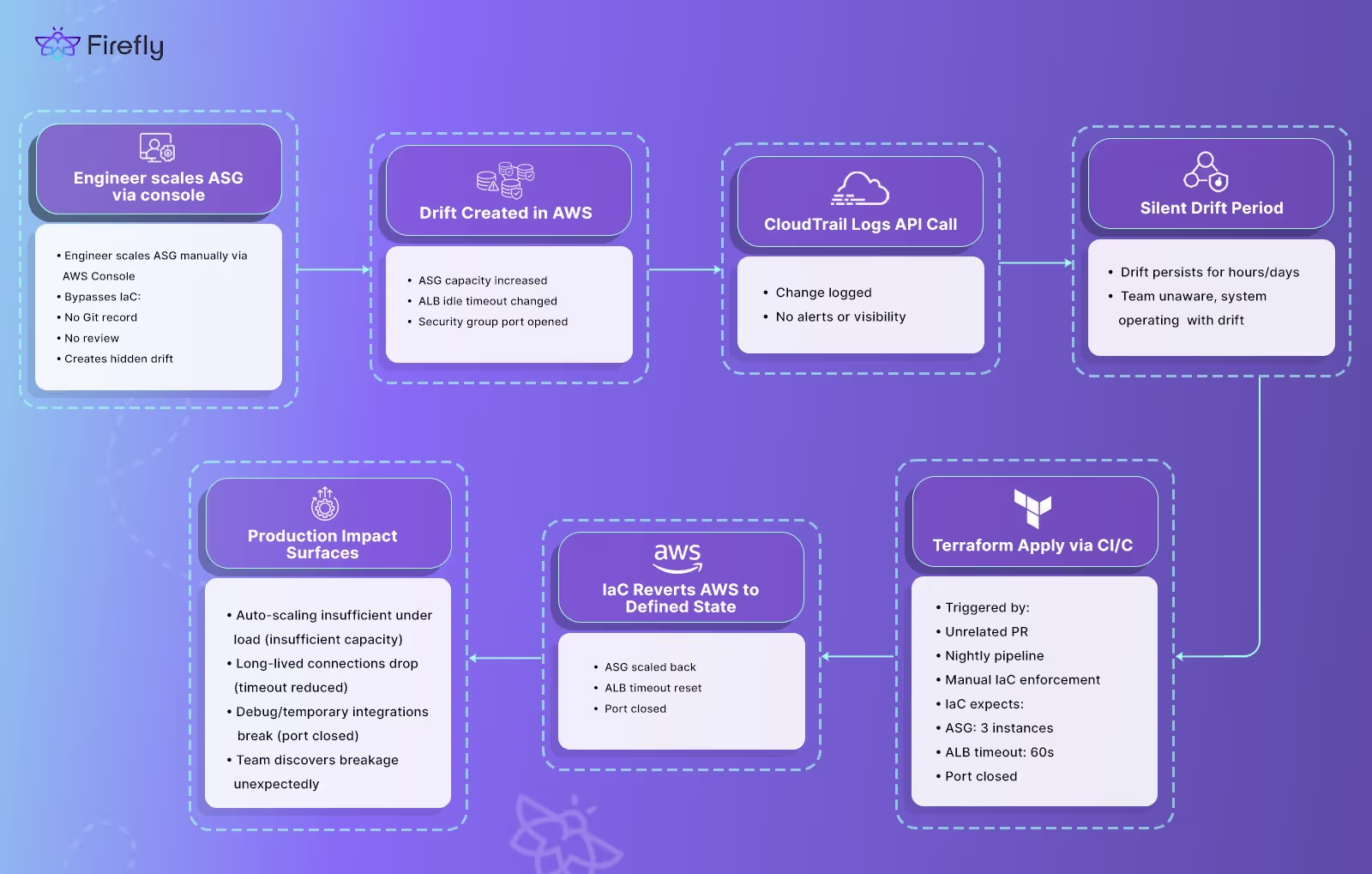

This often happens during an incident or while debugging, when an engineer wants a fast fix and bypasses the Terraform pipeline instead of updating the Terraform code. They log into the AWS Console, make a direct change (e.g., update an S3 bucket policy, modify an ELB target group, or tweak a Lambda timeout), and move on without pushing any changes to the IaC repo. The diagram above illustrates the process: an engineer makes a change in the console, Terraform eventually reverts that change, and no one notices until something breaks.

This bypass usually happens because updating Terraform takes time. In time-sensitive scenarios, the engineer prioritizes immediate impact over long-term consistency.

The ClickOps Problem: Why This Breaks Your Infrastructure

1. No Record in Code

Just like application code should be version-controlled in Git, infrastructure changes should also be logged and reviewed in your IaC files, whether that's Terraform, Pulumi, or another tool. If a developer modifies an IAM policy in the AWS Console to grant access to a new service, that change isn't reflected in your Terraform configuration or state file. Another engineer reviewing the Terraform configuration sees the old policy and assumes it's still applied in production. The docs, the code, and the actual infrastructure are now out of sync, creating wrong assumptions and increasing the chance of misconfiguration.

2. Terraform Reapplies

Let’s say an intern logs into the AWS Console and manually increases the idle timeout of an Application Load Balancer (ALB) from 60 seconds to 180 seconds to resolve client timeout issues. The ALB starts handling requests more reliably, so the intern thinks the problem is fixed.

But the Terraform configuration (say, [.code]alb.tf[.code]) still defines [.code]idle_timeout = 60[.code]. No one updated the code, and importing or refreshing state would not change what the code declares. As a result, the next [.code]terraform apply[.code], perhaps triggered as part of an unrelated change, will detect the 180-second value as drift and reset it to 60 seconds. Unless someone closely inspects the plan output, the revert goes unnoticed. A few days later, clients report timeouts again, and the team wastes time debugging an issue that seemed fixed.

To be clear, [.code]terraform import[.code] brings an existing cloud resource into the Terraform state file. It doesn't change the resource to match the code, and it doesn't update the code to match the resource. It only records the existing resource in Terraform's state. If you skip updating the IaC to match the resource's current settings after import, running [.code]terraform apply[.code] will overwrite the live configuration with what's defined in code. This is why you can't just import and forget: you need to align both the state and the code. For more information on how to properly use [.code]terraform import[.code], check out this detailed guide.

3. No Audit Trail for Security or Compliance

In regulated environments, every infrastructure change must be tracked, reviewed, and tied to a change management process or CI/CD pipeline. When changes are made manually through the AWS Console, they bypass version control, peer review, and any form of documented approval. That breaks the audit trail. There's no clear record of who made the change, whether it was authorized, or whether it aligns with internal security policies.

For example, let's say a developer logs into the AWS Console and modifies a security group to allow inbound SSH (port 22) from their home IP to access a staging EC2 instance. The change takes effect immediately, but it isn't in the Terraform config or the state file, and there's no ticket or PR explaining why it was made. The security group now exposes SSH access, but no one else on the team knows it happened. If your organization is audited against SOC 2 or ISO 27001, this is likely a control failure: a privileged access change with no record or review.
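Catching this kind of change comes down to comparing the live rules with what the code declares. The sketch below is a minimal illustration, not a complete audit tool. It takes one security group shaped like an entry in the EC2 [.code]DescribeSecurityGroups[.code] response and reports CIDRs that can reach TCP port 22 but are absent from a hypothetical allow-list ([.code]DECLARED_SSH_CIDRS[.code]) that stands in for your Terraform configuration. IPv6 ranges are left out for brevity.

```python
from __future__ import annotations

from typing import Any

# Hypothetical allow-list standing in for the SSH CIDRs your Terraform code declares.
DECLARED_SSH_CIDRS = {"10.0.0.0/16"}


def undeclared_ssh_cidrs(security_group: dict[str, Any], declared: set[str]) -> list[str]:
    """Return IPv4 CIDRs that can reach TCP port 22 but are not declared in code.

    `security_group` mirrors one entry of an EC2 DescribeSecurityGroups
    response (IpPermissions -> IpRanges -> CidrIp). Ipv6Ranges are ignored.
    """
    findings: list[str] = []
    for perm in security_group.get("IpPermissions", []):
        protocol = str(perm.get("IpProtocol"))
        if protocol == "-1":
            reaches_ssh = True  # "-1" means all protocols and all ports
        elif protocol in ("tcp", "6"):
            reaches_ssh = perm.get("FromPort", 0) <= 22 <= perm.get("ToPort", -1)
        else:
            reaches_ssh = False
        if not reaches_ssh:
            continue
        for ip_range in perm.get("IpRanges", []):
            cidr = ip_range.get("CidrIp")
            if cidr and cidr not in declared:
                findings.append(cidr)
    return findings


# Live group after the console edit: SSH opened to a single home IP.
live_group = {
    "GroupId": "sg-0123456789abcdef0",
    "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
         "IpRanges": [{"CidrIp": "203.0.113.10/32"}]},
    ],
}
print(undeclared_ssh_cidrs(live_group, DECLARED_SSH_CIDRS))  # ['203.0.113.10/32']
```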

4. Environment Drift Breaks Cost Controls and Resource Governance

When we make changes directly in the AWS Console instead of through Terraform, the infrastructure can quickly drift from the standards defined by the platform team. This breaks governance rules tied to cost tracking, tagging, and environment consistency.

In most organizations, standardization means enforcing a consistent set of rules around things like instance types, required tags, storage classes, and network configurations. Unapproved changes to these configurations, especially ones made manually, bypass these policies.

Consider this: a developer resizes a dev EC2 instance from [.code]t3.medium[.code] to [.code]m5.large[.code] through the AWS Console to troubleshoot a load issue. They don't update the Terraform code or apply the required tags. That instance now violates the sizing policy for the dev environment and is untagged, so cost-tracking tools can't attribute its usage. Meanwhile, staging and production still run [.code]t3.medium[.code] as defined in code, so performance results from dev no longer predict how staging and production will behave. On top of that, auto-remediation scripts that rely on tags or instance types won't detect this outlier. You're left with an unmanaged, overprovisioned resource that's invisible to your cost dashboards and out of sync with the rest of your environments.
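Once sizing and tagging policies are written down, they are easy to check mechanically. Here is a minimal sketch. It assumes a hypothetical [.code]POLICY[.code] table for the dev environment (the allowed types and tag names are illustrative) and instance dictionaries shaped like entries in the EC2 [.code]DescribeInstances[.code] response.

```python
from __future__ import annotations

# Hypothetical platform policy: allowed instance types and mandatory tags per environment.
POLICY = {
    "dev": {
        "allowed_types": {"t3.small", "t3.medium"},
        "required_tags": {"env", "team", "cost-center"},
    },
}


def policy_violations(instance: dict, env: str) -> list[str]:
    """Check one instance (DescribeInstances shape) against the environment policy."""
    rules = POLICY[env]
    problems: list[str] = []
    instance_type = instance.get("InstanceType")
    if instance_type not in rules["allowed_types"]:
        problems.append(f"instance type {instance_type} not allowed in {env}")
    tag_keys = {tag["Key"] for tag in instance.get("Tags", [])}
    # Sorted so the report is stable from run to run.
    for missing in sorted(rules["required_tags"] - tag_keys):
        problems.append(f"missing required tag '{missing}'")
    return problems


# The console-resized dev instance from the example above, carrying only one tag.
resized = {"InstanceId": "i-0abc1234def567890", "InstanceType": "m5.large",
           "Tags": [{"Key": "env", "Value": "dev"}]}
for problem in policy_violations(resized, "dev"):
    print(problem)
```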

The Challenge of Configuration Drift

Everything we've talked about so far (manual changes in the AWS Console, skipped updates to Terraform, inconsistently sized resources across environments) adds up to one core issue: configuration drift.

Drift means your infrastructure is no longer what your code says it is. The Terraform state might show t3.medium, but the cloud is running m5.large. Your security group in Git blocks all SSH, but in AWS, someone opened port 22. These aren’t hypothetical. They’re what actually happens when teams make changes outside the pipeline and don’t reconcile them later.

This breaks more than just infrastructure consistency; it erodes trust. Teams assume environments are in sync when they’re not. Drift makes debugging harder, auditing messy, and automation brittle.

The real problem isn’t that changes happen. It’s that you don’t have visibility into them until they break something.

AWS gives you some tools to track changes. But if you've used CloudTrail or AWS Config, you know the truth: they record a lot, but out of the box they don't tell you whether a change matches your code.

Let's break down what AWS's native tools can and can't tell you about drift in real-world systems.

Why AWS Alone Is Not Enough

AWS offers several tools that provide visibility into your cloud environment: CloudTrail, Config, and EventBridge. However, each has limitations when it comes to detecting and managing configuration drift and unauthorized changes, especially those made outside of Infrastructure as Code (IaC) workflows.

AWS CloudTrail

AWS CloudTrail records API calls made in your account, including actions taken through the AWS Management Console, CLI, and SDKs. It captures details such as the identity of the API caller, the time of the API call, the source IP address, and the request parameters. While this information is valuable for auditing and compliance, CloudTrail does not provide a straightforward way to see the before-and-after state of a resource.

For example, if an EC2 instance type is changed, CloudTrail logs the API call but doesn't show the previous and new instance types side by side. This makes it challenging to quickly assess the impact of changes and identify configuration drift.
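To make the gap concrete, below is an abbreviated, illustrative CloudTrail record for an instance-type change. The field values are made up, real records carry many more fields, and the exact shape of [.code]requestParameters[.code] varies by API. The sketch extracts who, when, and what was requested, and shows that the previous instance type appears nowhere in the record.

```python
# Abbreviated, illustrative CloudTrail record for the instance-type change.
# Values are made up; real records include many more fields.
record = {
    "eventTime": "2024-05-02T14:03:11Z",
    "eventSource": "ec2.amazonaws.com",
    "eventName": "ModifyInstanceAttribute",
    "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"},
    "sourceIPAddress": "198.51.100.7",
    "requestParameters": {
        "instanceId": "i-0123456789abcdef0",
        "instanceType": {"value": "m5.large"},
    },
}


def summarize(record: dict) -> dict:
    """Extract who/when/what from a CloudTrail record."""
    return {
        "who": record.get("userIdentity", {}).get("arn"),
        "when": record.get("eventTime"),
        "action": f"{record.get('eventSource')}:{record.get('eventName')}",
        "requested": record.get("requestParameters") or {},
        # CloudTrail logs the request, not the resource's prior configuration,
        # so there is no field from which to fill in the old instance type.
        "before": None,
    }


summary = summarize(record)
print(summary["action"], summary["requested"]["instanceType"]["value"], summary["before"])
```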

AWS Config

AWS Config provides a detailed view of the configuration of AWS resources in your account. It records configuration changes and maintains a history of these changes, allowing you to see how configurations evolve over time. AWS Config can also evaluate resource configurations against desired settings using Config Rules.

However, AWS Config operates independently of your IaC tools like Terraform. It doesn't automatically correlate changes with your IaC definitions, making it difficult to determine if a change aligns with your intended configurations. Additionally, setting up and managing Config Rules for comprehensive coverage can be complex and time-consuming.

Amazon EventBridge

EventBridge is a serverless event bus that enables you to connect applications using data from your own applications, integrated SaaS applications, and AWS services. It allows you to ingest, filter, and route events to various targets. While EventBridge is powerful for building event-driven architectures, it doesn't inherently provide insights into configuration changes or drift. It requires you to define specific rules and targets, and it doesn't offer built-in mechanisms to compare current resource states against your IaC configurations.
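For illustration, this is roughly what a rule pattern looks like if you want EventBridge to route EC2 configuration changes recorded by CloudTrail. The pattern uses the standard [.code]source[.code], [.code]detail-type[.code], and [.code]detail[.code] fields. The [.code]matches[.code] function is a deliberately simplified, hypothetical re-implementation of EventBridge's exact-value matching. It supports no prefix, numeric, or anything-but operators and no array-valued event fields, and it is included only to show what a pattern does and doesn't check.

```python
# EventBridge event pattern: selected EC2 write calls delivered via CloudTrail.
PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["ec2.amazonaws.com"],
        "eventName": ["ModifyInstanceAttribute", "AuthorizeSecurityGroupIngress"],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified EventBridge matching: exact values only, no operators or array fields."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern objects must match nested event objects.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # A list in the pattern means "any of these values".
            return False
    return True


event = {
    "source": "aws.ec2",
    "detail-type": "AWS API Call via CloudTrail",
    "detail": {"eventSource": "ec2.amazonaws.com", "eventName": "ModifyInstanceAttribute",
               "requestParameters": {"instanceType": {"value": "m5.large"}}},
}
print(matches(PATTERN, event))  # True
```

Even when the rule fires, the event says only that [.code]ModifyInstanceAttribute[.code] was called. Deciding whether that contradicts your Terraform code is still left to you.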

In summary, while AWS provides tools that offer valuable information about your cloud environment, they each have limitations in providing a comprehensive, cohesive view of configuration changes and drift, particularly in relation to your IaC definitions. This gap necessitates additional solutions to effectively monitor and manage your infrastructure's state.

Tracking and Auditing Cloud Changes with Firefly’s Event Center

Firefly's Event Center gives you a real-time, detailed view of all configuration changes, called mutation events, across your AWS, GCP, or Azure accounts. A mutation event is any change to the configuration of a resource. This includes updates made manually in the AWS Console (ClickOps), through CLI commands or SDKs, or via automated tools like Terraform. For AWS accounts, Firefly detects these changes by ingesting data from AWS CloudTrail and processing it into a clear, searchable timeline.

Each mutation event captures the exact details of the change:

- What resource was changed (e.g., an EC2 instance, an S3 bucket, a security group)

- Which attributes were modified, with before-and-after values

- When the change happened

- Who made the change (IAM user, role, or CI/CD system)

- Where it came from (e.g., AWS Console, CLI, SDK, or IaC pipeline)

This level of detail allows Firefly to provide full visibility and traceability across your infrastructure. It detects drift by comparing the live configuration of cloud assets with the configurations stored in the Terraform state file. Rather than relying solely on IaC outputs, Firefly continuously monitors the actual state of resources in the cloud. Any configuration change, whether manual or automated, triggers a new mutation revision. ClickOps events are identified through CloudTrail, and if they result in a configuration change, they are logged as both ClickOps and mutation events. This ensures comprehensive insight into all changes, whether initiated through code or the console.
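Firefly's own classification logic isn't described here, but the following is a rough approximation of how console, CLI, SDK, and IaC activity can be told apart in raw CloudTrail records. It is a heuristic sketch under stated assumptions. It relies on CloudTrail's [.code]readOnly[.code] and [.code]sessionCredentialFromConsole[.code] fields and on [.code]userAgent[.code] substrings, since the Terraform AWS provider and the AWS CLI identify themselves there. The sample user-agent strings are illustrative, and pipelines that customize their user agent would be misclassified.

```python
def classify_source(record: dict) -> str:
    """Bucket a CloudTrail record as 'skip', 'console', 'iac', 'cli', or 'sdk' (heuristic)."""
    # Read-only calls (Describe*, List*, Get*) cannot mutate configuration.
    if record.get("readOnly") in (True, "true"):
        return "skip"
    # CloudTrail includes this field, set to "true", only for console sessions.
    if str(record.get("sessionCredentialFromConsole", "")).lower() == "true":
        return "console"
    agent = (record.get("userAgent") or "").lower()
    if "terraform" in agent:
        return "iac"
    if "aws-cli" in agent:
        return "cli"
    # Anything else: boto3, other SDKs, or unidentified automation.
    return "sdk"


samples = [
    {"readOnly": False, "sessionCredentialFromConsole": "true", "userAgent": "AWS Internal"},
    {"readOnly": False, "userAgent": "APN/1.0 HashiCorp/1.0 Terraform/1.7.0 terraform-provider-aws/5.40.0"},
    {"readOnly": False, "userAgent": "aws-cli/2.15.0 Python/3.11.6"},
    {"readOnly": True, "userAgent": "aws-cli/2.15.0 Python/3.11.6"},
]
print([classify_source(r) for r in samples])  # ['console', 'iac', 'cli', 'skip']
```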

For more details on how Firefly ingests and processes mutation events, see the Firefly Event Center documentation.

Here’s what you can do and see with Firefly’s Event Center:

- Get a Timeline of Changes

Firefly shows a chronological list of changes across your cloud accounts. You can see exactly what changed, when it changed, and who triggered it. Each event includes the resource, the attributes affected, the actor (IAM identity), source IP, and the specific before-and-after diff.

- See All Change Sources

Firefly captures changes from any source: manual changes through the AWS Console (ClickOps), CLI commands, SDK calls, and automated IaC pipelines like Terraform.

- Filter and Search

You can filter mutation events by event type (ClickOps, CLI/SDK, IaC), AWS service, region, resource type, user, or time window. This makes it easy to find specific changes or narrow down the scope during an incident.

- Ensure Clear Accountability

Every change is linked to the IAM user, role, or service account that made it. You know exactly who changed what, which helps with debugging, post-incident reviews, and audits.

- Integrate with Your Alerts

Firefly can send notifications when high-risk changes are detected, so you get real-time alerts for critical mutations like security group updates, public S3 buckets, or production database modifications.
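At its core, filtering a change timeline like this is ordinary structured querying. Here is a minimal sketch using a hypothetical [.code]MutationEvent[.code] record that mirrors the fields listed above. The field names and the [.code]filter_events[.code] helper are assumptions, not Firefly's schema or API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class MutationEvent:
    """Hypothetical change record (not Firefly's schema)."""
    resource: str
    service: str
    region: str
    actor: str
    source: str  # "clickops", "cli_sdk", or "iac"
    time: datetime
    diff: dict = field(default_factory=dict)  # attribute -> (before, after)


def filter_events(events, *, source=None, service=None, region=None, since=None):
    """Return matching events, oldest first; None means 'don't filter on this field'."""
    selected = [
        e for e in events
        if (source is None or e.source == source)
        and (service is None or e.service == service)
        and (region is None or e.region == region)
        and (since is None or e.time >= since)
    ]
    return sorted(selected, key=lambda e: e.time)


now = datetime(2024, 5, 2, 15, 0, tzinfo=timezone.utc)
events = [
    MutationEvent("sg-0123", "ec2", "eu-north-1", "alice", "clickops", now - timedelta(hours=1)),
    MutationEvent("my-bucket", "s3", "eu-north-1", "ci-role", "iac", now - timedelta(hours=2)),
    MutationEvent("i-0abc", "ec2", "eu-north-1", "bob", "clickops", now - timedelta(days=3)),
]
recent = filter_events(events, source="clickops", since=now - timedelta(hours=24))
print([e.resource for e in recent])  # ['sg-0123']
```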

Detecting Mutations with Firefly

To see Firefly’s Event Center in action, we provisioned two resources using Terraform:

- An S3 bucket with versioning explicitly disabled

- An EC2 instance with a public IP address assigned

We then simulated changes via the AWS Console:

- Enabled versioning on the S3 bucket

- Removed the public IP assignment from the EC2 instance

Firefly’s Event Center immediately flagged these as mutation events. It displayed clear before/after diffs and metadata showing the source (AWS Console), time, and affected resource as shown in the snapshot below:

Here's how Firefly presented the changes as individual mutation events. For the S3 bucket, Firefly detected that the [.code]versioning[.code] attribute had been modified. The before state showed [.code]versioning.[0].enabled: false[.code], while the after state showed [.code]versioning.[0].enabled: true[.code], indicating that versioning had been enabled manually through the AWS Console, outside of the IaC workflow.

For the EC2 instance, Firefly captured multiple attribute changes. The [.code]associate_public_ip_address[.code] setting changed from [.code]true[.code] to [.code]false[.code]. Along with this, the [.code]public_dns[.code] and [.code]public_ip[.code] fields were cleared, going from specific values ([.code]ec2-16-16-233-141.eu-north-1.compute.amazonaws.com[.code] and [.code]16.16.233.141[.code]) to blank. This showed that the EC2 instance's public IP was manually removed in the AWS Console, resulting in drift from the Terraform-defined state.
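Diffs such as [.code]versioning.[0].enabled[.code] can be produced by flattening nested attributes into dotted paths and comparing the results. The sketch below reproduces the path notation shown above. It is an assumption about one way to build such paths, not Firefly's implementation, and it represents a blanked value as an empty string.

```python
from __future__ import annotations

from typing import Any


def flatten(value: Any, prefix: str = "") -> dict[str, Any]:
    """Flatten nested dicts/lists into paths like 'versioning.[0].enabled'."""
    if isinstance(value, dict):
        items: dict[str, Any] = {}
        for key, child in value.items():
            items.update(flatten(child, f"{prefix}.{key}" if prefix else str(key)))
        return items
    if isinstance(value, list):
        items = {}
        for index, child in enumerate(value):
            items.update(flatten(child, f"{prefix}.[{index}]" if prefix else f"[{index}]"))
        return items
    return {prefix: value}


def attribute_diff(before: dict, after: dict) -> dict[str, tuple[Any, Any]]:
    """Return {path: (before, after)} for every leaf attribute whose value changed."""
    flat_before, flat_after = flatten(before), flatten(after)
    changed = {}
    for path in sorted(flat_before.keys() | flat_after.keys()):
        old, new = flat_before.get(path), flat_after.get(path)
        if old != new:
            changed[path] = (old, new)
    return changed


s3_before = {"versioning": [{"enabled": False}]}
s3_after = {"versioning": [{"enabled": True}]}
print(attribute_diff(s3_before, s3_after))  # {'versioning.[0].enabled': (False, True)}

ec2_before = {"associate_public_ip_address": True, "public_ip": "16.16.233.141",
              "public_dns": "ec2-16-16-233-141.eu-north-1.compute.amazonaws.com"}
ec2_after = {"associate_public_ip_address": False, "public_ip": "", "public_dns": ""}
for path, (old, new) in attribute_diff(ec2_before, ec2_after).items():
    print(f"{path}: {old!r} -> {new!r}")
```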

These events were logged in Firefly’s Event Center with precise before-and-after diffs, making it clear what changed, where it changed, and who triggered it.

These events were automatically categorized under the “Mutations” and “ClickOps” filters. With no manual tagging or scripting, the Event Center surfaced unauthorized console changes, complete with actionable context. This makes it easy to detect configuration drift before it causes downtime, security exposure, or cost overruns.

Best Practices for Managing ClickOps and Drift

Firefly’s Event Center helps surface and track manual changes, but preventing configuration drift in the first place requires discipline and proper process. Here’s a breakdown of practical best practices that help keep environments aligned and minimize the risk of ClickOps incidents:

1. Use Lifecycle Ignore for Known Exceptions

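When you know a resource will be updated outside of Terraform (for example, an auto-managed timestamp field or a service-generated ID), you can use Terraform's [.code]lifecycle[.code] block with [.code]ignore_changes[.code] to suppress unwanted diffs. This tells Terraform to ignore differences in the listed attributes when planning updates, so it won't try to revert them. The same approach fits cases where occasional manual changes are expected and approved, such as scaling an ASG during an incident. Adding [.code]lifecycle { ignore_changes = [desired_capacity] }[.code] to the [.code]aws_autoscaling_group[.code] resource stops [.code]terraform apply[.code] from resetting the capacity. Use it sparingly, because drift in an ignored attribute is no longer surfaced by [.code]terraform plan[.code].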
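Terraform evaluates [.code]ignore_changes[.code] inside its own planner. What follows is only a simplified Python model of the effect, using hypothetical attribute dictionaries for the ASG from the opening scenario. It shows which attributes an apply would reset, with and without [.code]desired_capacity[.code] in the ignore list.

```python
from __future__ import annotations


def planned_reverts(declared: dict, live: dict, ignore_changes: frozenset[str] = frozenset()) -> dict:
    """Simplified model: attributes an apply would reset from live back to declared."""
    return {
        attr: (live.get(attr), want)
        for attr, want in declared.items()
        if attr not in ignore_changes and live.get(attr) != want
    }


# Hypothetical ASG attributes: code says 3 instances, the on-call engineer scaled to 6.
declared = {"min_size": 3, "max_size": 10, "desired_capacity": 3}
live = {"min_size": 3, "max_size": 10, "desired_capacity": 6}

print(planned_reverts(declared, live))                                   # {'desired_capacity': (6, 3)}
print(planned_reverts(declared, live, frozenset({"desired_capacity"})))  # {}
```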

2. Run Terraform Plan Regularly in CI

Integrate [.code]terraform plan[.code] as part of your CI/CD pipeline. Run it on a schedule (e.g., nightly) or on every commit to catch drift early. If the plan shows unexpected changes, investigate them before merging any new code.

For example, set up a GitHub Actions workflow that runs [.code]terraform plan[.code] against the current state and posts a diff summary to your pull requests. This builds visibility into changes and flags potential drift as early as possible.
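A scheduled job can rely on [.code]terraform plan -detailed-exitcode[.code], which exits with 0 when there are no changes, 1 on error, and 2 when changes are pending. Below is a minimal Python wrapper. It assumes [.code]terraform[.code] is on the PATH and the working directory has already been initialized with [.code]terraform init[.code]; the function names are hypothetical.

```python
from __future__ import annotations

import subprocess


def interpret_plan_exit(code: int) -> str:
    """Map `terraform plan -detailed-exitcode` exit codes to a CI status."""
    if code == 0:
        return "clean"    # live infrastructure, state, and code agree
    if code == 2:
        return "changes"  # non-empty plan: drift or unapplied code changes
    return "error"        # 1 (or anything unexpected) means the plan itself failed


def run_plan(workdir: str) -> tuple[str, str]:
    """Run a non-interactive plan in `workdir`; return (status, plan output)."""
    result = subprocess.run(
        ["terraform", "plan", "-detailed-exitcode", "-input=false", "-no-color"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    return interpret_plan_exit(result.returncode), result.stdout
```

In a CI step, you would call [.code]run_plan(".")[.code] and fail the job, or post the returned plan output to the pull request, whenever the status isn't "clean". For a nightly job that should report only drift (and not unmerged code changes), adding [.code]-refresh-only[.code] to the plan command narrows the check to differences between the state and the real infrastructure.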

3. Reconcile State After Manual Changes

If a manual change is necessary, such as resizing an RDS instance during an incident, reconcile Terraform with the actual cloud configuration afterward so the next apply doesn't undo it. In most cases the resource is still tracked in state, so the core step is to update the .tf files to match the live settings and confirm that [.code]terraform plan[.code] reports no changes. If the state entry no longer points at the right resource (for example, because the resource was recreated outside Terraform and has a new ID), first remove it with [.code]terraform state rm[.code], then use [.code]terraform import[.code] to bring the live resource back under that address before updating the code. Either way, the goal is for the code, the state file, and the live infrastructure to agree, so the next [.code]terraform apply[.code] doesn't produce unexpected changes or overwrites.
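The command sequence can be sketched as a small helper. Everything here is hypothetical: the [.code]reconcile_commands[.code] name, the resource address, and the RDS identifier. The helper only builds the argument lists, so you can print or review them before running anything. [.code]terraform state rm[.code] and [.code]terraform import[.code] are included only when the state entry has to be re-bound to the live resource. The final plan with [.code]-detailed-exitcode[.code] should exit with 0 once the code matches reality.

```python
from __future__ import annotations

import shlex


def reconcile_commands(address: str, live_id: str, rebind: bool) -> list[list[str]]:
    """Build the Terraform CLI calls for reconciling one resource (hypothetical helper).

    Editing the .tf files to match the live settings is a manual step that
    must happen before the final plan is run.
    """
    commands: list[list[str]] = []
    if rebind:
        # Drop the stale state entry, then bind the address to the live resource.
        commands.append(["terraform", "state", "rm", address])
        commands.append(["terraform", "import", address, live_id])
    # Exit code 0 here means code, state, and the cloud agree.
    commands.append(["terraform", "plan", "-detailed-exitcode"])
    return commands


for argv in reconcile_commands("aws_db_instance.main", "prod-db", rebind=True):
    print(shlex.join(argv))
```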

Building Complete Visibility into AWS ClickOps with Firefly

Firefly's Event Center helps teams gain precise visibility into infrastructure changes by ingesting data directly from AWS CloudTrail. API calls, whether made through the AWS Console, CLI, SDKs, or automation tools, are captured and processed. Firefly doesn't just log the raw API event. It parses the data, links it to the specific resource, and shows exactly what changed. This lets you see real-time, granular differences between your declared infrastructure and what's actually running in AWS.

Firefly is also key for:

Detecting Console (AWS UI) Changes

ClickOps changes, made through the AWS Console, bypass your Infrastructure as Code (IaC) pipeline. Firefly identifies these changes by analyzing CloudTrail events and showing which resource was changed and exactly what was modified. For example, if an engineer enables versioning on an S3 bucket via the console, Firefly will display the before/after diff:

[.code]versioning.enabled: false[.code] → [.code]versioning.enabled: true[.code].

It will also tag the change as a ClickOps event, so you can filter and review all manual console changes in one place.

Drift Analysis

Firefly compares the current state of your AWS resources, as seen in CloudTrail, against what’s defined in your Terraform configuration. If there’s a mismatch, it flags the change as drift. For instance, if your Terraform code defines an EC2 instance type as [.code]t3.medium[.code], but a console change upgraded it to [.code]m5.large[.code], Firefly will catch the change, show the diff, and tag it as drift. This lets you reconcile changes back into code or revert them to the expected state.
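As a rough illustration of the same kind of comparison (not Firefly's implementation), the sketch below reads Terraform state in the JSON format produced by [.code]terraform show -json[.code], indexes resources by address, and compares a few watched attributes with live values. Normally the live values would come from AWS APIs. Here they are a hypothetical hard-coded dictionary. Because it compares against state, the result reflects your configuration only if the last apply succeeded.

```python
from __future__ import annotations

import json


def state_attributes(state_json: str) -> dict[str, dict]:
    """Map resource address -> attribute values from `terraform show -json` output."""
    found: dict[str, dict] = {}

    def walk(module: dict) -> None:
        for resource in module.get("resources", []):
            found[resource["address"]] = resource.get("values", {})
        for child in module.get("child_modules", []):
            walk(child)

    walk(json.loads(state_json).get("values", {}).get("root_module", {}))
    return found


def find_drift(state_json: str, live: dict[str, dict], watched: tuple[str, ...]) -> dict:
    """Return {address: {attribute: (state_value, live_value)}} for watched attributes."""
    drift: dict[str, dict] = {}
    for address, recorded in state_attributes(state_json).items():
        actual = live.get(address)
        if actual is None:
            continue  # deleted or not inventoried; out of scope for this sketch
        changed = {
            attr: (recorded.get(attr), actual.get(attr))
            for attr in watched
            if attr in recorded and recorded.get(attr) != actual.get(attr)
        }
        if changed:
            drift[address] = changed
    return drift


state = json.dumps({"values": {"root_module": {"resources": [
    {"address": "aws_instance.web", "type": "aws_instance", "name": "web",
     "values": {"instance_type": "t3.medium"}},
]}}})
live = {"aws_instance.web": {"instance_type": "m5.large"}}
print(find_drift(state, live, ("instance_type",)))
# {'aws_instance.web': {'instance_type': ('t3.medium', 'm5.large')}}
```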

Compliance Auditing

For compliance-driven environments (SOC 2, ISO 27001, or internal policy frameworks), Firefly creates a reliable audit trail of all infrastructure changes. Every mutation event is logged with metadata: resource type, change details, timestamp, and the IAM principal responsible. This makes it possible to review, validate, and document all changes against policy requirements, ensuring there are no hidden modifications that violate governance standards.

Try Firefly to see it for yourself, or request a demo.

FAQs

How does IaC help to prevent configuration drift?

Infrastructure as Code (IaC) tools like Terraform define your desired infrastructure state in version-controlled files. By applying these configurations consistently through pipelines, you ensure that your infrastructure matches the code. Any changes made outside of the IaC workflow, like console changes, create drift. Regular terraform plan runs, combined with code reviews, help catch drift early and reconcile it before it causes issues in production.

What is ClickOps vs DevOps?

ClickOps refers to making manual infrastructure changes directly through a cloud provider’s console, like the AWS Management Console, without using IaC tools. These changes are fast but often undocumented, untracked, and hard to audit. DevOps, on the other hand, emphasizes automation, reproducibility, and consistency. DevOps workflows use IaC tools, CI/CD pipelines, and version control to ensure infrastructure is deployed and managed in a controlled, traceable manner.

What is drift in infrastructure?

Drift occurs when the actual state of cloud infrastructure diverges from the state defined in your IaC configuration files. For example, if your Terraform code defines an EC2 instance type as t3.medium, but someone manually updates it to m5.large through the console, the two states are no longer in sync. This can lead to unexpected behavior, deployment failures, and compliance gaps.

What is ClickOps in AWS?

In AWS, ClickOps refers to manual changes made through the AWS Management Console, such as modifying an EC2 instance, updating an S3 bucket, or changing security group rules, without using Terraform, CloudFormation, or other IaC tools. These changes are not captured in your codebase, making it hard to track, audit, or revert them through your normal deployment processes.

What are infrastructure mutations in cloud platforms?

Infrastructure mutations are any changes to the configuration of cloud resources. This includes changes made through IaC tools, API calls, SDKs, the console, or third-party services. For example, updating an EC2 instance type, modifying an S3 bucket policy, or enabling point-in-time recovery on a DynamoDB table are all infrastructure mutations. Tracking these mutations is critical to understanding who changed what in your cloud environment and ensuring your infrastructure stays aligned with your intended design.
